Data observability is a relatively new term that has been gaining traction in the world of data engineering over the past few years. But what exactly is it? And more importantly, why should you care? In this blog post, I will try to answer these questions and more. We will discuss data observability, how it differs from other approaches, and why it is becoming increasingly important for businesses today by focusing on the ten key points you absolutely need to know about this new category.

By the end of this post, you will have a better understanding of this growing trend and be able to decide whether or not it is something you should invest in.

Let’s first start with a brief definition of data observability.

Data observability is the practice of monitoring data as it flows in order to detect and fix any issues that may arise in the data pipelines. This allows organizations to catch and correct data errors before they cause significant damage, leading to increased efficiency and productivity of the data teams. Some of the benefits of data observability include the following:

- Increased trust in data

- Improved decision-making due to better visibility of the data

- Reduced data entropy

Let’s now dive into the 10 things data leaders absolutely need to know about data observability.

1. Data Observability originated in software observability

The origin of the concept of data observability can be found in control theory, and its mathematical definition is the measure of how well the state of a system can be inferred from its outputs. The concept of observability was then applied to software with the DevOps revolution, in which companies like Datadog and New Relic revolutionized the software engineering world by enabling engineers to get a better view of the health status of the systems.

Software engineers cannot imagine not having the centralized view across their systems provided by software observability tools. These tools allow developers to see how all the pieces of their infrastructure fit together and help them diagnose and fix problems quickly. As the cloud allows hosting more and more infrastructure components — like databases, servers, and API endpoints — it is more important than ever to have these tools in place in order to keep your systems running smoothly.

Today, the data space is undergoing the same challenges that software engineering faced a decade ago. As data volumes continue to grow, so does the challenge of ensuring data quality. Many organizations are finding that they are struggling to keep up with the increasing number of data use cases and products that require high-quality data. Additionally, the higher probability of data breaking means that businesses can no longer afford to take their data quality for granted. In order to ensure that their data is of the highest quality, businesses started investing in tools and resources, as well as putting in place processes and protocols for maintaining data quality. This is leading to the creation of the category of data observability.

2. And just like software observability, data observability is built on 3 pillars

There are several parallelisms between software and data observability, one of them being that they are built on three main pillars.

The three pillars of software observability are metrics, traces, and logs. Metrics are used to measure the performance and health of a system. Traces are used to track the path of a request through a system. Logs are used to store information about events that occur in a system. Collecting data from these three sources can help you understand how your system is performing and identify potential problems.

Similarly, the three pillars of data observability are metrics, metadata, and lineage. Metrics help you measure the quality of your data, metadata gives you access to data about your data, and lineage tells you how data assets are related to each other. Together, these three pillars help you understand your data infrastructure and make sure your data is always in a good state.

3. Data observability goes beyond data quality testing and data monitoring

Now you may be asking yourself: “What about data quality testing and monitoring solutions? How do they fit in all this?”. Let’s dive into it.

Now that companies are dealing with more data, it is becoming increasingly difficult to detect issues and predict problems through testing alone. To cover all of the potential data issues, organizations put hundreds of tests in place. However, there are countless other possibilities for data to break throughout the entire data journey. This means that even with all of the tests in place, there is no guarantee that the data will be perfect. In order to ensure the quality of the data, companies need to be proactive in identifying and correcting any data issues as they occur.

Data quality monitoring is often used interchangeably with data observability, while in reality, it can be considered the first step needed to enable it. In other words, data quality monitoring alerts users when data assets or sets don’t match the established metrics and parameters. In this way, data teams get visibility over the quality status of the data assets. However, this approach ultimately presents the same limitations as testing. Although you can have visibility over the status of your data assets, you have no way of knowing how to solve the issue. Data observability is designed to overcome this challenge.

Data observability provides users with a more complete view of their data by allowing them to not only assess the health status of their data but also understand how that data is being used and what issues may be causing problems through data lineage. This allows users to troubleshoot issues more effectively and improve the overall quality of their data.

4. There are 3 main reasons behind bad-quality data in organizations

There are many reasons that can lead to bad-quality data, but it is possible to identify three main reasons:

- The increasing number of third-party sources companies rely on. Poor data governance. Lack of standardization within an organization. The first reason, the increasing number of third-party sources companies rely on, is due to the fact that many businesses today are relying on external data sources to make decisions. This includes data from social media, sensors, devices, and more.

- The complexity of data pipelines. And as organizations are integrating data from more and more external sources, data pipelines are getting increasingly complex.

- The increasing number of data consumers. As data becomes more accessible, there are more people who want to use it. This includes analysts, data scientists, and even business users. The problem is that not everyone has the same level of understanding when it comes to data. This can lead to misunderstandings and incorrect usage of data.

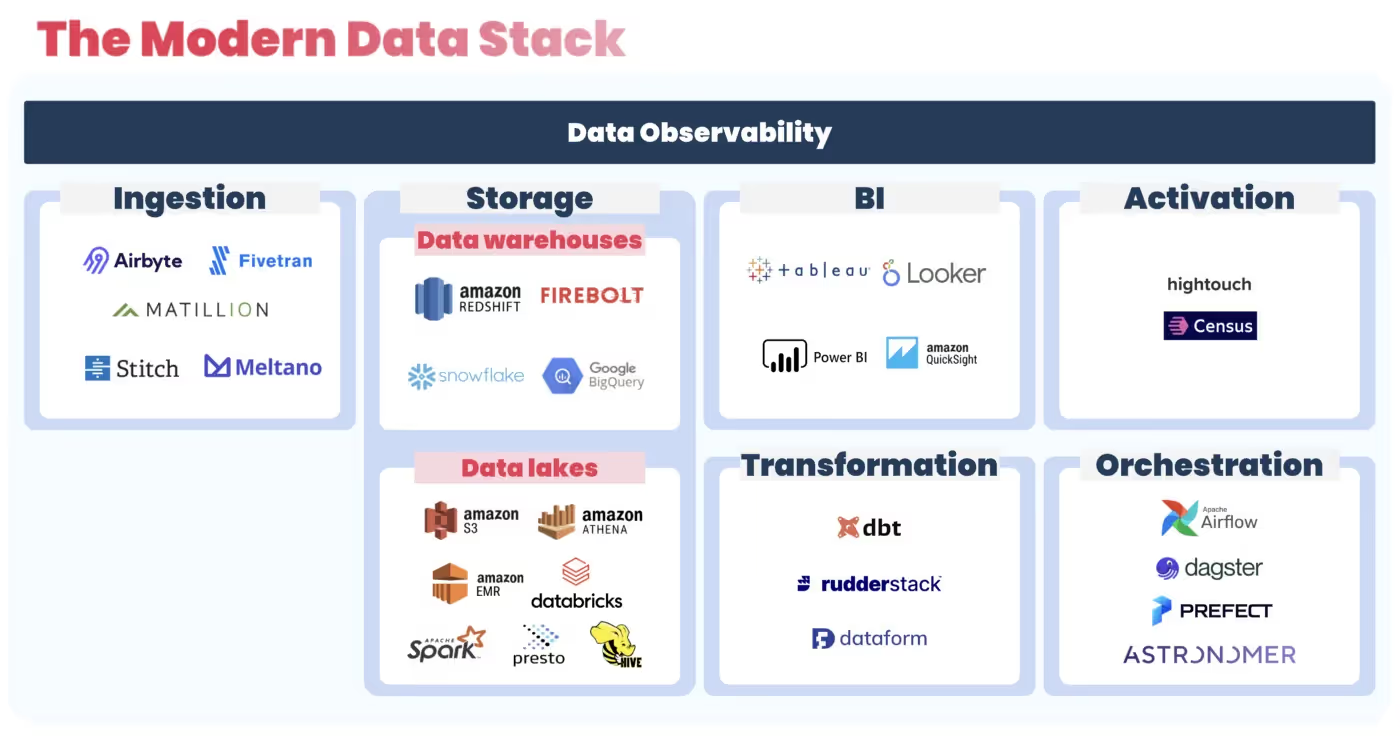

5. Data observability “sits on top” of the existing data stack of an organization

Let’s quickly go through some basics. What do we mean by data stack? It can be described as a collection of tools and technologies that help businesses collect, transform, store and utilize data for analytics and machine learning (ML) use cases.

The stack is cloud-based and typically includes the following layers:

- Integration, ETL/ELT tools: which extract and transport data from the source systems into the data storage.

- Storage: where the data is stored, typically a cloud data warehouse or data lake.

- Transformation: where the raw data is transformed and put into a format and structure that is appropriate for analytics.

- Business Intelligence/Data Visualization: where the data is consumed by business users, typically in dashboards and charts.

- Workflow orchestration: this is the piece that holds everything together by enabling users to create, schedule, and monitor data pipelines.

- Reverse ETL: this layer allows data consumers to move data from the storage to other cloud-based applications so that it can be used for operational analytics (CRM or personal CRM, marketing, finance, etc.).

Data observability, by integrating into tools at each stage of the modern data stack, is capable of providing data engineering teams, or, more in general, data practitioners, with an overview of everything that is going on with their data. So, data observability should be considered the layer that sits on top of the organization’s existing data pipeline, ensuring that data quality is high at each stage of its lifecycle.

6. Data lineage is what enables data teams to move from firefighting to fire prevention

Lineage is the tracing of data assets and their dependencies within an organization. By understanding how these are related, businesses can better manage their data platforms.

So, data lineage is the ability to track data through its lifecycle, from the time it’s entered into the system to when it’s used in reports or dashboards. This information is incredibly valuable for troubleshooting data issues, as it can quickly show you where the data went wrong.

Lineage models can also link to the applications, jobs, and orchestrators that gave rise to anomalous data. This makes it easy to track down the source of the issue and fix it.

7. We can summarize the benefits of data observability in 3 main points

Data observability is essential for any organization that wants to make the most of its data. By understanding how data is transformed, produced, and used across the entire ecosystem, businesses can identify and correct any errors that may occur.

Data observability also enhances data discovery and data quality, ensuring that data is accurate and consistent at every stage of its lifecycle. In general, it’s possible to identify three main benefits of data observability in business:

- Improved decision-making. Data observability can help you identify and quickly fix issues that impact business decisions. By monitoring data in near-real-time, you can find problems early and correct them before they cause long-term damage. Basing business decisions on accurate data necessarily leads to improved decision-making. Think of all the benefits of better decision-making — increased profits, stronger competitive edge, and higher customer satisfaction, only to cite a few.

- Increased efficiency. Data observability can lead to increased efficiency by allowing you to see problems and potential problems before they happen. This way, you can fix them before they cause any damage or slow down your process. Having this information available allows you to make more informed decisions, which can lead to a more streamlined and efficient operation within the data team.

- Regulations compliance. There are a few ways in which not implementing data observability can create data governance challenges within organizations. Today’s data flows are dynamic, making it challenging to keep the documentation of your data up-to-date. On top of this, as the data stack grows, so does the data team. More people working with data means more risk of unexpected changes to data that can decrease data quality. Additionally, as different roles have different expertise and confidence around data, things can quickly start to go wrong. Failing to monitor data quality issues and troubleshoot them swiftly can lead to broader problems and cause companies not to be compliant with regulations like GDPR and HIPAA.

8. Whether companies should start implementing data observability depends on multiple factors

There is no simple answer to the question of whether or not companies should invest in a data quality program. The decision will depend on multiple factors, such as the company’s size, industry, and specific needs.

Some of the factors that companies should consider when deciding whether or not to invest in data observability include:

The company’s size

It can be helpful for companies of any size, but it is often seen as particularly important for large organizations. This is because large companies tend to have more data, more complex data ecosystems, and larger data teams, making it harder to keep track of everything that is going on. Data observability can help these companies get a better understanding of their data and ensure that it is accurate and reliable.

Level of data maturity/Adoption of a modern data stack

Data observability can be helpful for companies at any stage of data maturity. As soon as organizations set up a data stack, they should start monitoring the data passing through all of the different tools to prevent data reliability issues.

Budget

Data observability can be an expensive investment, depending on the size of the company and the complexity of its data ecosystem. Companies should weigh the cost of data observability against the benefits it can provide.

Companies should invest in the data observability tool that best meets their needs. There are a variety of data observability tools on the market, each with its own specificities.

The company’s industry

Data observability can be helpful for companies and data teams in any industry, but some industries are more heavily regulated than others. For example, the healthcare industry is subject to strict regulations around data privacy, while the financial industry is subject to regulations around data security. Data observability can help companies in these industries comply with these regulations by allowing them to monitor their data.

The company’s specific needs

Data observability can be helpful for companies that need to comply with regulations or improve their data quality. Data observability can also be helpful for data teams that want to get more value from their data or improve their decision-making.

Ultimately, the decision of whether or not to invest in data observability will come down to the company’s specific needs.

9. Data observability tools/solutions: One size does not fit all

The right solution for your organization will depend on your specific needs and goals. There are a variety of potential solutions on the market, ranging from open-source to commercial. But even within the category of commercial solutions, we can find important differences in terms of capabilities and integrations with other tools used by data engineers. When it comes to data observability, one size does not fit all. The right solution for your organization will depend on your specific needs and goals.

You can read more on what to look for when choosing a data observability tool in one of my previous blogs.

10. The category of data observability is young but growing at a really fast pace

Companies are starting to realize the importance of being able to track and monitor their data. As more and more data is used for business decision-making, the need for better data observability will continue to grow. Data observability is a growing trend, with more and more companies investing in tools and technologies to help them monitor their data and many companies being created with the aim of developing data observability solutions.

Conclusion

Data observability is a complex and dynamic topic, but hopefully, this introduction has given you a better understanding of what it is and how it can be leveraged to benefit your organization. If you’re interested in learning more, reach out to contact@siffletdata.com!

%2520copy%2520(3).avif)

-p-500.png)