Shared Understanding. Ultimate Confidence. At Scale.

When everyone knows your data is systematically validated for quality, understands where it comes from and how it's transformed, and is aligned on freshness and SLAs, what’s not to trust?

Always Fresh. Always Validated.

No more explaining data discrepancies to the C-suite. Thanks to automatic and systematic validation, Sifflet ensures your data is always fresh and meets your quality requirements. Stakeholders know when data might be stale or interrupted, so they can make decisions with timely, accurate data.

- Automatically detect schema changes, null values, duplicates, or unexpected patterns that could compromise analysis.

- Set and monitor service-level agreements (SLAs) for critical data assets.

- Track when data was last updated and whether it meets freshness requirements.
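To make the freshness idea concrete, here is a minimal sketch of a generic freshness check: it compares a table's last-update timestamp with a maximum allowed age and reports whether the asset is still within its SLA. This is an illustration under stated assumptions, not Sifflet's implementation. The `FreshnessSLA` type, the `check_freshness` function, the table name, and the six-hour threshold are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class FreshnessSLA:
    """Hypothetical freshness requirement for a single data asset."""
    table: str
    max_age: timedelta  # how old the latest update may be before the data counts as stale


def check_freshness(sla: FreshnessSLA, last_updated: datetime,
                    now: Optional[datetime] = None) -> dict:
    """Report the age of the latest update and whether it meets the SLA."""
    now = now or datetime.now(timezone.utc)
    age = now - last_updated
    return {"table": sla.table, "age": age, "fresh": age <= sla.max_age}


if __name__ == "__main__":
    # Hypothetical example: an orders table that should refresh at least every 6 hours.
    sla = FreshnessSLA(table="analytics.orders", max_age=timedelta(hours=6))
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    last = datetime(2024, 1, 1, 3, 0, tzinfo=timezone.utc)
    result = check_freshness(sla, last_updated=last, now=now)
    print(result["fresh"], result["age"])  # False 9:00:00
```

Passing `now` explicitly keeps the check deterministic and testable. In practice the last-update timestamp would come from warehouse metadata or a load-time column, which this sketch does not cover.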

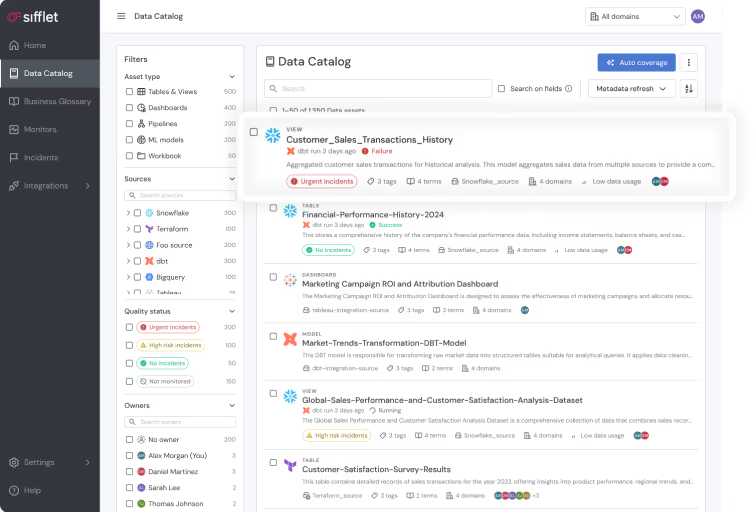

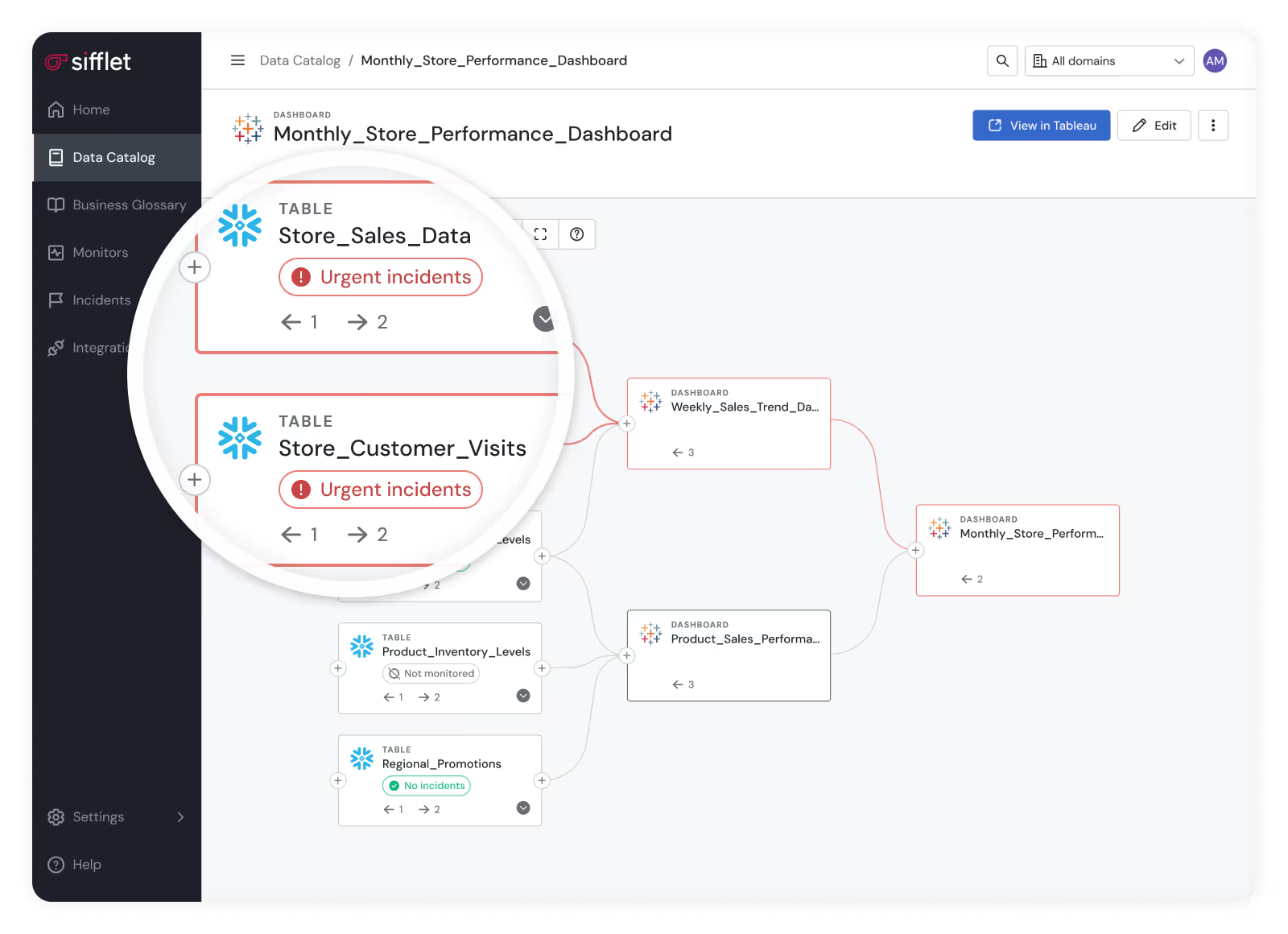

Understand Your Data, Inside and Out

Give data analysts and business users ultimate clarity. Sifflet helps teams understand their data across its whole lifecycle and provides full context, such as business definitions, known limitations, and update frequencies, so everyone works from the same assumptions.

- Create transparency by helping users understand data pipelines, so they always know where data comes from and how it’s transformed.

- Develop a shared understanding of data that prevents misinterpretation and builds confidence in analytics outputs.

- Quickly assess which downstream reports and dashboards are affected when a data issue occurs.

Still have a question in mind?

Contact Us

Frequently asked questions

How does reverse ETL improve data reliability and reduce manual data requests?

Reverse ETL automates the syncing of data from your warehouse to business apps, helping reduce the number of manual data requests across teams. This improves data reliability by ensuring consistent, up-to-date information is available where it’s needed most, while also supporting SLA compliance and data automation efforts.

How does Sifflet help optimize Data as a Product initiatives?

Sifflet enhances DaaP initiatives by providing comprehensive data observability dashboards, real-time metrics, and anomaly detection. It streamlines data pipeline monitoring and supports proactive data quality checks, helping teams ensure their data products are accurate, well-governed, and ready for use or monetization.

How does Sifflet help with monitoring data distribution?

Sifflet makes distribution monitoring easy by using statistical profiling to learn what 'normal' looks like in your data. It then alerts you when patterns drift from those baselines. This helps you maintain SLA compliance and avoid surprises in dashboards or ML models. Plus, it's all automated within our data observability platform so you can focus on solving problems, not just finding them.
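As a rough illustration of baseline-and-drift monitoring, the sketch below "learns" normal behavior for one metric (for example, a column's daily null rate) from its history and flags a new value whose z-score exceeds a threshold. Sifflet's actual profiling models are not described here; the simple z-score test is a stand-in technique, and the names `Baseline`, `learn_baseline`, `is_drift`, and the default threshold of 3 are hypothetical.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Baseline:
    """Summary statistics learned from past observations of a single metric."""
    mean: float
    stdev: float


def learn_baseline(history: Sequence[float]) -> Baseline:
    """Profile historical values to define what 'normal' looks like."""
    if len(history) < 2:
        raise ValueError("need at least two historical points to estimate spread")
    return Baseline(mean=statistics.fmean(history), stdev=statistics.stdev(history))


def is_drift(value: float, baseline: Baseline, z_threshold: float = 3.0) -> bool:
    """Flag a value that sits more than z_threshold standard deviations from the mean."""
    if baseline.stdev == 0:
        # Constant history: any change at all is a deviation from the baseline.
        return value != baseline.mean
    z = abs(value - baseline.mean) / baseline.stdev
    return z > z_threshold


if __name__ == "__main__":
    # Hypothetical daily null rates for one column over the past week.
    null_rates = [0.010, 0.012, 0.011, 0.009, 0.010, 0.011, 0.012]
    baseline = learn_baseline(null_rates)
    print(is_drift(0.045, baseline))   # True: far outside the learned range
    print(is_drift(0.0115, baseline))  # False: within normal variation
```

A fixed z-score ignores seasonality and trends, so a production system would likely need richer baselines (for example, per weekday); this sketch only shows the core idea of comparing new observations against learned statistics.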

What role does Sifflet’s Data Catalog play in data governance?

Sifflet’s Data Catalog supports data governance by surfacing labels and tags, enabling classification of data assets, and linking business glossary terms for standardized definitions. This structured approach helps maintain compliance, manage costs, and ensure sensitive data is handled responsibly.

What does a modern data stack look like and why does it matter?

A modern data stack typically includes tools for ingestion, warehousing, transformation and business intelligence. For example, you might use Fivetran for ingestion, Snowflake for warehousing, dbt for transformation and Looker for analytics. Investing in the right observability tools across this stack is key to maintaining data reliability and enabling real-time metrics that support smart, data-driven decisions.

Why is the traditional approach to data observability no longer enough?

Great question! The old playbook for data observability focused heavily on technical infrastructure and treated data like servers — if the pipeline ran and the schema looked fine, the data was assumed to be trustworthy. But today, data is a strategic asset that powers business decisions, AI models, and customer experiences. At Sifflet, we believe modern observability platforms must go beyond uptime and freshness checks to provide context-aware insights that reflect real business impact.

Is Forge able to automatically fix data issues in my pipelines?

Forge doesn’t take action on its own, but it does provide smart, contextual guidance based on past fixes. It helps teams resolve issues faster while keeping you in full control of the resolution process, which is key for maintaining SLA compliance and data quality monitoring.

How does Sifflet use AI to improve data observability?

At Sifflet, we're integrating advanced AI models into our observability platform to enhance data quality monitoring and anomaly detection. Marie, our Machine Learning Engineer, has been instrumental in building intelligent systems that automatically detect issues across data pipelines, making it easier to maintain data reliability in real time.
