Big Data. Big Potential.

Sell data products that meet the most demanding standards of data reliability, quality and health.

Identify Opportunities

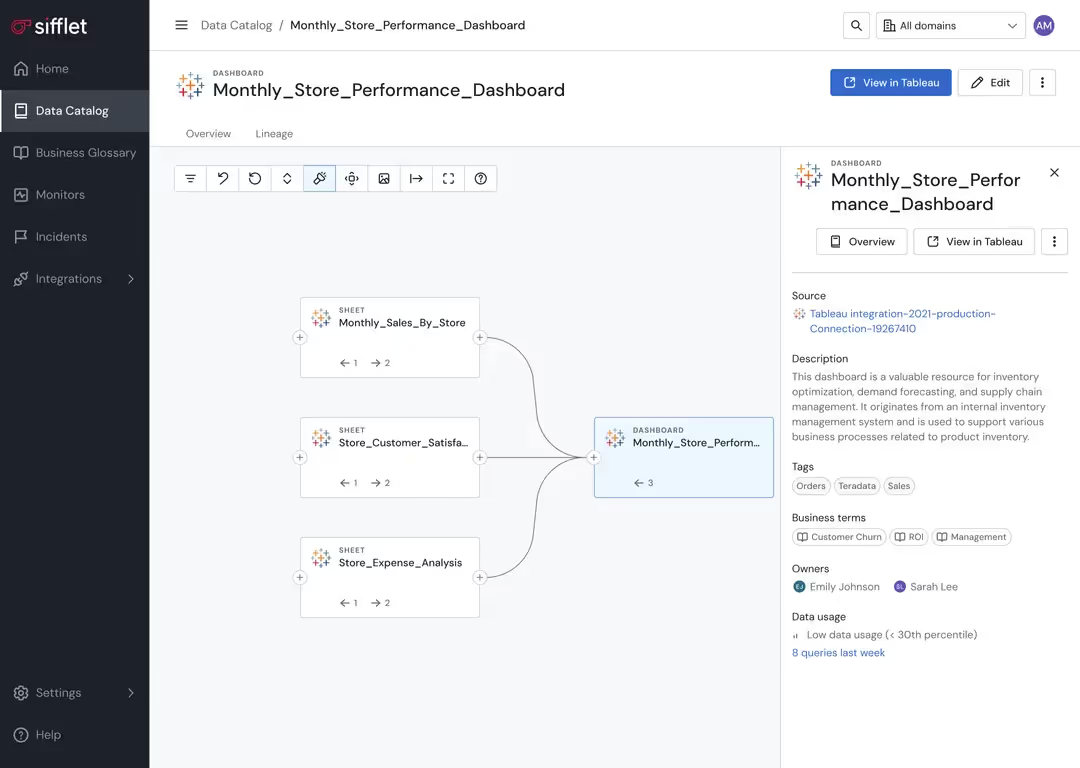

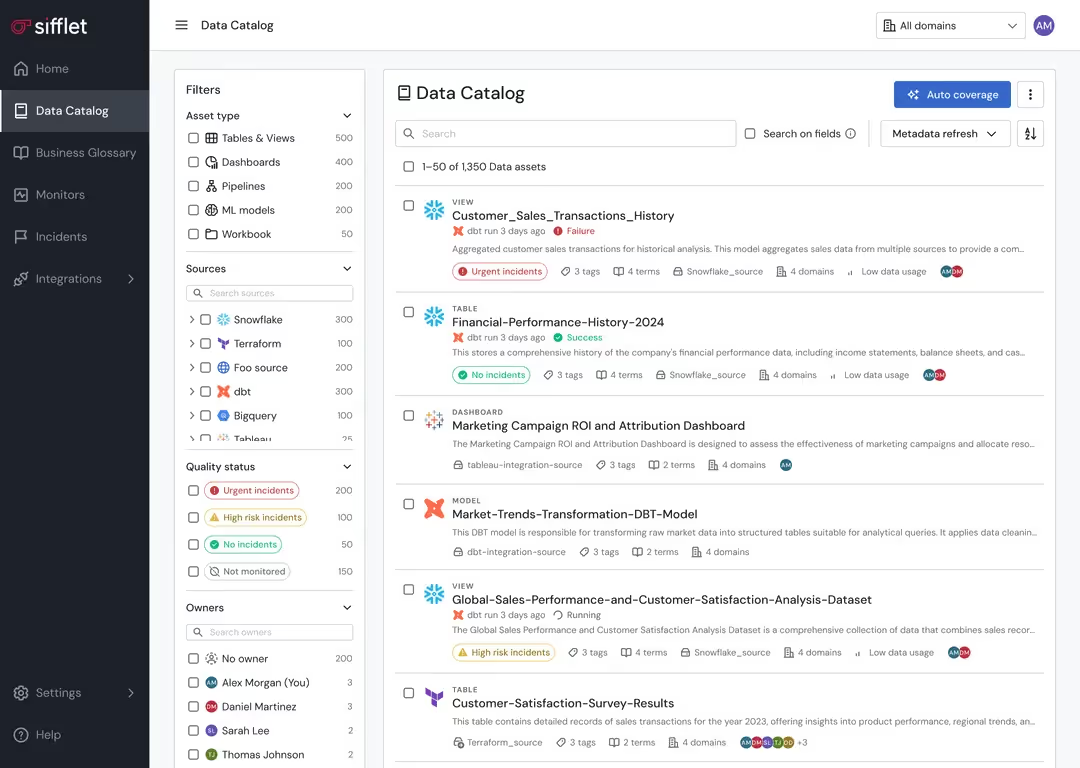

Monetizing data starts with identifying your highest potential data sets. Sifflet can highlight patterns in data usage and quality that suggest monetization potential and help you uncover data combinations that could create value.

- Dig into data usage patterns with usage analytics to identify high-value data sets

- Determine which data assets are most reliable and complete

Ensure Quality and Operational Excellence

It’s not enough to create a data product. Revenue depends on ensuring the highest levels of reliability and quality. Sifflet ensures quality and operational excellence to protect your revenue streams.

- Reduce the cost of maintaining your data products through automated monitoring

- Prevent and detect data quality issues before customers are impacted

- Empower rapid response to issues that could affect data product value

- Streamline data delivery and sharing processes

Frequently asked questions

Yes, Sifflet leverages AI to enhance data observability with features like anomaly detection and predictive insights. This ensures your data systems remain resilient and can support advanced analytics and AI-driven initiatives.
