Sifflet vs. Metaplane

See How Leading Teams Prevent Failures, Accelerate Analytics, And Turn Observability Into Business Value.

Business impact in context, not just anomalies

Faster root cause with lineage, change history and AI guidance

End-to-end monitoring coverage that adapts as pipelines evolve

Don't Just See Where It Breaks, Know Why!

Pipeline Monitoring ≠ Data Observability

3 Reasons Teams Switch from Metaplane to Sifflet

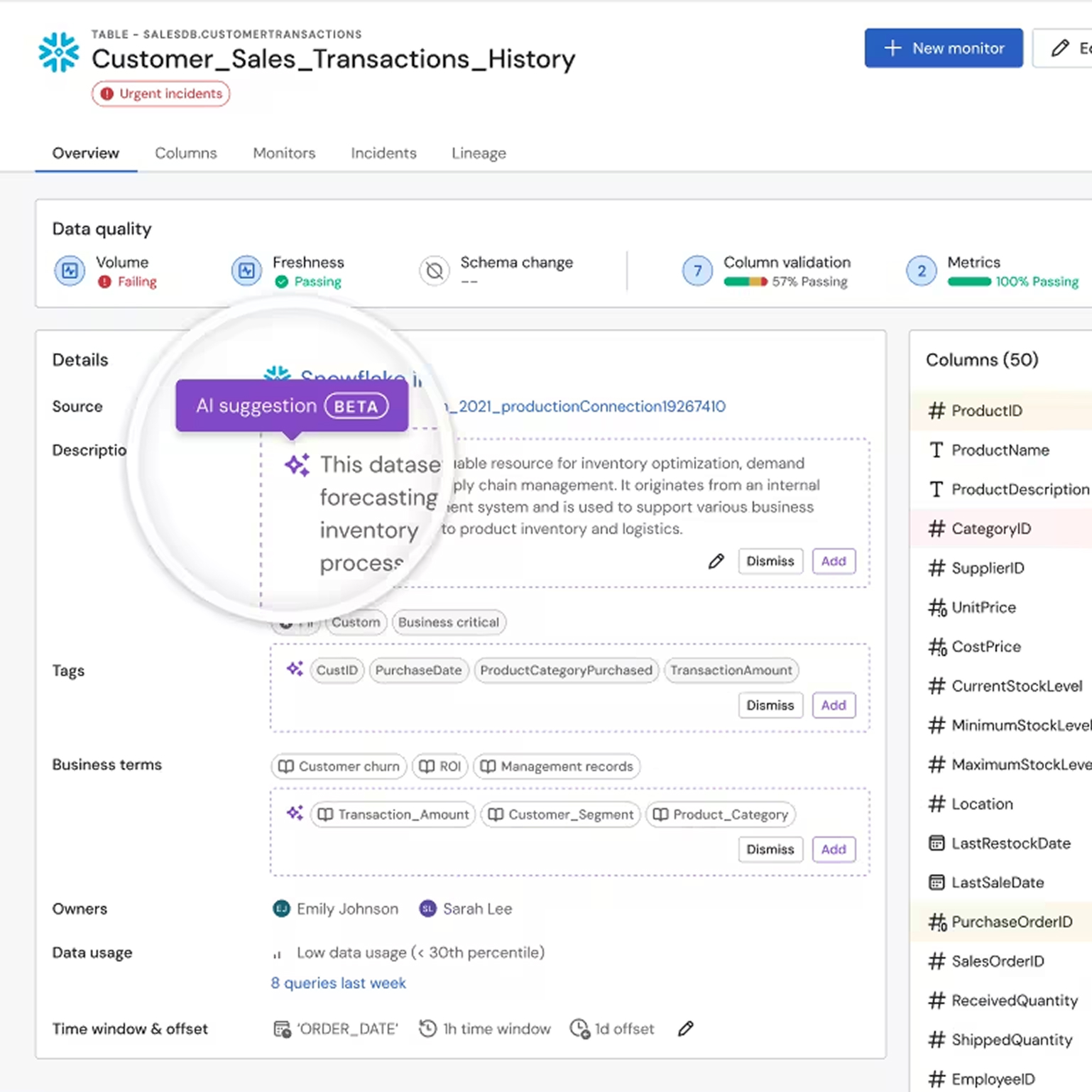

Business Impact Built In

Sifflet connects anomalies to lineage and business context. Issues get prioritized by downstream impact, not alert volume.

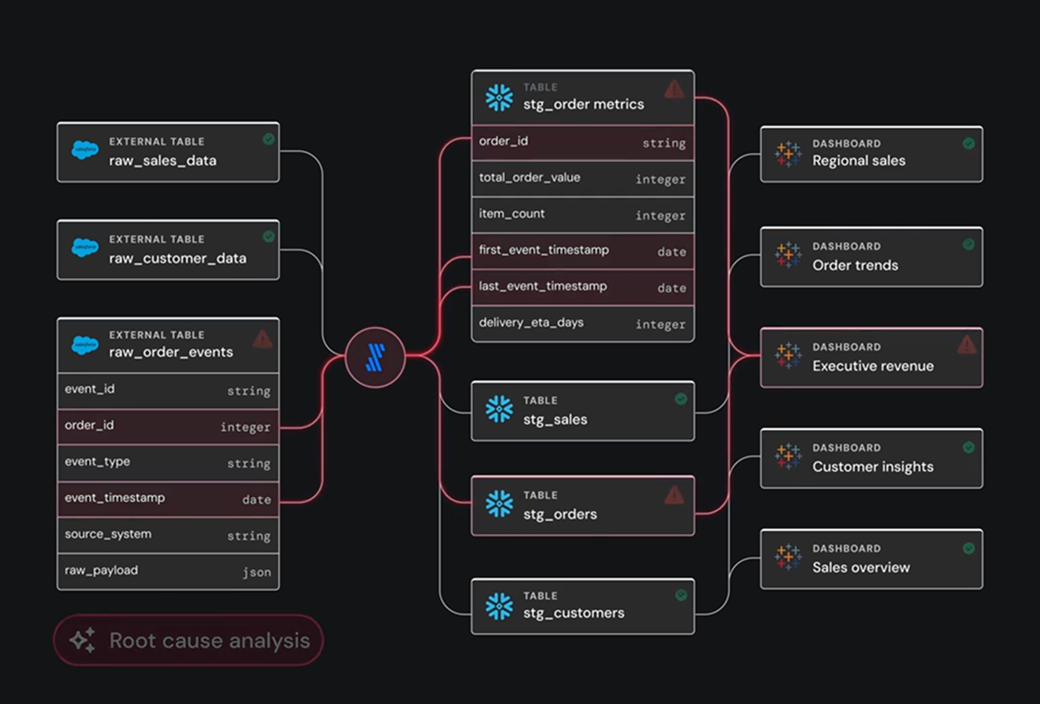

Root Cause in Minutes, Not Hours

Lineage, queries, logs, and change history are linked automatically. Root cause becomes visible without dashboard hunting.

Make Data Reliable for Every Stakeholder

Reliability becomes visible to both technical and business users, with a shared view of health, ownership, and impact.

Move From Reactive Alerts To Proactive AI Agents

Turn Raw Data Into Trusted Insights

Detects anomalies across the entire data stack

Combines OOTB checks, SQL rules, and NLP monitor creation

Predicts failures before they hit production

Prioritize What Drives Business Value

Alerts tied to business impact and usage

Highlights critical data for analytics & AI

Connects data health to dashboards & KPIs

Fix Issues Before They Spread

Automated RCA with health-aware lineage

Instantly identifies upstream root causes

Real-time collaboration via Insights extension

Empower Every Team — Not Just Engineers

Adaptive UX for tech & business users

Full catalog with glossary & usage tracking

Scales across large, distributed teams

Ready to Choose Between Sifflet and Metaplane?

Both platforms detect anomalies. Sifflet adds business context, impact analysis, and faster root cause workflows, built for teams scaling modern data stacks.

Book a Demo

Scale Monitoring Without Scaling Headcount

Boost Reliability And Turn Data Into Business Impact With

Enter the Proactive AI Era of Observability

Get a firsthand look at how Sifflet delivers proactive, intelligent data observability — built for the future.

Takes 30 minutes • No sales pitch • See it in action
