A Seriously Smart Upgrade.

Prevent, detect and resolve incidents faster than ever before. No matter what your data stack throws at you, your data quality will reach new levels of performance.

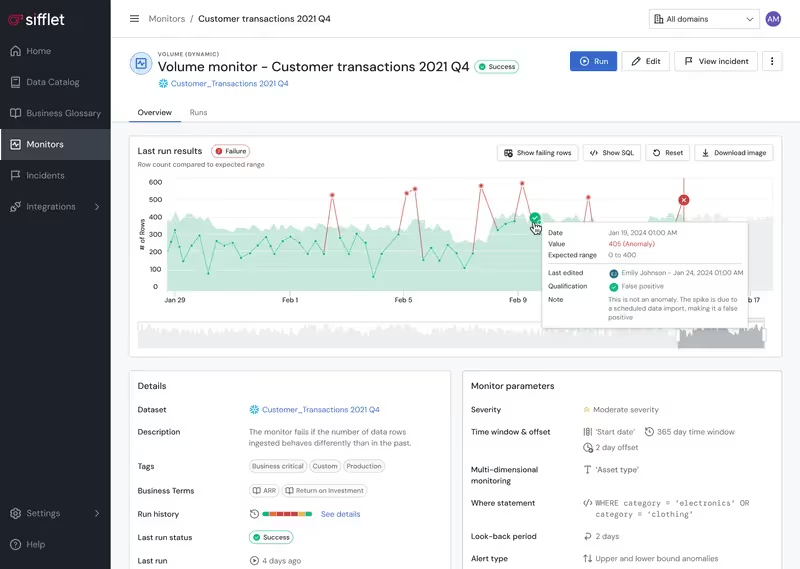

No More Overreacting

Sifflet takes you from reactive to proactive, with real-time detection and alerts that help you catch data disruptions before they reach downstream users. Watch your mean time to detection fall rapidly, even on the most complex data stacks.

- Advanced capabilities such as multidimensional monitoring help you catch complex data quality issues, even before anything breaks

- ML-based monitors shield your most business-critical data, so essential KPIs are protected and you get notified before there is business impact

- Out-of-the-box and customizable monitors give you comprehensive, end-to-end coverage, and AI helps them get smarter as they go, reducing your reactivity even more

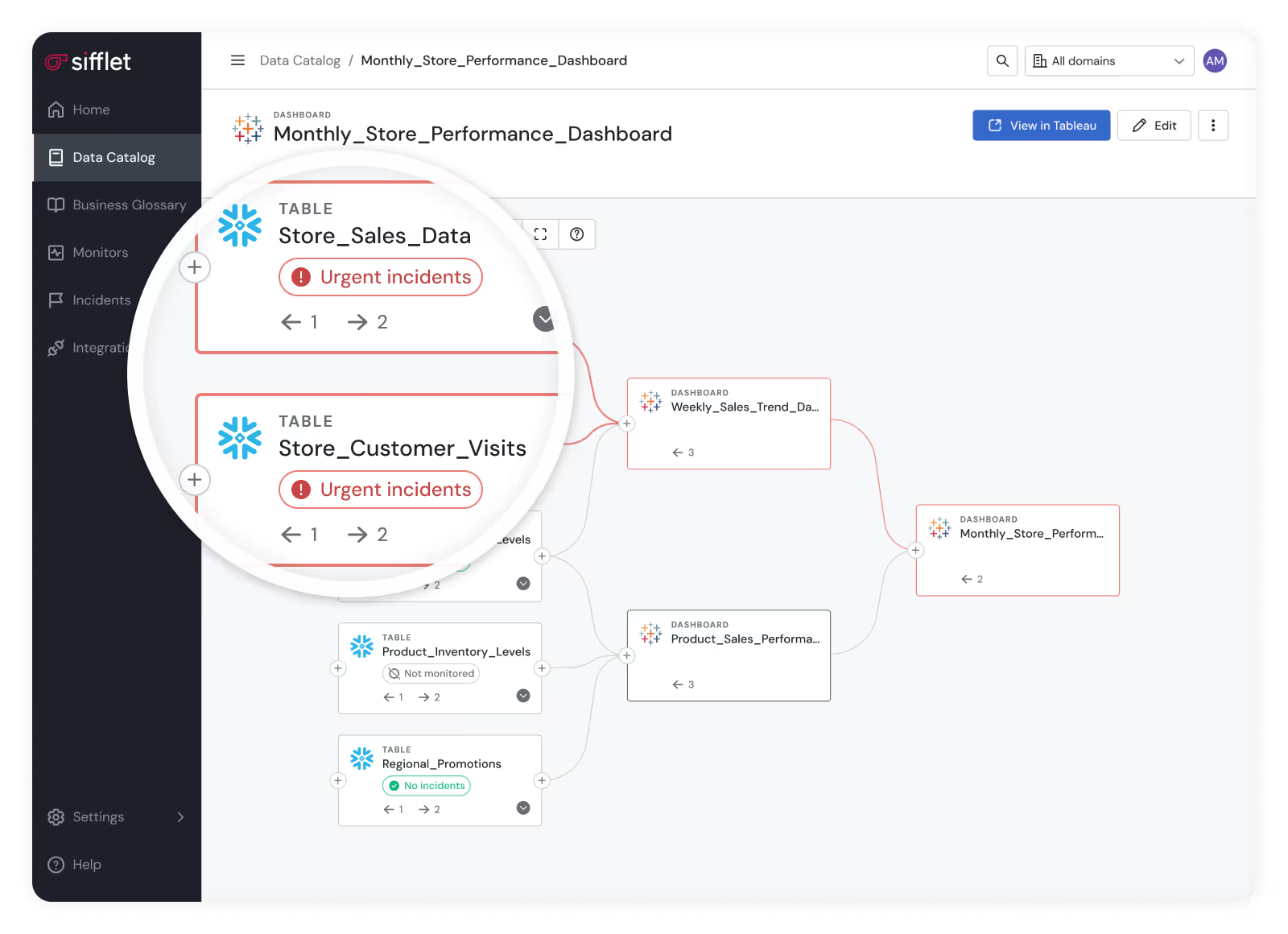

Resolutions in Record Time

Get to the root cause of incidents and resolve them in record time.

- Quickly understand the scope and impact of an incident thanks to detailed system visibility

- Trace data flow through your system, identify where issues start, and pinpoint downstream dependencies with data lineage, so business users get a seamless experience

- Halt the propagation of data quality anomalies with Sifflet’s Flow Stopper

Still have a question in mind?

Contact Us

Frequently asked questions

How does data observability improve the value of a data catalog?

Data observability enhances a data catalog by adding continuous monitoring, data lineage tracking, and real-time alerts. This means organizations can not only find their data but also trust its accuracy, freshness, and consistency. By integrating observability tools, a catalog becomes part of a dynamic system that supports SLA compliance and proactive data governance.

Why is data observability important in a modern data stack?

Data observability is crucial because it ensures your data is reliable, trustworthy, and ready for decision-making. It sits at the top of the modern data stack and helps teams detect issues like data drift, schema changes, or freshness problems before they impact downstream analytics. A strong observability platform like Sifflet gives you peace of mind and helps maintain data quality across all layers.

How did Sifflet support Meero’s incident management and root cause analysis efforts?

Sifflet provided Meero with powerful tools for root cause analysis and incident management. With features like data lineage tracking and automated alerts, the team could quickly trace issues back to their source and take action before they impacted business users.

What kind of insights can I gain by integrating Airbyte with Sifflet?

By integrating Airbyte with Sifflet, you unlock real-time insights into your data pipelines, including data freshness checks, anomaly detection, and complete data lineage tracking. This helps improve SLA compliance, reduces troubleshooting time, and boosts your confidence in data quality and pipeline health.

How does Sentinel help reduce alert fatigue in modern data environments?

Sentinel intelligently analyzes metadata like data lineage and schema changes to recommend what really needs monitoring. By focusing on high-impact areas, it cuts down on noise and helps teams manage alert fatigue while optimizing monitoring costs.

How does aligning data observability with business objectives improve outcomes?

Aligning data observability with business goals transforms data from a technical asset into a strategic one. By setting clear KPIs and linking data quality monitoring to business impact, teams can make smarter decisions, improve SLA compliance, and drive real value from their data investments.

How does Sifflet maintain visual and interaction consistency across its observability platform?

We use a reusable component library based on atomic design principles, along with UX writing guidelines to ensure consistent terminology. This helps users quickly understand telemetry instrumentation, metrics collection, and incident response workflows without needing to relearn interactions across different parts of the platform.

How does Sifflet support real-time data lineage and observability?

Sifflet provides automated, field-level data lineage integrated with real-time alerts and anomaly detection. It maps how data flows across your stack, enabling quick root cause analysis and impact assessments. With features like data drift detection, schema change tracking, and pipeline error alerting, Sifflet helps teams stay ahead of issues and maintain data reliability.
