Proactive access, quality and control

Empower data teams to detect and address issues proactively by providing them with tools to ensure data availability, usability, integrity, and security.

De-risked data discovery

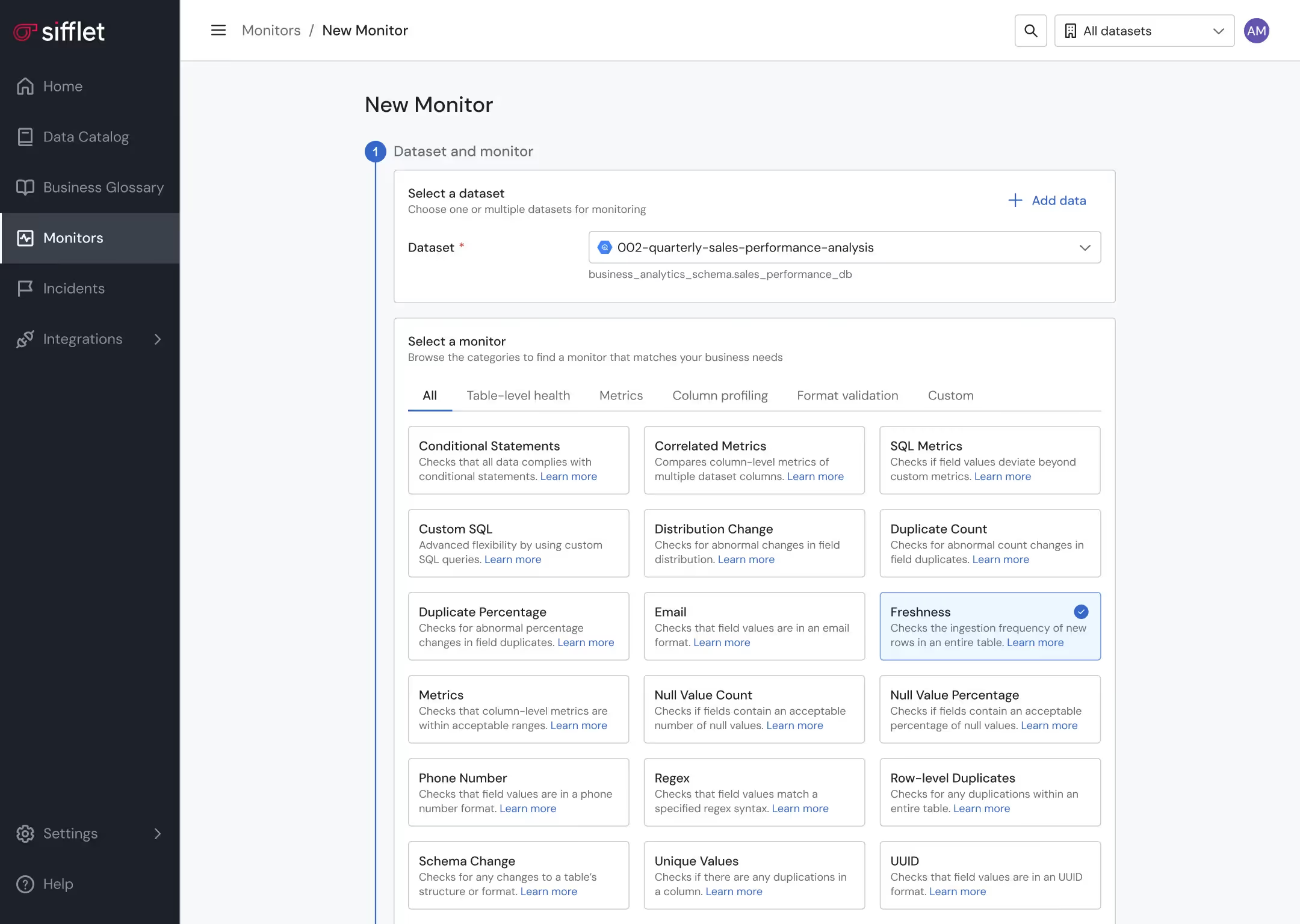

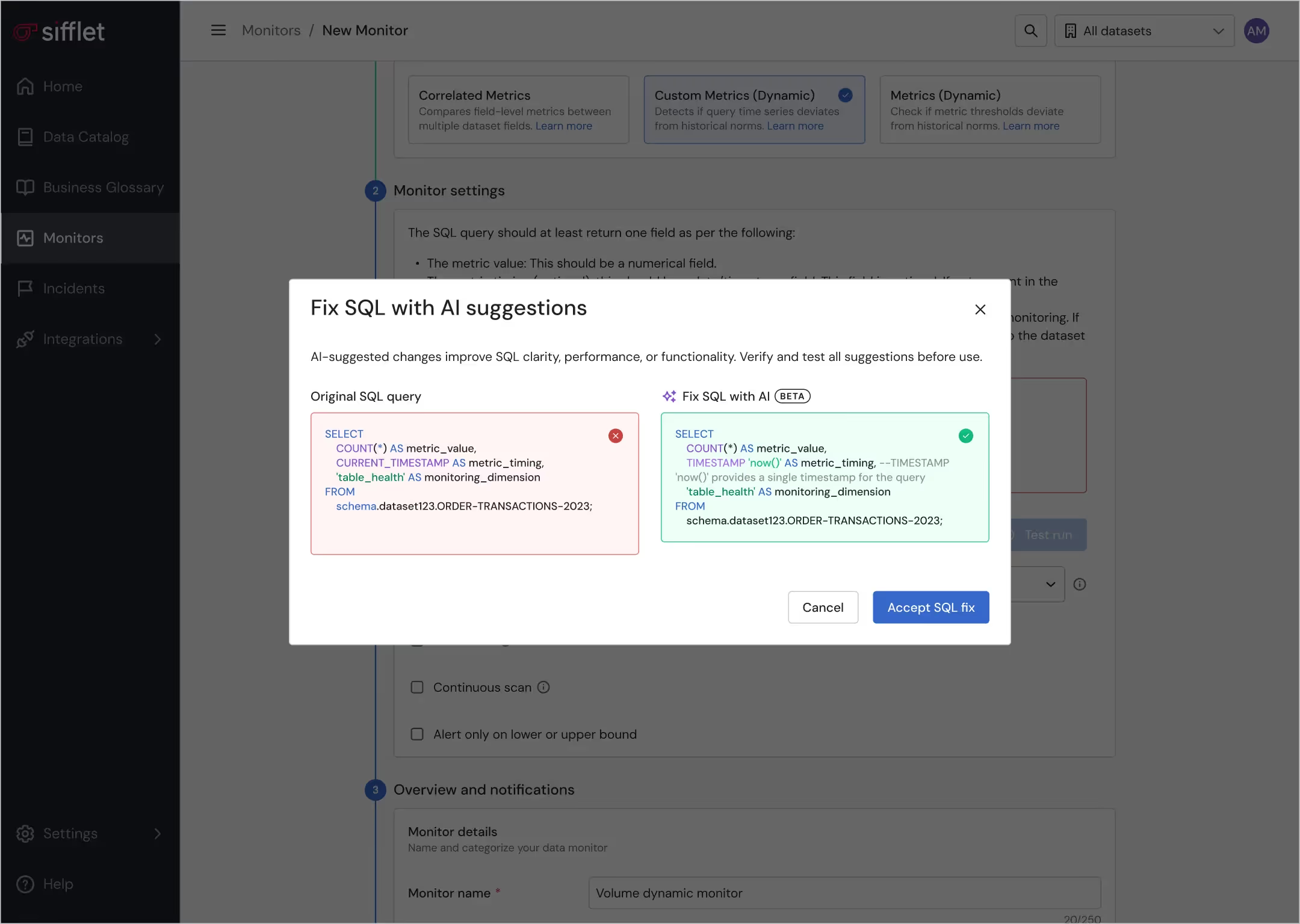

- Ensure proactive data quality with a large library of out-of-the-box monitors and a built-in notification system

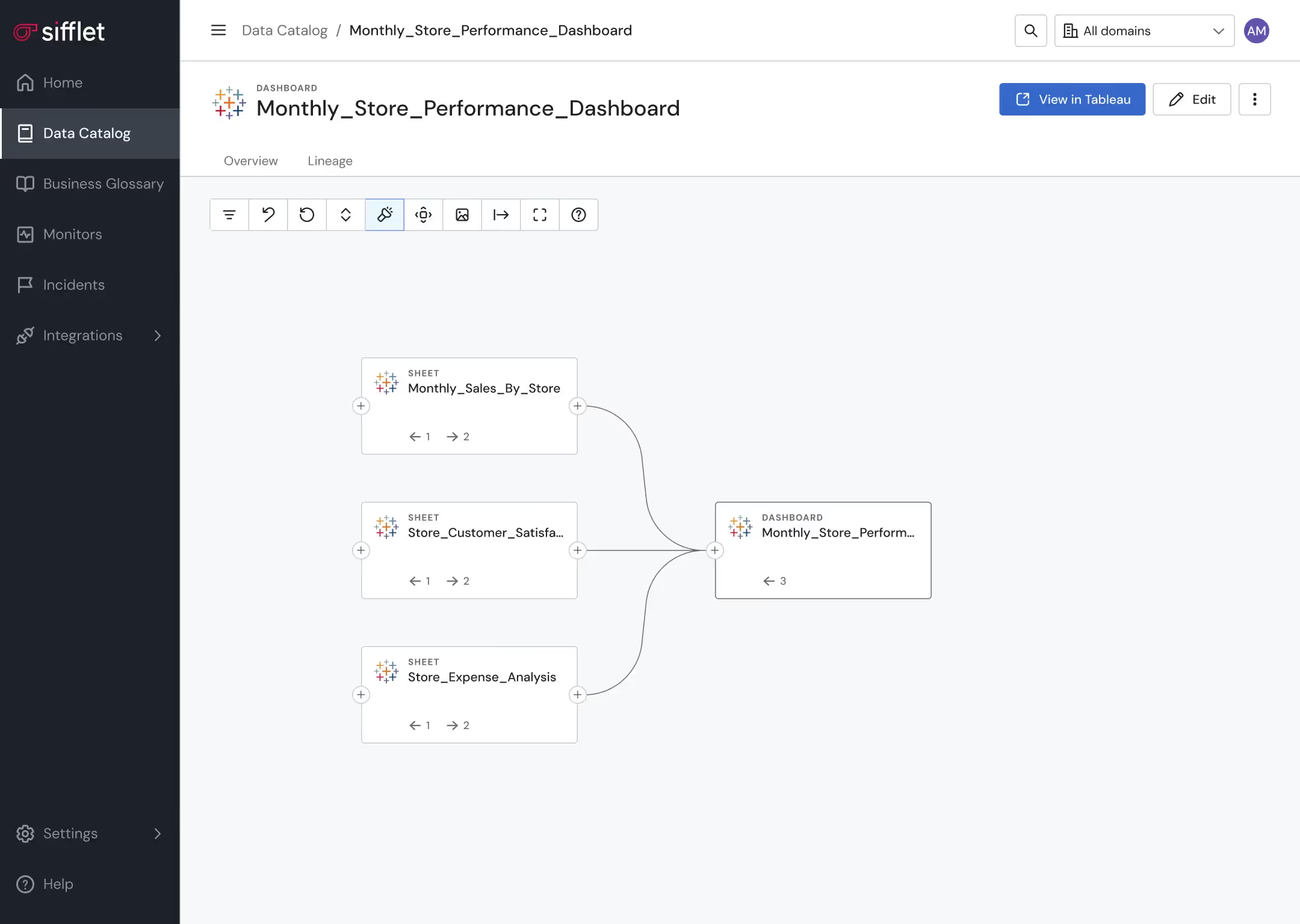

- Gain visibility over assets’ documentation and health status on the Data Catalog for safe data discovery

- Establish the official source of truth for key business concepts using the Business Glossary

- Leverage custom tagging to classify assets

Structured data observability platform

- Tailor data visibility for teams by grouping assets in domains that align with the company’s structure

- Define data ownership to improve accountability and smooth collaboration across teams

Secured data management

- Safeguard PII through ML-based detection


Frequently asked questions

Can better design really improve data reliability and efficiency?

Absolutely. A well-designed observability platform not only looks good but also enhances user efficiency and reduces errors. By streamlining workflows for tasks like root cause analysis and data drift detection, Sifflet helps teams maintain high data reliability while saving time and reducing cognitive load.

What is data volume and why is it so important to monitor?

Data volume refers to the quantity of data flowing through your pipelines. Monitoring it is critical because sudden drops, spikes, or duplicates can quietly break downstream logic and lead to incomplete analysis or compliance risks. With proper data volume monitoring in place, you can catch these anomalies early and ensure data reliability across your organization.
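To make the idea concrete, here is a minimal sketch of a volume check, assuming you already collect a daily row count per table. It is not Sifflet's implementation: the function name `volume_anomalies`, the 7-day trailing window, and the z-score threshold of 3 are illustrative assumptions.

```python
from statistics import mean, stdev


def volume_anomalies(row_counts, window=7, threshold=3.0):
    """Return the indices whose row count deviates from the trailing-window
    mean by more than `threshold` sample standard deviations.

    Hypothetical helper for illustration; window and threshold are assumptions.
    """
    flagged = []
    for i in range(window, len(row_counts)):
        history = row_counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is a deviation.
            if row_counts[i] != mu:
                flagged.append(i)
            continue
        if abs(row_counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up daily row counts with one sudden drop and one spike.
daily_rows = [1000, 1020, 990, 1010, 1005, 995, 1015, 400, 1008, 2100]
print(volume_anomalies(daily_rows))  # [7, 9]
```

A plain trailing window is easy to reason about, but a past anomaly inflates the window's spread for the next few days. Real monitors usually also account for seasonality and exclude known incidents from the baseline.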

How does data quality monitoring help improve data reliability?

Data quality monitoring is essential for maintaining trust in your data. A strong observability platform should offer features like anomaly detection, data profiling, and data validation rules. These tools help identify issues early, so you can fix them before they impact downstream analytics. It’s all about making sure your data is accurate, timely, and reliable.

How does Sifflet support reverse ETL and operational analytics?

Sifflet enhances reverse ETL workflows by providing data observability dashboards and real-time monitoring. Our platform ensures your data stays fresh, accurate, and actionable by enabling root cause analysis, data lineage tracking, and proactive anomaly detection across your entire pipeline.

Can Sifflet help with root cause analysis when there's a data issue?

Absolutely. Sifflet's built-in data lineage tracking plays a key role in root cause analysis. If a dashboard shows unexpected data, teams can trace the issue upstream through the lineage graph, identify where the problem started, and resolve it faster. This visibility makes troubleshooting much more efficient and collaborative.
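The upstream trace described above can be sketched as a graph walk. This is an illustrative example, not Sifflet's API: it assumes the lineage is available as a dictionary mapping each asset to its direct upstream assets, and the asset names and the helpers `upstream_assets` and `likely_root_causes` are hypothetical.

```python
from collections import deque


def upstream_assets(lineage, start):
    """Breadth-first walk from `start` through its upstream assets.

    `lineage` maps an asset name to the list of assets it reads from.
    """
    ordered = []
    visited = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for parent in lineage.get(node, []):
            if parent not in visited:
                visited.add(parent)
                ordered.append(parent)
                queue.append(parent)
    return ordered


def likely_root_causes(lineage, failing, start):
    """Failing assets on the upstream path that have no failing direct parent."""
    candidates = [a for a in [start] + upstream_assets(lineage, start) if a in failing]
    return [
        a for a in candidates
        if not any(parent in failing for parent in lineage.get(a, []))
    ]


# Hypothetical lineage: a dashboard fed by a fact table and two staging tables.
lineage = {
    "revenue_dashboard": ["fct_orders"],
    "fct_orders": ["stg_orders", "stg_customers"],
    "stg_orders": ["raw_orders"],
    "stg_customers": ["raw_customers"],
}
failing = {"revenue_dashboard", "fct_orders", "stg_orders", "raw_orders"}

print(likely_root_causes(lineage, failing, "revenue_dashboard"))  # ['raw_orders']
```

The heuristic here is simple: an asset that is failing while all of its direct parents are healthy is the most likely place the problem started.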

How do modern storage platforms like Snowflake and S3 support observability tools?

Modern platforms like Snowflake and Amazon S3 expose rich metadata and access patterns that observability tools can monitor. For example, Sifflet integrates with Snowflake to track schema changes, data freshness, and query patterns, while S3 integration enables us to monitor ingestion latency and file structure changes. These capabilities are key for real-time metrics and data quality monitoring.

Why is smart alerting important in data observability?

Smart alerting helps your team focus on what really matters. Instead of flooding your Slack with every minor issue, a good observability tool prioritizes alerts based on business impact and data asset importance. This reduces alert fatigue and ensures the right people get notified at the right time. Look for platforms that offer customizable severity levels, real-time alerts, email notifications, and integrations with incident management tools like PagerDuty.
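As a rough illustration of impact-based prioritization, the sketch below scores an alert by its severity and by how many dashboards depend on the affected asset, then picks a channel. Everything in it is an assumption made for the example (the `Alert` fields, the weights, the thresholds, and the channel names); it does not describe how any particular product routes alerts.

```python
from dataclasses import dataclass

# Hypothetical weights; a real setup would make these configurable.
SEVERITY_WEIGHT = {"low": 1, "medium": 2, "high": 3}


@dataclass
class Alert:
    asset: str
    severity: str               # "low", "medium", or "high"
    downstream_dashboards: int  # crude stand-in for business impact


def route(alert, page_threshold=6, chat_threshold=3):
    """Pick a notification channel from severity and downstream impact."""
    score = SEVERITY_WEIGHT[alert.severity] * (1 + alert.downstream_dashboards)
    if score >= page_threshold:
        return "pager"   # wake someone up
    if score >= chat_threshold:
        return "chat"    # post to the team channel
    return "digest"      # batch into a daily summary


print(route(Alert("fct_orders", "high", 4)))       # pager
print(route(Alert("stg_customers", "medium", 1)))  # chat
print(route(Alert("tmp_scratch", "low", 0)))       # digest
```

Routing low-impact alerts to a digest rather than dropping them keeps a record without adding to the noise in the team channel.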

What does Sifflet plan to do with the new $18M in funding?

We're excited to use this funding to accelerate product innovation, expand our North American presence, and grow our team. Our focus will be on enhancing AI-powered capabilities, improving data pipeline monitoring, and helping customers maintain data reliability at scale.
