Mitigate disruption and risks

Customers choose Sifflet for migrations because it unifies lineage, monitoring, and triage in one place, giving teams clear, business-relevant insights without tool-switching. Its AI speeds up root cause analysis, learns your environment, and cuts manual effort. Deployments typically go live in under an hour and scale fully in six weeks.

Pre-Migration: Baseline and Prepare

Create a complete inventory and establish trust baselines before any data is moved.

What Sifflet enables

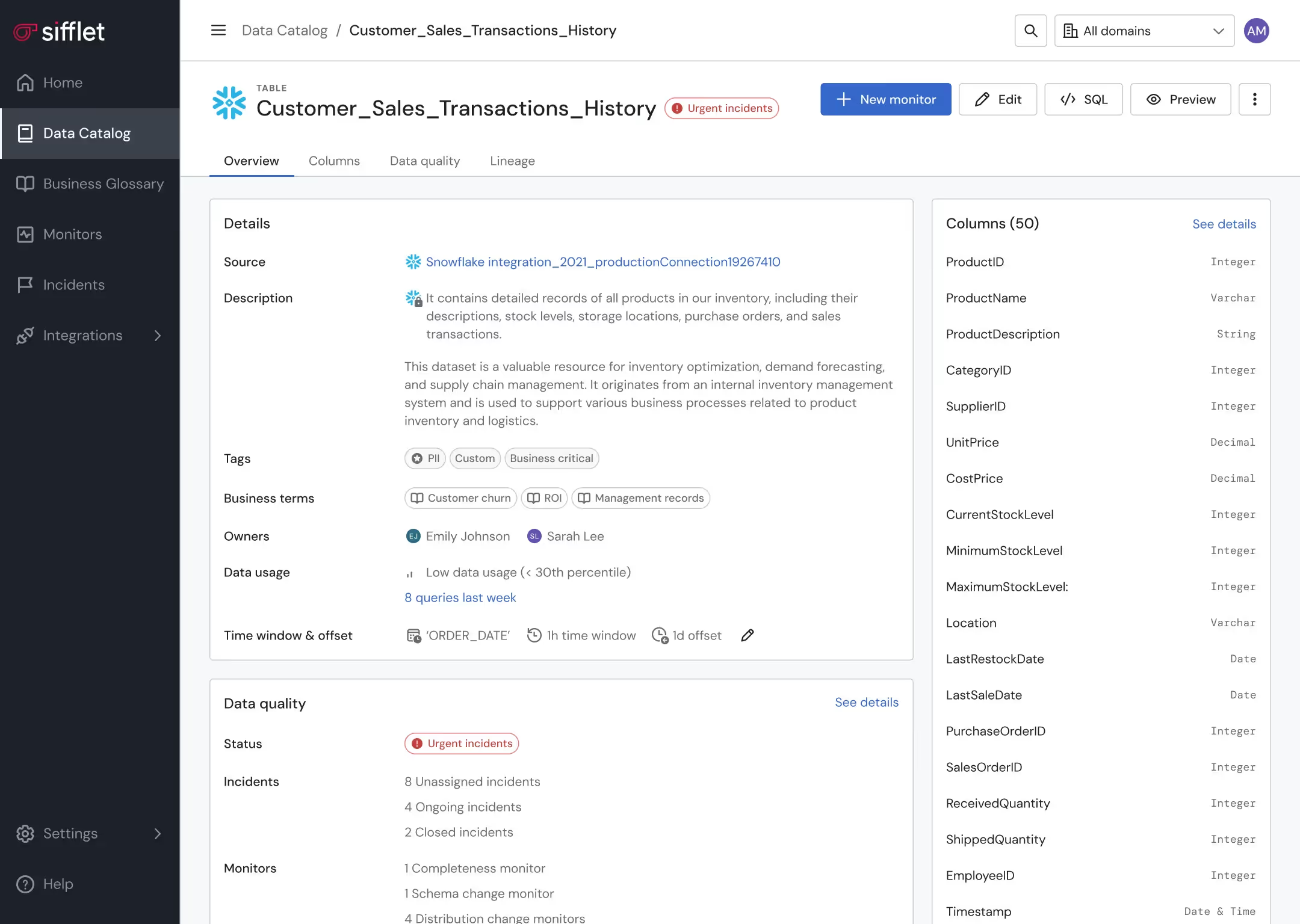

• End-to-end lineage mapping across your on-prem estate, so you know exactly which tables, dashboards, and KPIs depend on each other before changing pipelines.

• Automated data profiling and health scoring to establish quality baselines (volumes, distributions, freshness, schema shape) for every critical asset.

• Domain-level ownership so each business area knows its scope and responsibilities ahead of the migration.

• Monitors as Code to version and package all checks that will run pre- and post-migration.

Outcome: A clear, auditable understanding of what “good” looks like before the first batch of data is moved.
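To make the idea of a quality baseline concrete, here is a minimal sketch of the kind of profile that could be captured per table before migration: row volume, schema shape, null rate, a simple distribution summary, and the latest load timestamp. It is an illustration only, not Sifflet's implementation. The `profile_table` helper, the `orders` table, and the use of an in-memory SQLite database as a stand-in for the on-prem source are all assumptions made for the example.

```python
import sqlite3
import statistics


def profile_table(conn, table, numeric_col, ts_col):
    """Return a baseline dict: volume, schema shape, distribution, freshness."""
    cur = conn.cursor()
    row_count = cur.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    # PRAGMA table_info is SQLite-specific; other engines expose
    # column metadata through their own catalog views.
    schema = [(row[1], row[2]) for row in cur.execute(f"PRAGMA table_info({table})")]
    values = [
        row[0]
        for row in cur.execute(
            f"SELECT {numeric_col} FROM {table} WHERE {numeric_col} IS NOT NULL"
        )
    ]
    latest = cur.execute(f"SELECT MAX({ts_col}) FROM {table}").fetchone()[0]
    return {
        "row_count": row_count,
        "schema": schema,
        "null_rate": (row_count - len(values)) / row_count if row_count else 0.0,
        "mean": statistics.fmean(values) if values else None,
        "stdev": statistics.pstdev(values) if values else None,
        "latest_ts": latest,
    }


# Hypothetical sample table standing in for an on-prem asset.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL, loaded_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01"),
        (2, 20.0, "2024-01-02"),
        (3, None, "2024-01-03"),
        (4, 30.0, "2024-01-03"),
    ],
)
baseline = profile_table(conn, "orders", "amount", "loaded_at")
print(baseline)
```

Storing a dictionary like this for each critical asset gives a fixed reference point that the same profile, run against the migrated table, can be compared to.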

During Migration: Parallel Validation and Controlled Cutover

Continuously validate data between your on-prem and Snowflake environments.

What Sifflet enables

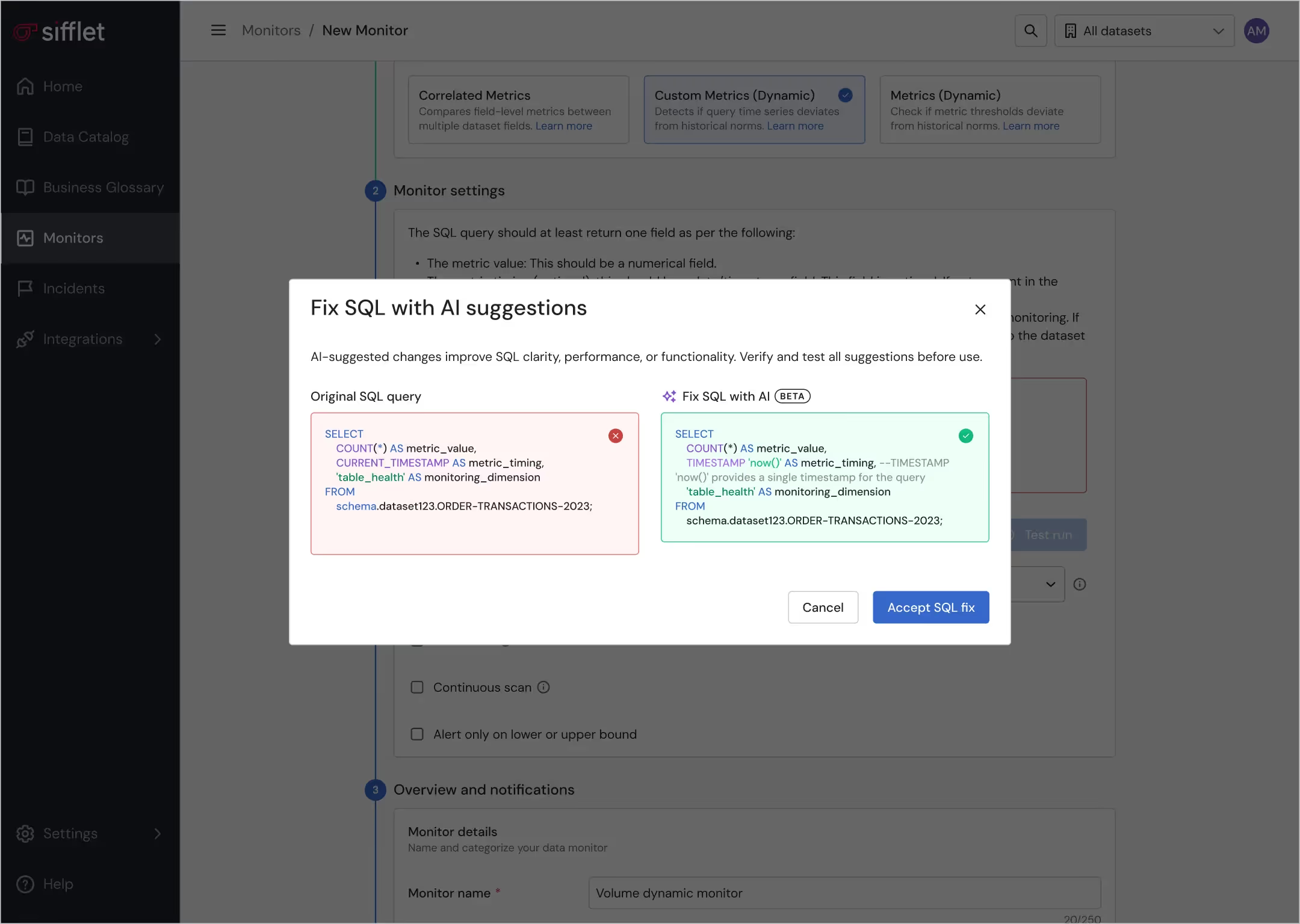

• Automated cross-environment comparison checks using custom SQL monitors, dynamic tests, and Sifflet’s failing-rows view.

• Adaptive anomaly detection with seasonal awareness to catch regressions introduced by new pipelines or refactored logic.

• Incident-centric workflow to consolidate related alerts, generate AI-driven root cause analysis, and route to the right domain team.

• Field-level lineage to understand the blast radius of every upstream change as migration waves progress.

Outcome: Fast detection of mismatches, broken joins, missing data, or schema drift without manual spot-checking.
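A cross-environment comparison can be pictured as a keyed diff between the source table and its migrated copy. The sketch below is a simplified illustration under stated assumptions, not Sifflet's monitor syntax: two in-memory SQLite databases stand in for the on-prem system and Snowflake, and `fetch_rows`, `compare_tables`, and the `orders` table are hypothetical names chosen for the example.

```python
import sqlite3


def fetch_rows(conn, table, key_col):
    """Load a table into a dict keyed by the given key column."""
    cur = conn.execute(f"SELECT * FROM {table}")
    cols = [d[0] for d in cur.description]
    key_idx = cols.index(key_col)
    return {row[key_idx]: row for row in cur}


def compare_tables(source, target, table, key_col):
    """Report keys that are missing, unexpected, or different in the target."""
    src = fetch_rows(source, table, key_col)
    tgt = fetch_rows(target, table, key_col)
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(
            k for k in src.keys() & tgt.keys() if src[k] != tgt[k]
        ),
    }


def make_db(rows):
    """Build a throwaway database holding a sample orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


# Stand-ins for the on-prem source and the migrated target.
source = make_db([(1, 10.0), (2, 20.0), (3, 30.0)])
target = make_db([(1, 10.0), (2, 25.0), (4, 40.0)])

report = compare_tables(source, target, "orders", "id")
print(report)
```

Loading whole tables into memory only suits small samples. For large tables, the same idea is usually applied to per-partition row counts or aggregates first, and row-level diffs are pulled only for the partitions that disagree.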

Post-Migration: Stabilise and Scale

Ensure production-grade reliability in Snowflake after cutover.

What Sifflet enables

• Auto-coverage and Monitor Recommendations (Sentinel) to close blind spots and automatically instrument new Snowflake tables.

• BI-embedded notifications (Power BI, Tableau, Looker) to alert business teams when downstream metrics change.

• Data Product views and SLAs to formalise trust in the new ecosystem and expose quality metrics to stakeholders.

• Cost-efficient observability with workload tagging and percent compute overhead to keep Snowflake spend predictable.

Outcome: A stable, trusted Snowflake environment with observability built in, not bolted on.
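One common form of SLA is a freshness target: each table must have been loaded within an agreed window. The sketch below shows how such a check could be expressed. It is an assumption-laden illustration rather than a description of how Sifflet evaluates SLAs: the `freshness_breaches` function, the table names, and the hard-coded timestamps are hypothetical, and in practice the last-load times would come from warehouse metadata.

```python
from datetime import datetime, timedelta, timezone


def freshness_breaches(last_loaded, max_age, now):
    """Return (table, age) pairs for tables older than their allowed age."""
    breaches = []
    for table, loaded_at in last_loaded.items():
        age = now - loaded_at
        if age > max_age[table]:
            breaches.append((table, age))
    return sorted(breaches)


# Fixed "now" so the example is reproducible.
now = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)

# Hypothetical last-load timestamps per table.
last_loaded = {
    "sales.orders": datetime(2024, 1, 10, 11, 30, tzinfo=timezone.utc),
    "finance.revenue": datetime(2024, 1, 9, 6, 0, tzinfo=timezone.utc),
}

# Hypothetical freshness targets agreed with each domain owner.
max_age = {
    "sales.orders": timedelta(hours=1),
    "finance.revenue": timedelta(hours=24),
}

breaches = freshness_breaches(last_loaded, max_age, now)
for table, age in breaches:
    print(f"{table} is stale: last load was {age} ago")
```

Keeping the targets in a per-table mapping lets each domain set its own threshold instead of sharing one global limit.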
