Cost-efficient data pipelines

Pinpoint cost inefficiencies and anomalies thanks to full-stack data observability.

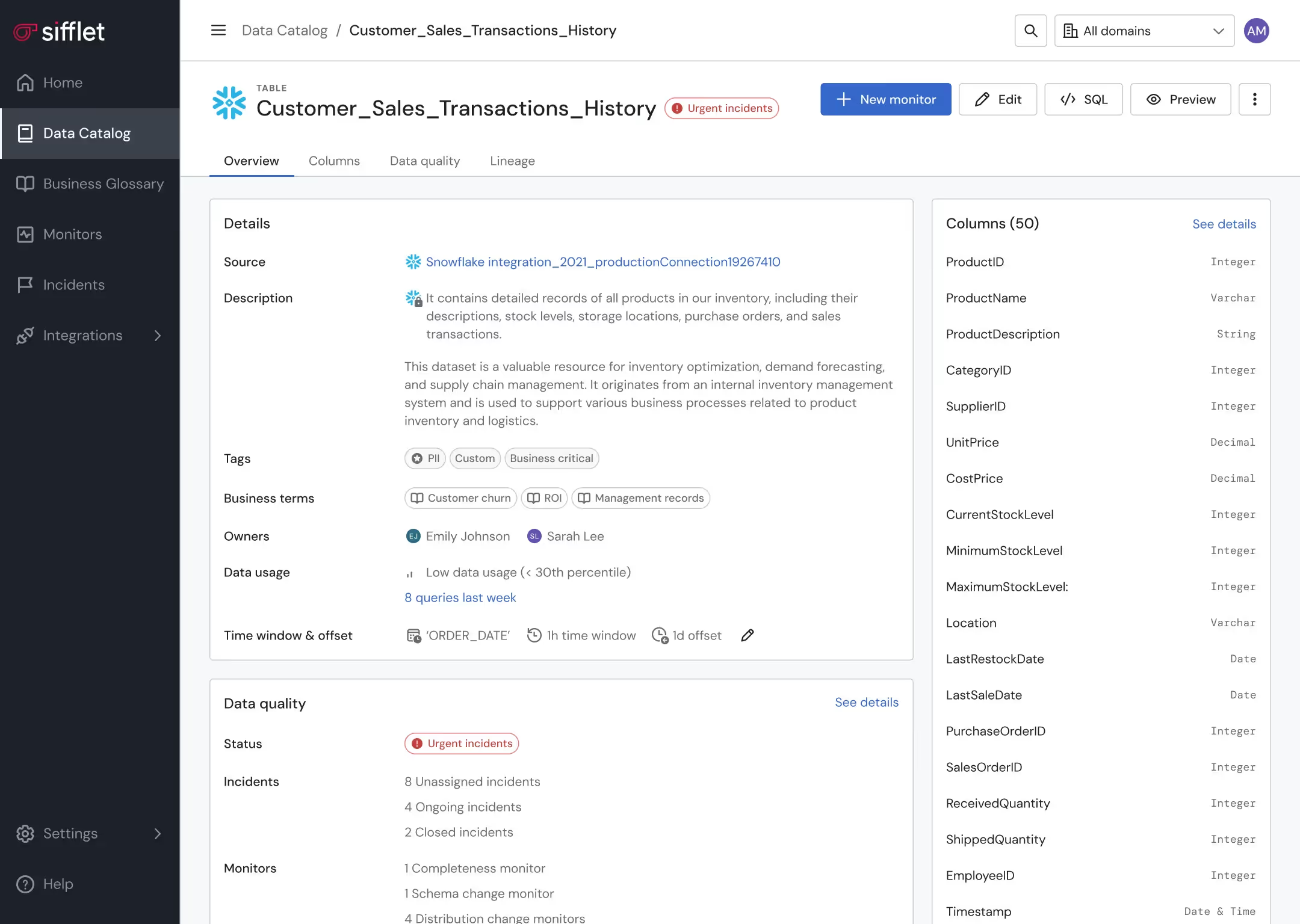

Data asset optimization

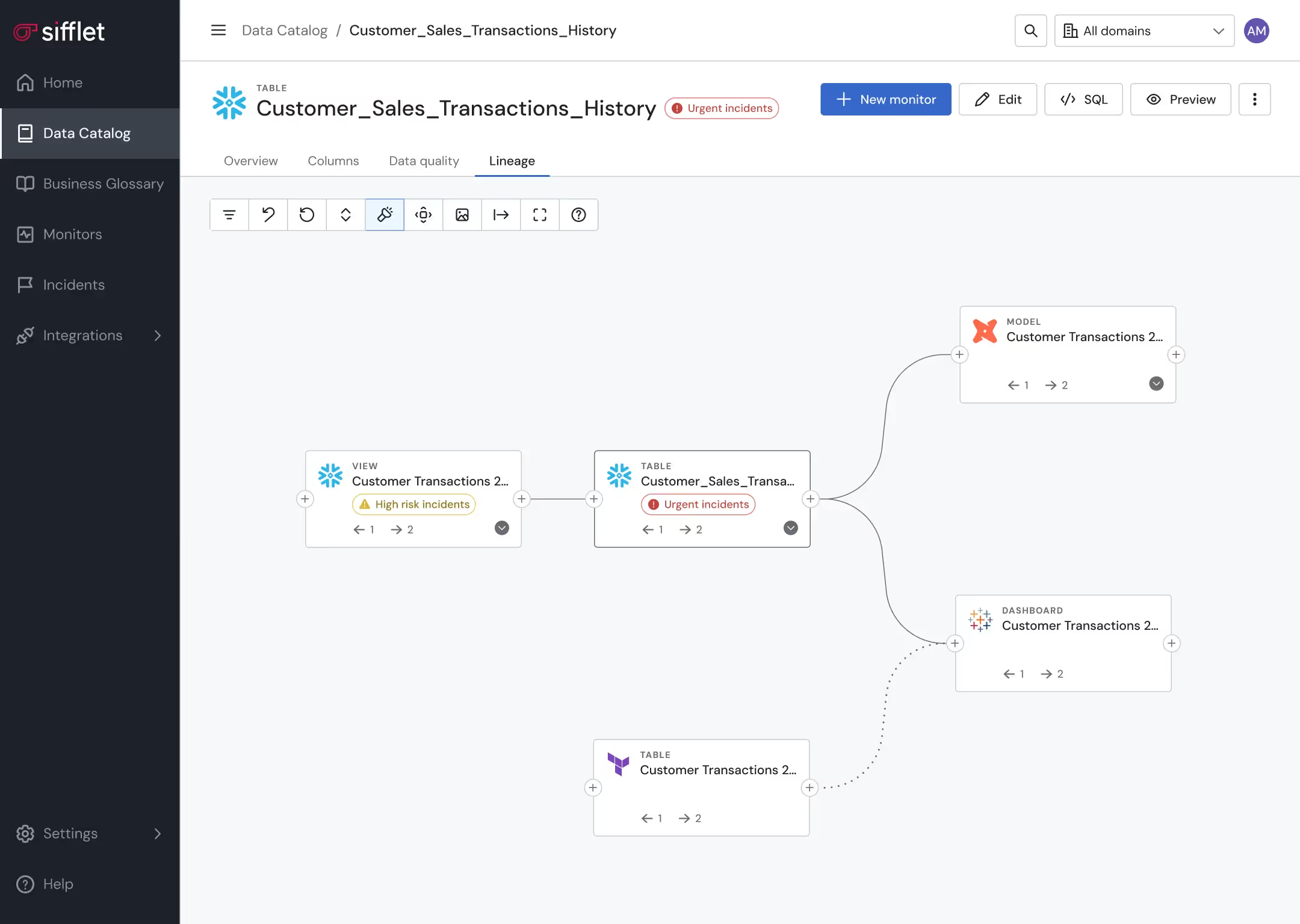

- Leverage lineage and Data Catalog to pinpoint underutilized assets

- Get alerted on unexpected behaviors in data consumption patterns

Proactive data pipeline management

Stop pipelines from running as soon as a data quality anomaly is detected

Still have a question in mind?

Contact Us

Frequently asked questions

What kind of usage insights can I get from Sifflet to optimize my data resources?

Sifflet helps you identify underused or orphaned data assets through lineage and usage metadata. By analyzing this data, you can make informed decisions about deprecating unused tables or enhancing monitoring for critical pipelines. It's a smart way to improve pipeline resilience and reduce unnecessary costs in your data ecosystem.
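As a rough illustration of the idea, and not a depiction of Sifflet's actual API, the sketch below combines a lineage map with last-query dates to flag tables that have no downstream consumers and have not been queried recently. The table names, the `find_underused` helper, and the 90-day threshold are all hypothetical.

```python
from datetime import date, timedelta

# Hypothetical lineage: each table maps to the tables that read from it.
lineage = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["analytics.revenue"],
    "analytics.revenue": [],
    "tmp.orders_backup": [],
}

# Hypothetical usage metadata: the last date each table was queried.
last_queried = {
    "raw.orders": date(2024, 6, 29),
    "staging.orders": date(2024, 6, 29),
    "analytics.revenue": date(2024, 6, 28),
    "tmp.orders_backup": date(2023, 11, 2),
}


def find_underused(lineage, last_queried, today, max_idle_days=90):
    """Return tables with no downstream consumers and no recent queries."""
    cutoff = today - timedelta(days=max_idle_days)
    underused = []
    for table, consumers in lineage.items():
        last = last_queried.get(table)
        # A table never queried, or not queried since the cutoff, is idle.
        idle = last is None or last < cutoff
        if not consumers and idle:
            underused.append(table)
    return sorted(underused)


candidates = find_underused(lineage, last_queried, today=date(2024, 6, 30))
print(candidates)
```

Tables flagged this way are only candidates for deprecation; a table with no lineage edges may still be read by a tool that the lineage map does not cover.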

Is data governance more about culture or tools?

It's a mix of both, but culture plays a big role. As Dan Power puts it, "culture eats strategy for breakfast." Even the best observability tools won't succeed without enterprise-wide data literacy and buy-in. That's why training, user-friendly platforms, and fostering collaboration are just as important as the technology stack you choose.

What are some best practices for ensuring data quality during transformation?

To ensure high data quality during transformation, start with strong data profiling and cleaning steps, then use mapping and validation rules to align with business logic. Incorporating data lineage tracking and anomaly detection also helps maintain integrity. Observability tools like Sifflet make it easier to enforce these practices and continuously monitor for data drift or schema changes that could affect your pipeline.
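To make the validation step concrete, here is a minimal sketch of rule-based checks applied to rows during a transformation. It is a generic illustration, not Sifflet functionality; the rule names, the sample rows, and the `validate_rows` helper are assumptions made for the example.

```python
def validate_rows(rows, rules):
    """Split rows into valid rows and (row, failed_rule_names) pairs."""
    valid, rejected = [], []
    for row in rows:
        failed = [name for name, check in rules.items() if not check(row)]
        if failed:
            rejected.append((row, failed))
        else:
            valid.append(row)
    return valid, rejected


# Hypothetical business rules, each expressed as a named predicate on a row.
rules = {
    "amount_is_positive": lambda r: r.get("amount") is not None and r["amount"] > 0,
    "currency_is_known": lambda r: r.get("currency") in {"EUR", "USD"},
}

rows = [
    {"order_id": 1, "amount": 25.0, "currency": "EUR"},
    {"order_id": 2, "amount": -3.0, "currency": "USD"},
    {"order_id": 3, "amount": 10.0, "currency": "XXX"},
]

valid, rejected = validate_rows(rows, rules)
for row, failed in rejected:
    print(row["order_id"], failed)
```

Keeping the failed rule names alongside each rejected row makes it easier to see which piece of business logic a bad record violated.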

Can I define data quality monitors as code using Sifflet?

Absolutely! With Sifflet's Data-Quality-as-Code (DQaC) v2 framework, you can define and manage thousands of monitors in YAML right from your IDE. This Everything-as-Code approach boosts automation and makes data quality monitoring scalable and developer-friendly.

Why does AI often fail even when the models are technically sound?

Great question! AI doesn't usually fail because of bad models, but because of unreliable data. Without strong data observability in place, it's hard to detect data issues like schema changes, stale tables, or broken pipelines. These problems undermine trust, and without trust in your data, even the best models can't deliver value.

Is Sifflet suitable for large, distributed data environments?

Absolutely! Sifflet was built with scalability in mind. Whether you're working with batch data observability or streaming data monitoring, our platform supports distributed systems observability and is designed to grow with multi-team, multi-region organizations.

What does Sifflet plan to do with the new $18M in funding?

We're excited to use this funding to accelerate product innovation, expand our North American presence, and grow our team. Our focus will be on enhancing AI-powered capabilities, improving data pipeline monitoring, and helping customers maintain data reliability at scale.

Why is table-level lineage important for data quality monitoring and governance?

Table-level lineage helps you understand how data flows through your systems, which is essential for data quality monitoring and data governance. It supports impact analysis, pipeline debugging, and compliance by showing how changes in upstream tables affect downstream assets.
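Impact analysis over table-level lineage can be thought of as a graph traversal. The sketch below is a simplified illustration under assumed data, not Sifflet's implementation: the `lineage` map and the `downstream_assets` helper are hypothetical, and the traversal is a plain breadth-first search.

```python
from collections import deque


def downstream_assets(lineage, changed_table):
    """Return every asset reachable downstream of changed_table."""
    seen = set()
    queue = deque([changed_table])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:  # guard against revisiting shared or cyclic nodes
                seen.add(child)
                queue.append(child)
    return sorted(seen)


# Hypothetical lineage: each table maps to the assets that read from it.
lineage = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["analytics.revenue", "analytics.churn"],
    "analytics.revenue": ["dashboards.finance"],
}

impacted = downstream_assets(lineage, "staging.orders")
print(impacted)
```

Running the same traversal on a reversed map (child to parents) gives the upstream view, which is the direction typically followed when debugging a broken pipeline.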
