Coverage without compromise.

Grow monitoring coverage intelligently as your stack scales, and do more with fewer resources thanks to tooling that reduces maintenance burden, improves signal-to-noise, and helps you understand impact across interconnected systems.

Don’t Let Scale Stop You

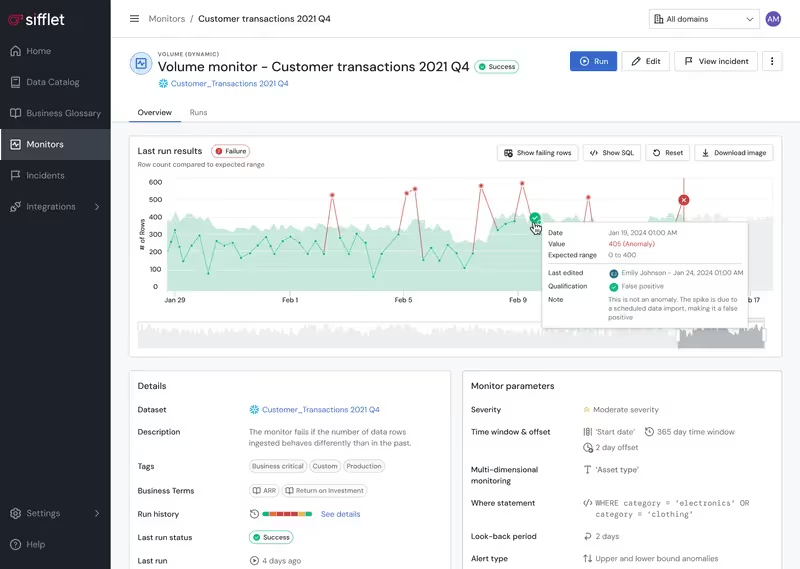

As your stack and data assets scale, so does the number of monitors. Keeping rules up to date becomes a full-time job, and tribal knowledge about monitors gets scattered, so teams struggle to sunset obsolete monitors while adding new ones. Sifflet puts an end to that.

- Optimize monitoring coverage and minimize noise levels with AI-powered suggestions and supervision that adapt dynamically

- Implement programmatic monitoring setup and maintenance with Data Quality as Code (DQaC)

- Automate monitor creation and updates based on data changes

- Reduce maintenance overhead with centralized monitor management

Get Clear and Consistent

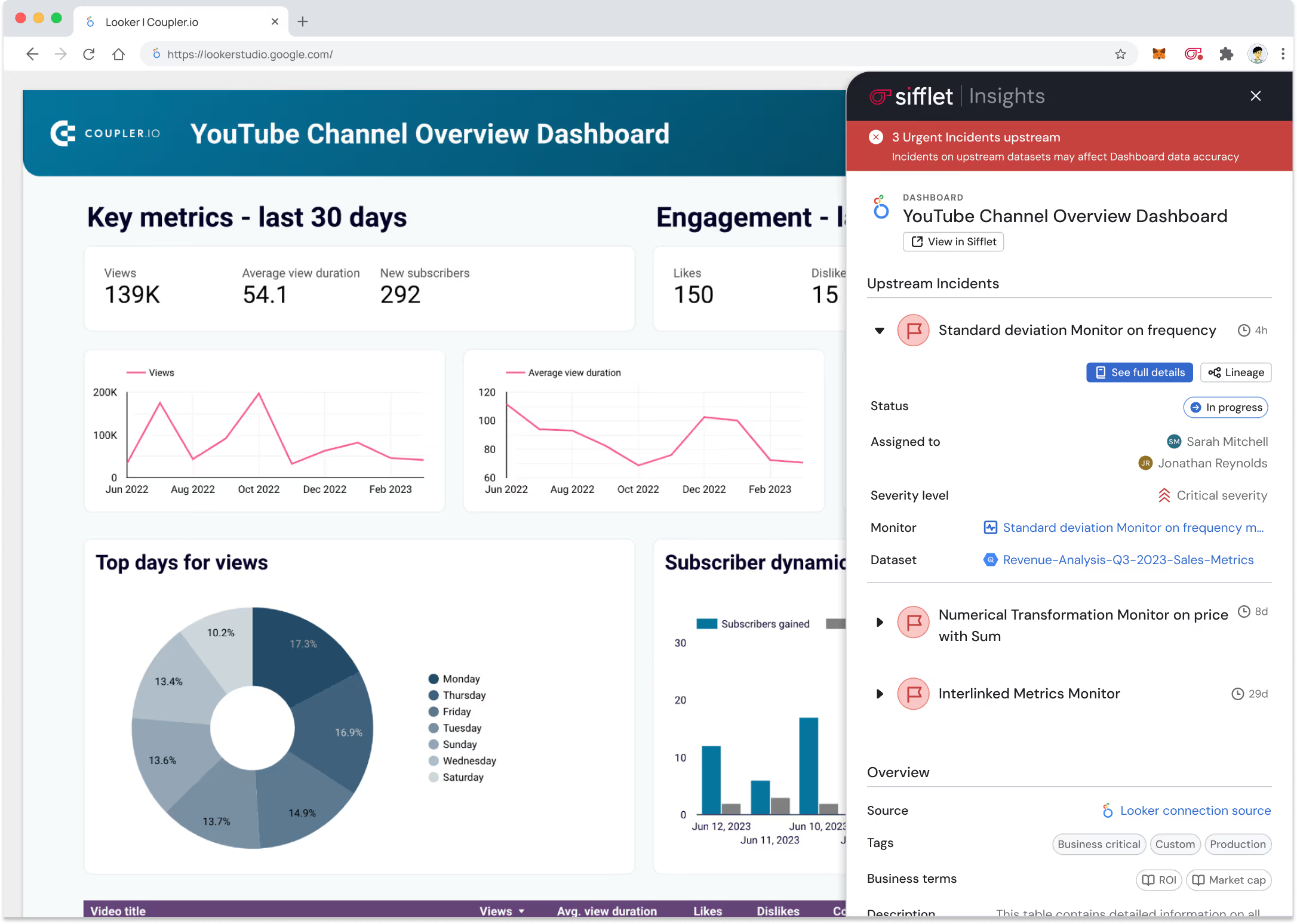

Maintaining consistent monitoring practices across tools, platforms, and the internal teams that own different parts of the stack isn’t easy. Sifflet makes it a breeze.

- Set up consistent alerting and response workflows

- Benefit from unified monitoring across your platforms and tools

- Use automated dependency mapping to reveal system relationships and gain end-to-end visibility across the entire data pipeline

Still have a question in mind?

Contact Us

Frequently asked questions

What is Flow Stopper and how does it help with data pipeline monitoring?

Flow Stopper is a powerful feature in Sifflet's observability platform that allows you to pause vulnerable pipelines at the orchestration layer before issues reach production. It helps with proactive data pipeline monitoring by catching anomalies early and preventing downstream damage to your data systems.

How does the checklist help reduce alert fatigue?

The checklist emphasizes the need for smart alerting, such as dynamic thresholding and alert correlation, rather than flooding your team with notifications. This focus helps reduce alert fatigue and ensures your team is notified only when it really matters.
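To make dynamic thresholding concrete, here is a minimal, generic Python sketch. It is not Sifflet's implementation; the rolling `window` size and the `k` multiplier are illustrative assumptions. Instead of a fixed limit, each new value is compared with a band around the mean of recent values, so the threshold moves as the data moves.

```python
from collections import deque
from statistics import mean, stdev


def dynamic_threshold_alerts(values, window=7, k=3.0):
    """Return indices of values outside a rolling mean +/- k * stdev band.

    Generic illustration of dynamic thresholding; `window` and `k` are
    illustrative defaults, not settings from any particular product.
    """
    history = deque(maxlen=window)  # only the most recent `window` values
    alerts = []
    for i, value in enumerate(values):
        # Evaluate only once there is enough history to estimate spread.
        if len(history) >= 2:
            center = mean(history)
            spread = stdev(history)
            if abs(value - center) > k * spread:
                alerts.append(i)
        # Every value enters the window, so the band keeps adapting.
        history.append(value)
    return alerts
```

For example, on daily row counts of `[1000, 1020, 980, 1010, 995, 1005, 40, 1000]` the function flags only index 6, the sudden drop to 40, while the normal day-to-day jitter stays silent.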

How does Sifflet make data observability more accessible to BI users?

Great question! At Sifflet, we're committed to making data observability insights available right where you work. That’s why we’ve expanded beyond our Chrome extension to integrate directly with popular data catalogs like Atlan, Alation, Castor, and Data Galaxy. This means BI users can access real-time metrics and data quality insights without ever leaving their workflow.

Who should use the data observability checklist?

This checklist is for anyone who relies on trustworthy data, from CDOs and analysts to DataOps teams and engineers. Whether you're focused on data governance, anomaly detection, or building resilient pipelines, the checklist gives you a clear path to choosing the right observability tools.

How does Shippeo’s use of data pipeline monitoring enhance internal decision-making?

By enriching and aggregating operational data, Shippeo creates a reliable source of truth that supports product and operations teams. Their pipeline health dashboards and observability tools ensure that internal stakeholders can trust the data driving their decisions.

What kind of monitoring capabilities does Sifflet offer out of the box?

Sifflet comes with a powerful library of pre-built monitors for data profiling, data freshness checks, metrics health, and more. These templates are easily customizable, supporting both batch data observability and streaming data monitoring, so you can tailor them to your specific data pipelines.
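As an illustration of what a freshness check does, here is a minimal, generic Python sketch, not one of Sifflet's templates. It assumes you already have each table's latest load time as a timezone-aware `datetime` (how you fetch it, for example from warehouse metadata, is left open), and the table names in the example are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def stale_tables(last_updated_by_table, max_age, now=None):
    """Return the sorted names of tables last updated more than `max_age` ago.

    `last_updated_by_table` maps a table name to the timezone-aware
    datetime of its most recent load.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return sorted(
        name
        for name, last_updated in last_updated_by_table.items()
        if now - last_updated > max_age
    )


# Hypothetical example: "customers" has not loaded in 30 hours.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
loads = {
    "orders": now - timedelta(hours=2),
    "customers": now - timedelta(hours=30),
}
print(stale_tables(loads, timedelta(hours=24), now=now))  # ['customers']
```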

What role does data pipeline monitoring play in Dailymotion’s delivery optimization?

By rebuilding their pipelines with strong data pipeline monitoring, Dailymotion reduced storage costs, improved performance, and ensured consistent access to delivery data. This helped eliminate data sprawl and created a single source of truth for operational teams.

What should I look for in a data quality monitoring solution?

You’ll want a solution that goes beyond basic checks like null values and schema validation. The best data quality monitoring tools use intelligent anomaly detection, dynamic thresholding, and auto-generated rules based on data profiling. They adapt as your data evolves and scale effortlessly across thousands of tables. This way, your team can confidently trust the data without spending hours writing manual validation rules.
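To show what "auto-generated rules based on data profiling" can mean in practice, here is a minimal, generic Python sketch; it is not how Sifflet generates rules. It profiles the history of one numeric column, derives a maximum null rate and an accepted value range (the `null_slack` and `range_margin` tolerances are illustrative assumptions), and then checks a new batch against that rule.

```python
from dataclasses import dataclass


@dataclass
class ColumnRule:
    max_null_rate: float
    min_value: float
    max_value: float


def profile_rule(values, null_slack=0.05, range_margin=0.1):
    """Derive a simple rule from historical values of one numeric column."""
    non_null = [v for v in values if v is not None]
    if not non_null:
        raise ValueError("cannot profile a column with no non-null values")
    null_rate = 1 - len(non_null) / len(values)
    low, high = min(non_null), max(non_null)
    margin = (high - low) * range_margin  # widen the observed range slightly
    return ColumnRule(
        max_null_rate=min(1.0, null_rate + null_slack),
        min_value=low - margin,
        max_value=high + margin,
    )


def check(values, rule):
    """Return human-readable rule violations for a new batch of values."""
    violations = []
    non_null = [v for v in values if v is not None]
    null_rate = 1 - len(non_null) / len(values) if values else 0.0
    if null_rate > rule.max_null_rate:
        violations.append(
            f"null rate {null_rate:.0%} exceeds {rule.max_null_rate:.0%}"
        )
    out_of_range = [v for v in non_null if not rule.min_value <= v <= rule.max_value]
    if out_of_range:
        violations.append(
            f"{len(out_of_range)} value(s) outside "
            f"[{rule.min_value}, {rule.max_value}]"
        )
    return violations


history = [10, 12, 11, None, 13, 9, 10, 12, 11, 10]
rule = profile_rule(history)
print(check([11, 10, 12, 13, 9], rule))         # []
print(len(check([11, None, None, 50, 10], rule)))  # 2
```

Because the rule is recomputed from the profile rather than written by hand, re-running `profile_rule` on fresh history lets the checks follow the data as it evolves.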
