Redshift

Integrate Sifflet with Redshift to access end-to-end lineage, monitor assets such as Spectrum tables, enrich metadata, and gain insights that strengthen your data observability.

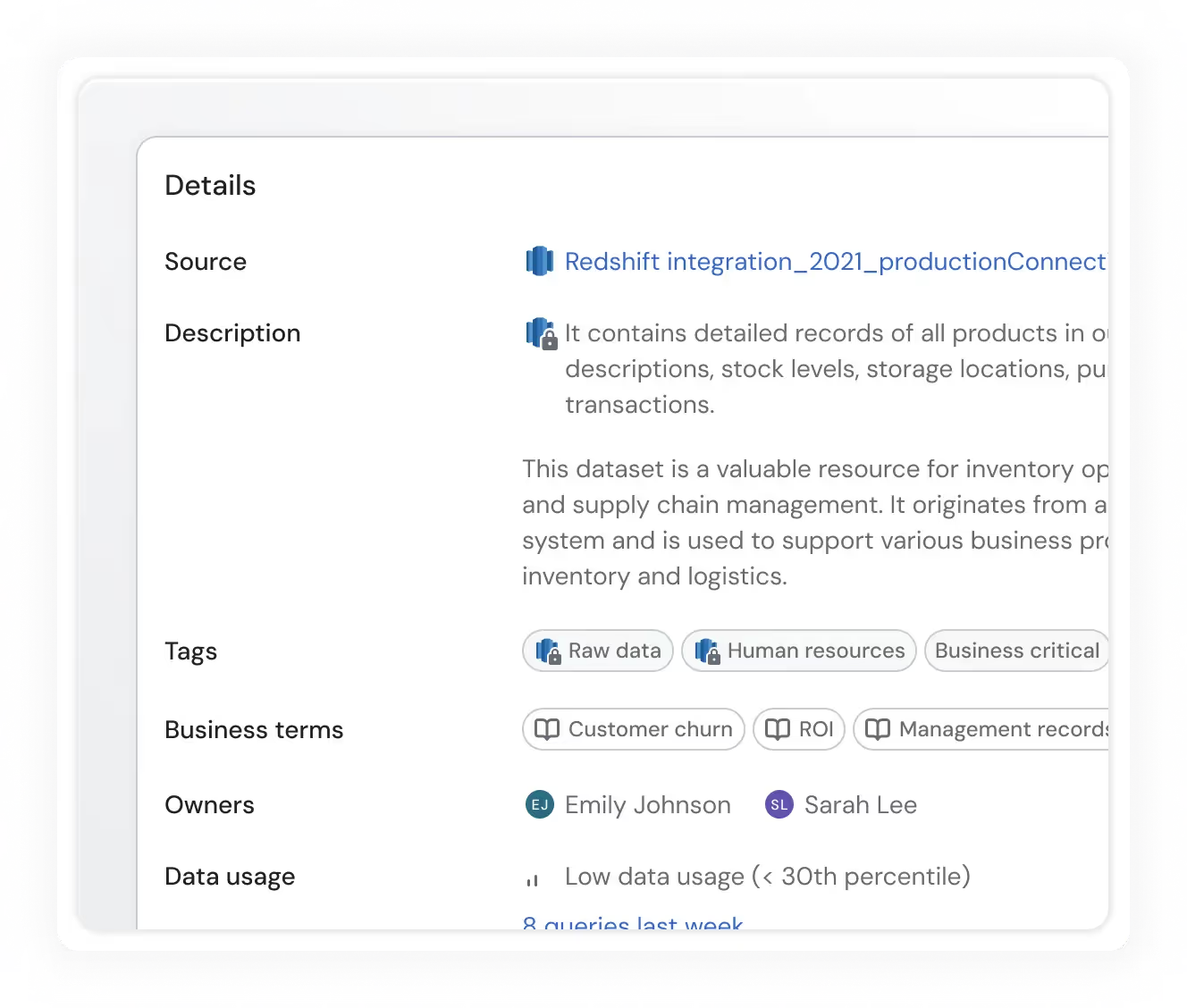

Exhaustive metadata

Sifflet leverages Redshift's internal metadata tables to retrieve information about your assets and enhance it with Sifflet-generated insights.

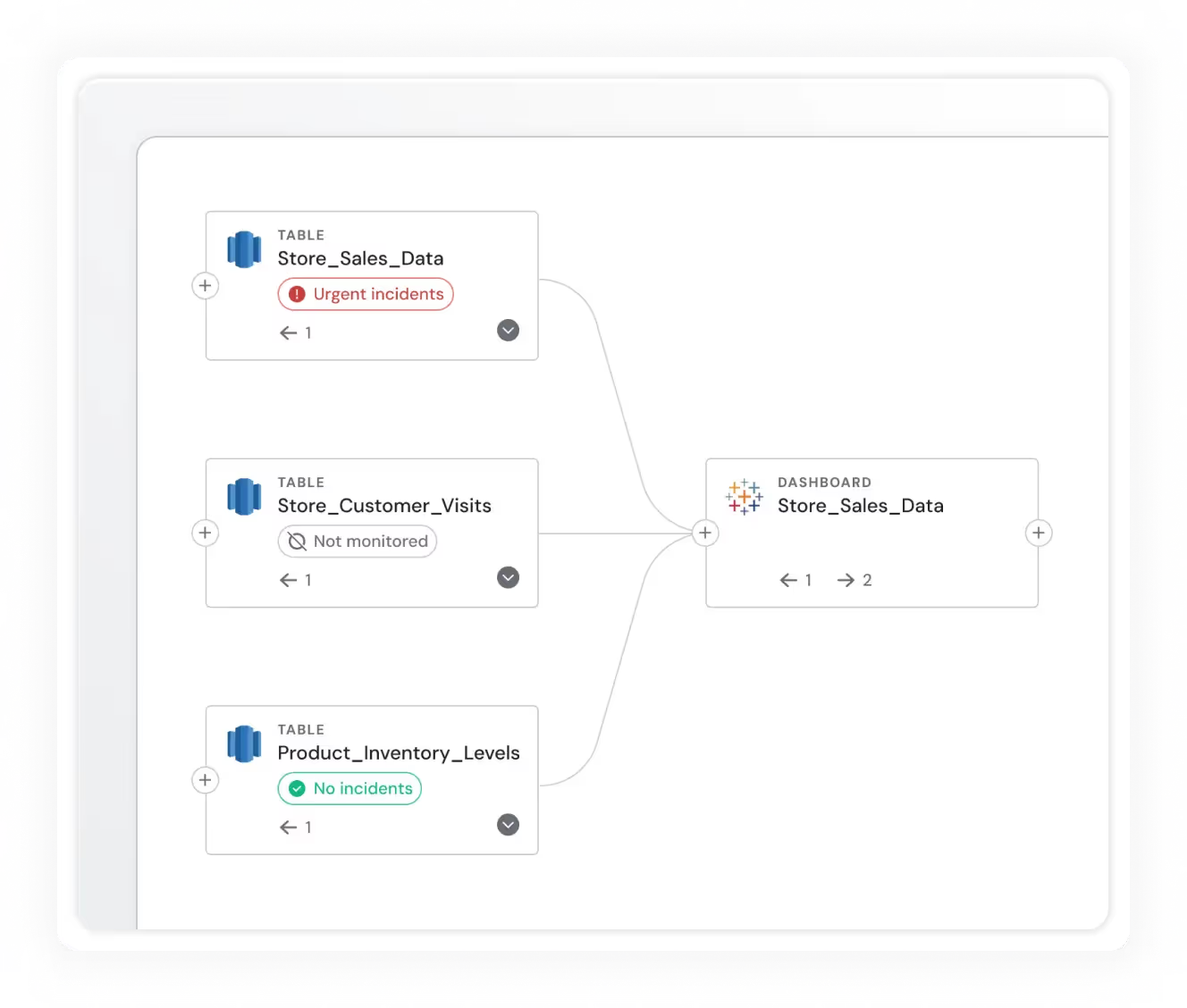

End-to-end lineage

Understand exactly how data flows through your platform with end-to-end lineage for Redshift.

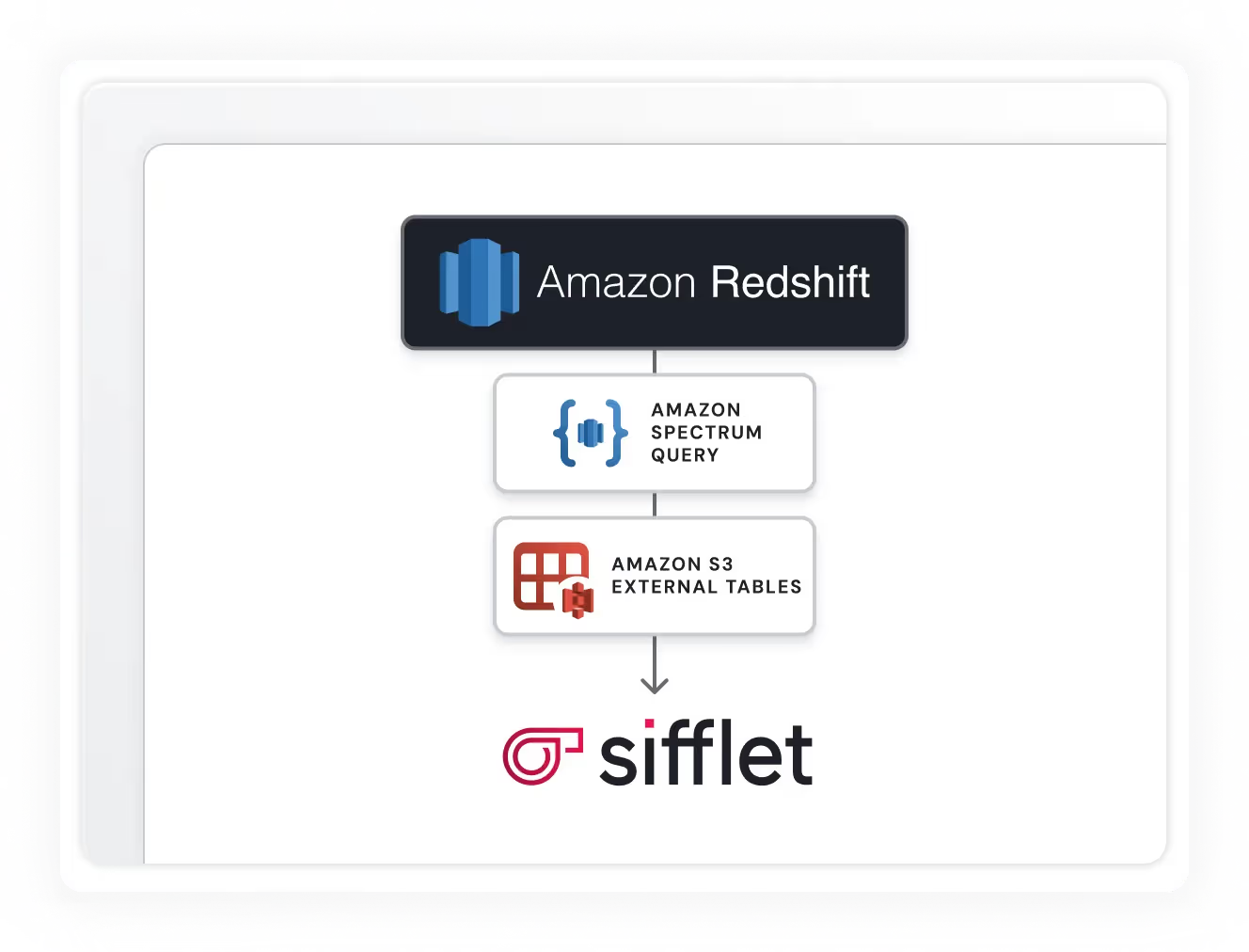

Redshift Spectrum support

Sifflet can monitor external tables through Redshift Spectrum, so you can ensure the quality of data stored in other systems such as Amazon S3.

Frequently asked questions

What role does real-time monitoring play in Sifflet’s platform?

Real-time metrics are essential for proactive data pipeline monitoring. Sifflet’s observability tools provide real-time alerts and anomaly detection, helping teams quickly identify and resolve issues before they impact downstream systems or put SLA compliance at risk.

Can better design really improve data reliability and efficiency?

Absolutely. A well-designed observability platform not only looks good but also enhances user efficiency and reduces errors. By streamlining workflows for tasks like root cause analysis and data drift detection, Sifflet helps teams maintain high data reliability while saving time and reducing cognitive load.

How does Sifflet support diversity and innovation in the data observability space?

Diversity and innovation are core values at Sifflet. We believe that a diverse team brings a wider range of perspectives, which leads to more creative solutions in areas like cloud data observability and predictive analytics monitoring. Our culture encourages experimentation and continuous learning, making it a great place to grow.

Why are data consumers becoming more involved in observability decisions?

We’re seeing a big shift: data consumers, such as analysts and business users, are finally getting a seat at the table. That’s because data observability affects everyone, not just engineers. When trust in data is operationalized, it boosts confidence across the business and turns data teams into value creators.

How does Flow Stopper support root cause analysis and incident prevention?

Flow Stopper enables early anomaly detection and integrates with your orchestrator to halt execution when issues are found. This makes it easier to perform root cause analysis before problems escalate and helps prevent incidents that could affect business-critical dashboards or KPIs.

How can data observability help improve the happiness of my data team?

Great question! A strong data observability platform helps reduce uncertainty in your data pipelines by providing transparency, real-time metrics, and proactive anomaly detection. When your team can trust the data and quickly identify issues, they feel more confident, empowered, and less stressed, which directly boosts team morale and satisfaction.

What practical steps can companies take to build a data-driven culture?

To build a data-driven culture, start by investing in data literacy, aligning goals across teams, and adopting observability tools that support proactive monitoring. Platforms with features like metrics collection, telemetry instrumentation, and real-time alerts can help ensure data reliability and build trust in your analytics.

What role does data lineage tracking play in data observability?

Data lineage tracking is a key part of data observability because it shows where your data comes from and how it is transformed as it moves through your pipelines. With clear lineage, teams can perform faster root cause analysis and collaborate better across business and engineering, which is exactly what platforms like Sifflet enable.