Google BigQuery

Integrate Sifflet with BigQuery to monitor all table types, access field-level lineage, enrich metadata, and gain actionable insights for an optimized data observability strategy.

Metadata-based monitors and optimized queries

Sifflet leverages BigQuery's metadata APIs and relies on optimized queries, ensuring minimal costs and efficient monitor runs.

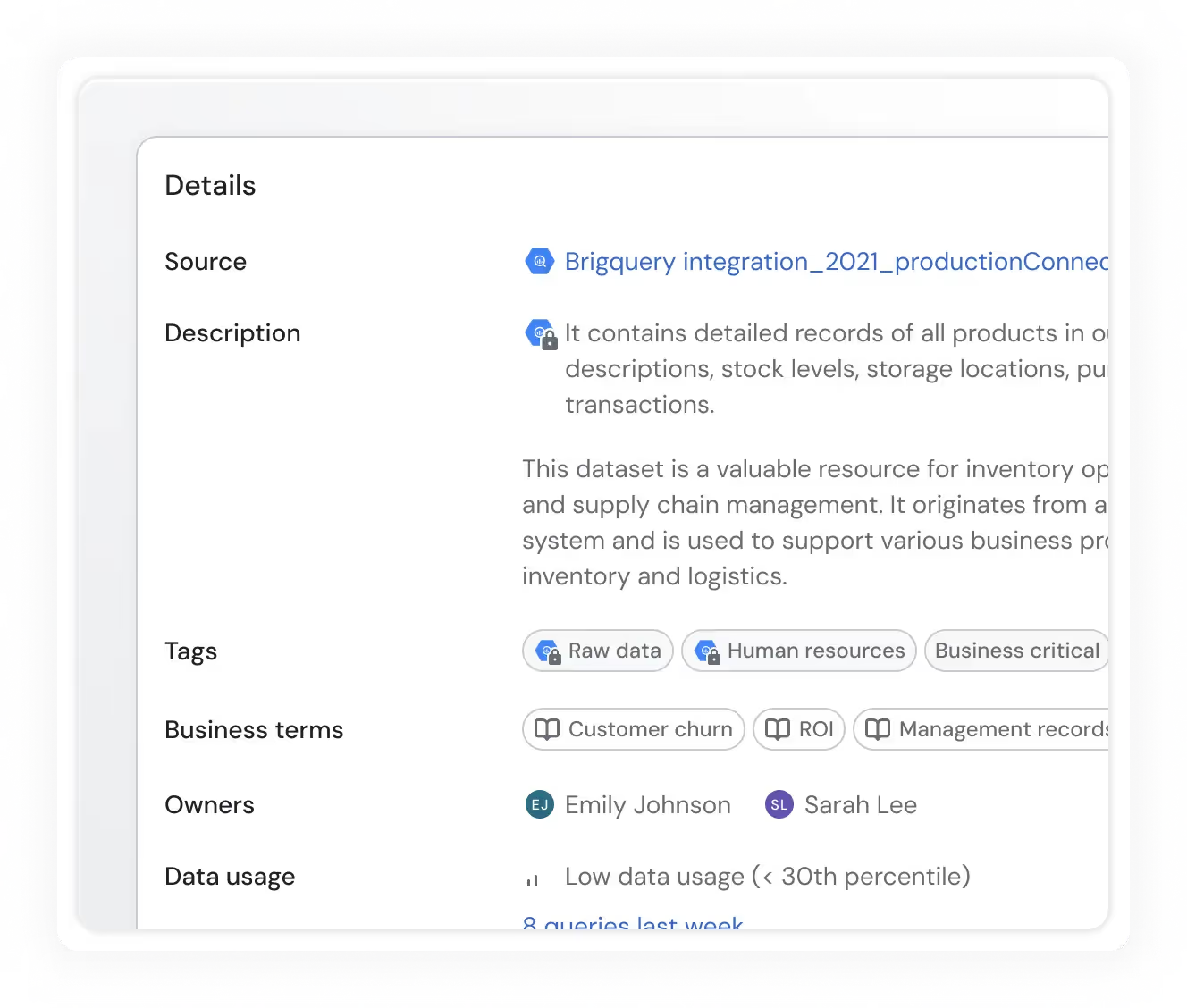
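To make the idea of a metadata-based check concrete, here is a minimal sketch in Python. It is not Sifflet's implementation. It assumes a freshness check that reads BigQuery's `__TABLES__` meta-table (which exposes `row_count`, `size_bytes`, and `last_modified_time` in epoch milliseconds) rather than scanning table rows. The helper names `build_metadata_query` and `is_stale`, and the project and dataset names, are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def build_metadata_query(project: str, dataset: str) -> str:
    """Build SQL that reads table-level metadata instead of scanning table rows.

    Hypothetical helper: the resulting string would be passed to whatever
    BigQuery client you use.
    """
    return (
        "SELECT table_id, row_count, size_bytes, last_modified_time "
        f"FROM `{project}.{dataset}.__TABLES__`"
    )


def is_stale(last_modified_ms: int, max_age: timedelta, now: datetime) -> bool:
    """Return True if the table was last modified longer ago than max_age.

    last_modified_ms is an epoch timestamp in milliseconds, which is how
    __TABLES__ reports last_modified_time.
    """
    last_modified = datetime.fromtimestamp(last_modified_ms / 1000, tz=timezone.utc)
    return now - last_modified > max_age


if __name__ == "__main__":
    # Placeholder project and dataset names, for illustration only.
    print(build_metadata_query("my-project", "analytics"))

    now = datetime(2024, 1, 2, tzinfo=timezone.utc)
    modified_ms = 1704067200000  # 2024-01-01 00:00:00 UTC
    print(is_stale(modified_ms, timedelta(hours=12), now))
```

Because the check only touches metadata, its cost does not grow with the size of the monitored table, which is the general reason metadata-based monitors tend to be inexpensive.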

Usage and BigQuery metadata

Get detailed statistics about the usage of your BigQuery assets, in addition to various metadata (like tags, descriptions, and table sizes) retrieved directly from BigQuery.

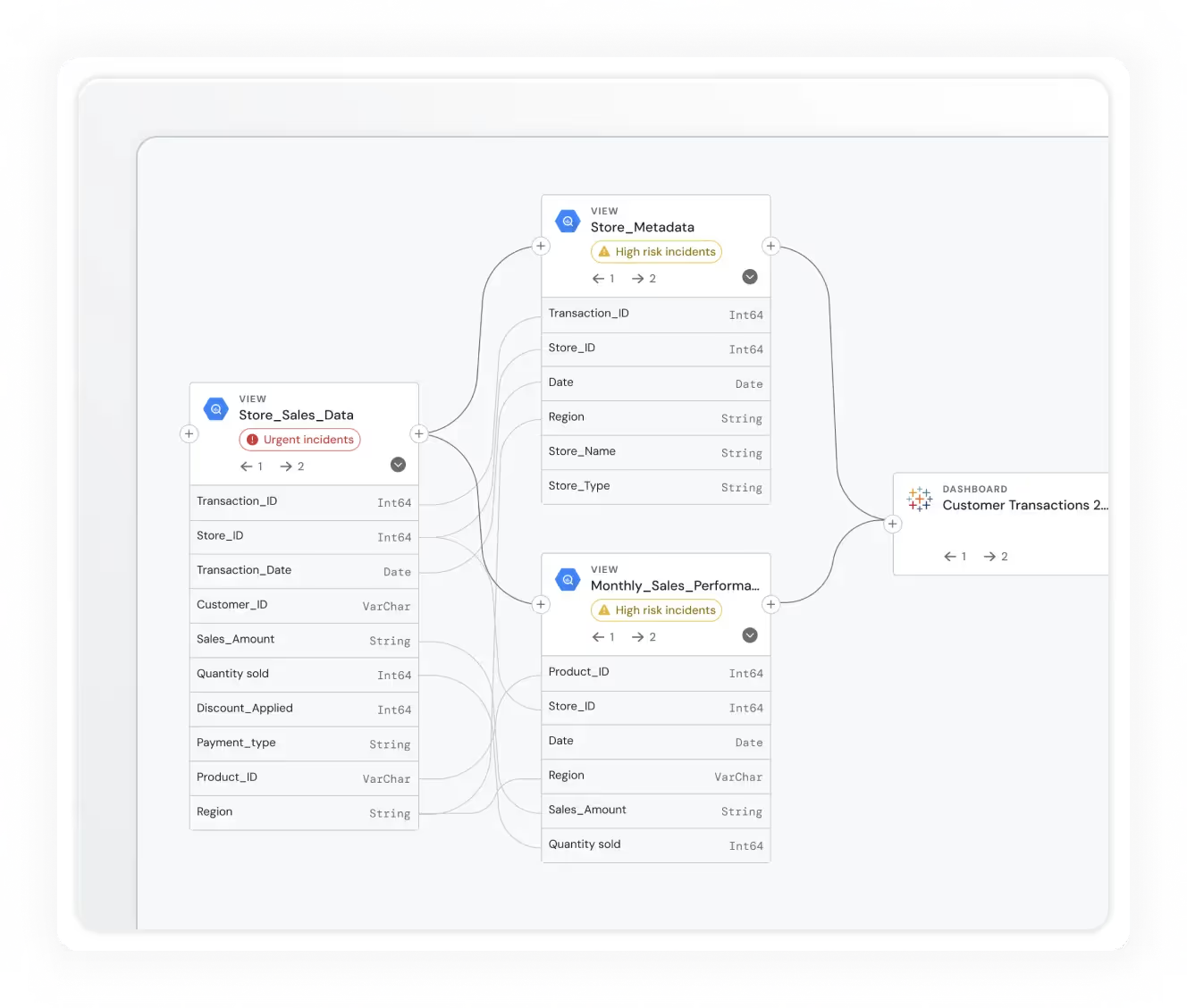

Field-level lineage

Have a complete understanding of how data flows through your platform via field-level end-to-end lineage for BigQuery.

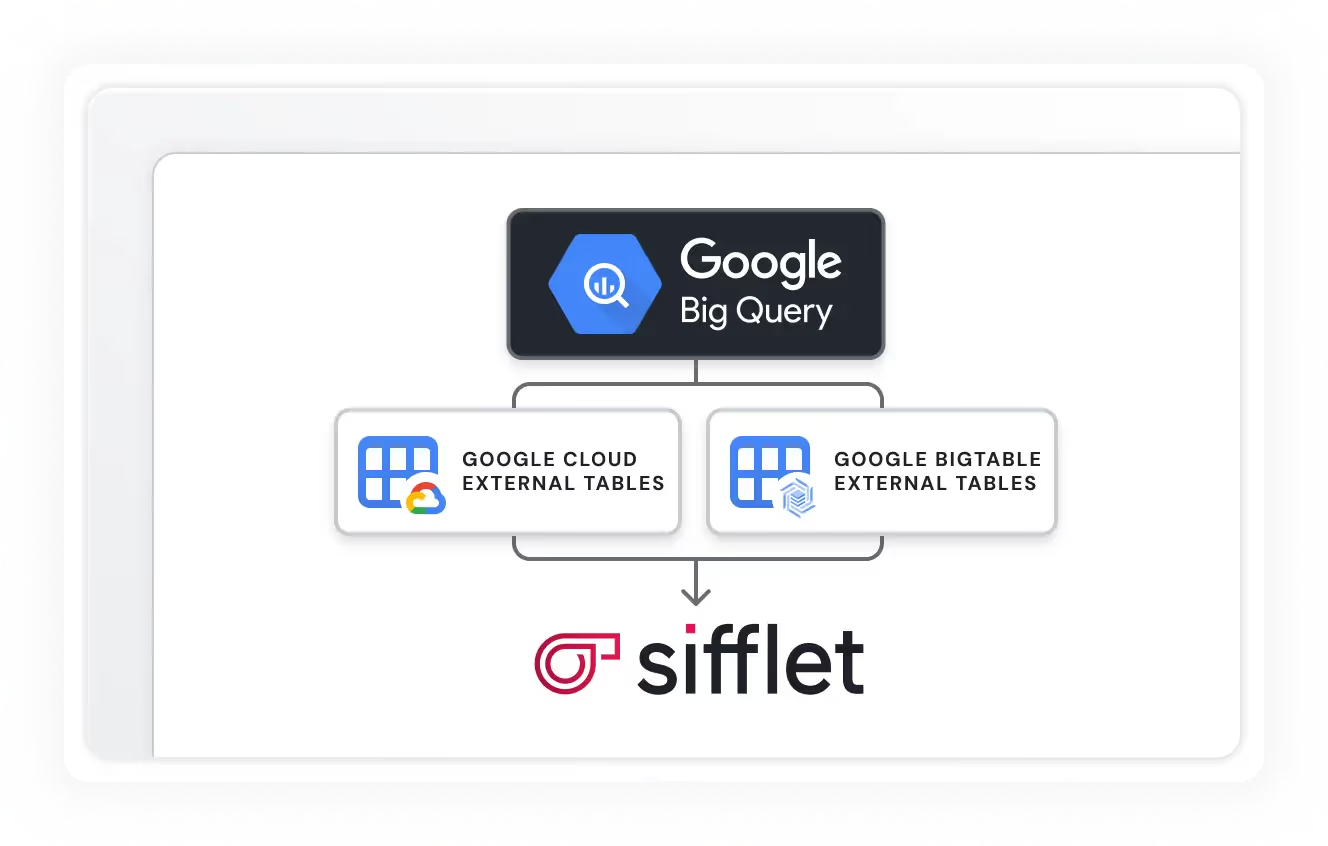

External table support

Sifflet can monitor external BigQuery tables to ensure the quality of data stored in other systems, such as Google Cloud Bigtable and Google Cloud Storage.

Still have a question in mind?

Contact Us

Frequently asked questions

What role does data lineage tracking play in data discovery?

Data lineage tracking is essential for understanding how data flows through your systems. It shows you where data comes from, how it’s transformed, and where it ends up. This is super helpful for root cause analysis and makes data discovery more efficient by giving you context and confidence in the data you're using.

Is this integration helpful for teams focused on data reliability and governance?

Yes, definitely! The Sifflet and BigQuery integration supports strong data governance and boosts data reliability by enabling data profiling, schema monitoring, and automated validation rules. This ensures your data remains trustworthy and compliant.

How does data quality monitoring help improve data reliability?

Data quality monitoring is essential for maintaining trust in your data. A strong observability platform should offer features like anomaly detection, data profiling, and data validation rules. These tools help identify issues early, so you can fix them before they impact downstream analytics. It’s all about making sure your data is accurate, timely, and reliable.

What is Flow Stopper and how does it help with data pipeline monitoring?

Flow Stopper is a powerful feature in Sifflet's observability platform that allows you to pause vulnerable pipelines at the orchestration layer before issues reach production. It helps with proactive data pipeline monitoring by catching anomalies early and preventing downstream damage to your data systems.

How can data observability help with SLA compliance and incident management?

Data observability plays a huge role in SLA compliance by enabling real-time alerts and proactive monitoring of data freshness, completeness, and accuracy. When issues occur, observability tools help teams quickly perform root cause analysis and understand downstream impacts, speeding up incident response and reducing downtime. This makes it easier to meet service level agreements and maintain stakeholder trust.

What role does machine learning play in data quality monitoring at Sifflet?

Machine learning is at the heart of our data quality monitoring efforts. We've developed models that can detect anomalies, data drift, and schema changes across pipelines. This allows teams to proactively address issues before they impact downstream processes or SLA compliance.

How does the new Custom Metadata feature improve data governance?

With Custom Metadata, you can tag any asset, monitor, or domain in Sifflet using flexible key-value pairs. This makes it easier to organize and route data based on your internal logic, whether it's ownership, SLA compliance, or business unit. It's a big step forward for data governance and helps teams surface high-priority monitors more effectively.

What is a 'Trust OS' and how does it relate to data governance?

A Trust OS is an intelligent metadata layer where data contracts are enriched with real-time observability signals. It combines lineage awareness, semantic context, and predictive validation to ensure data reliability at scale. This approach elevates data governance by embedding trust directly into the technical fabric of your data pipelines, not just documentation.
