5 Principles to Rule Them All

Have you ever suffered from heavy dashboards or timed-out queries? The issue may not come from your visualization tool or your data warehouse but from your SQL query itself.

As a heavy data consumer in my past roles, I spent a significant amount of time querying thousands of terabytes of analytical data to make the right decisions. Complex queries need to be built with a lot of care. And whether interacting with a data warehouse, a data lake, or a streaming service, it comes down to applying the same principles to ensure performance in executions.

Below are some of the tricks I’ve collected over the years and narrowed down to 5 main principles:

1 - The more is NOT the merrier

To avoid mobilizing unnecessary system resources, start by scoping the data you need for your use case, and leverage all the SLQ tricks to get there:

- Always use WHERE statements in your query to filter out irrelevant data

- Leverage partition keys in the WHERE clause in infrastructures where large tables are stored as multiple files and directories

- Avoid SELECT * and specify the column names you need instead

- Use LIMIT to sample query results in the output when possible

2 - Words are free, but it’s how you use them that may cost you

Despite the apparent overlap, every SQL function is designed for a specific need, and using the right one is essential to prevent inefficiencies. Here is a non-exhaustive list of tips where even a small adjustment can have a significant performance impact:

- Don’t replace a WHERE by HAVING unless needed in conjunction with a GROUP BY.

- Don’t deduplicate your data by default, DISTINCT is a very expensive operation.

- Don’t go wild with wildcards and use them at the end of the input (‘xxx%’) to reduce the possible results of the search.

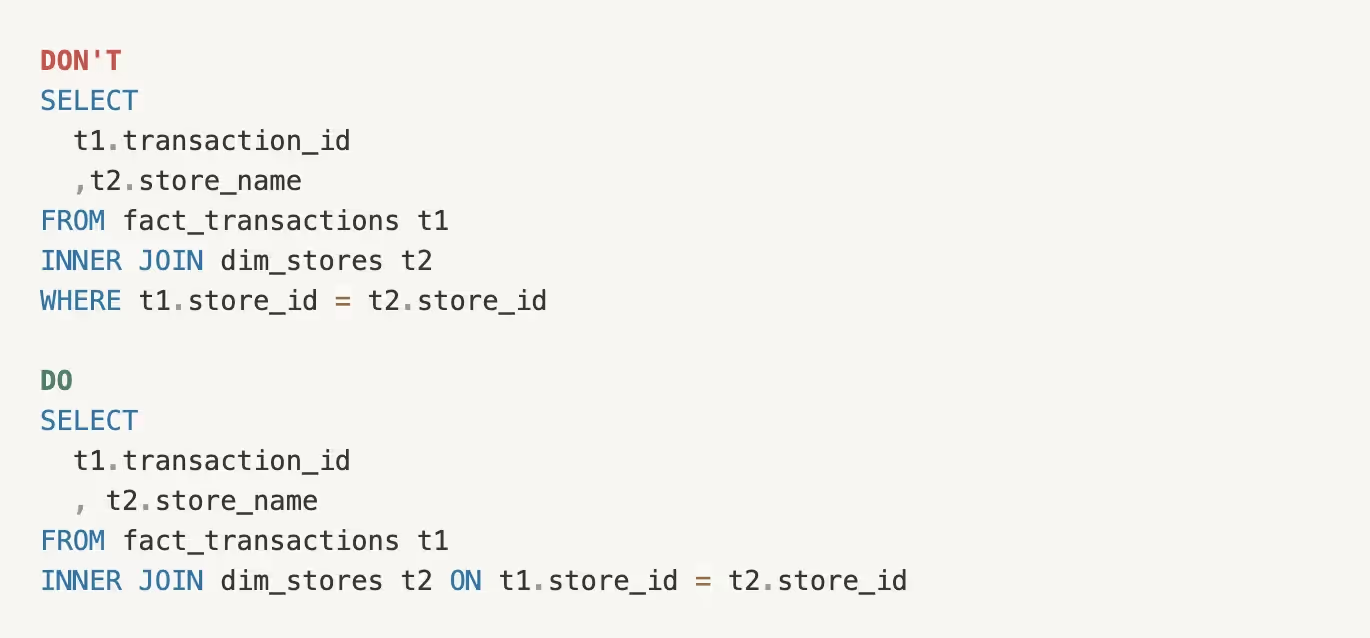

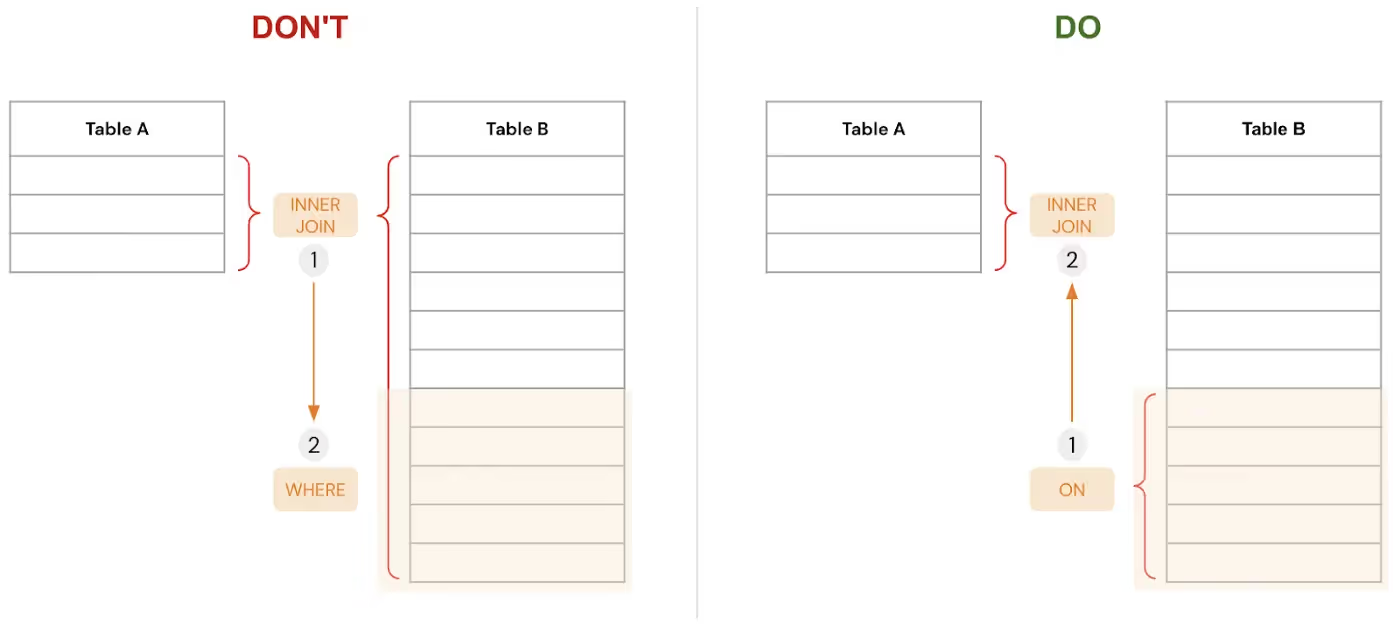

3 - Make your JOIN work with you, not against you

Combining columns from different tables is very recurrent. In some cases, you are joining every row from the first table to every row from the second table, and the query might not even finish. Be wise by:

- Choosing the right joining type for your needs.

- Inserting the joining condition in the ON expression and not in the WHERE conditions to narrow down the rows joined since it is a very expensive operation.

Source: Sifflet

4 - Divide to conquer, aka using Common Tables Expressions (CTE)

Common Table Expressions (CTE) is a temporary table that can be defined once and used many times within the same query. CTEs are very helpful in improving your code readability, reducing its complexity, and optimizing its execution resources.

CTEs can be defined at the beginning of your queries with the instructions WITH table_name AS

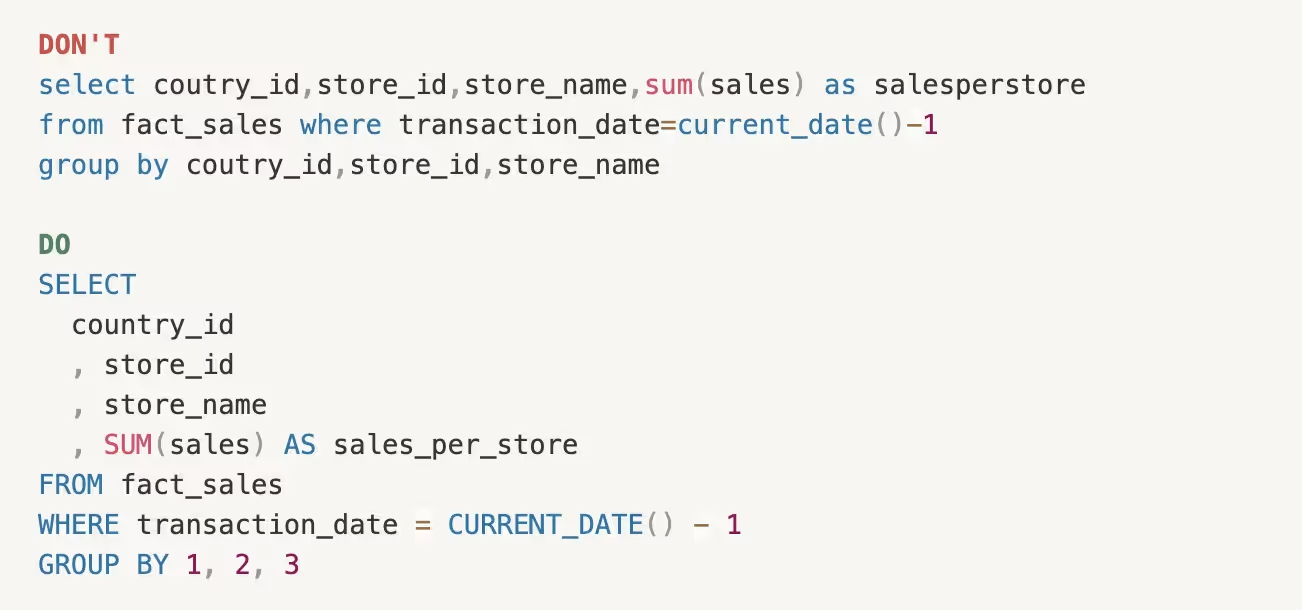

5- Mens Sana in Corpore Sano, aka a healthy mind in a healthy body

Caring about your query format is essential. These simple best practices can make your queries readable, easily debuggable, and shareable:

- Capitalize SQL keyword

- Use snake case for your table and column names and aliases

- Indentation and spaces

With new technologies shaping the modern data stack, data is becoming more and more accessible to users regardless of their level of technical expertise. Democratizing data is great but ensuring the operational efficiency and health of the data platform is a real challenge. Although there is no magic manual or one-size-fits-all approach, implementing some best practices as the ones covered in this article can play an integral role in ensuring the sustainability of your data operations.

Get in touch if you'd like to go in depth on any of the topics. wissem@siffletdata.com; contact@siffletdata.com

-p-500.png)