The challenges of modern data environments

In today’s data-driven world, modern data environments have become indispensable for organizations relying on data to make informed decisions. These environments have grown increasingly complex, demanding robust solutions for data control and management. Managing vast amounts of diverse data sets effectively has become a critical challenge for organizations.

Traditionally, data catalogs have been relied upon as comprehensive repositories, enabling users to discover, understand, and access various data assets within an organization. However, these catalogs have faced scrutiny within the data community due to their resource-intensive nature.

In a thought-provoking blog post by Ananth Packkildurai, he raises concerns about data catalogs, stating,

“Data catalogs are the most expensive data integration systems you never intended to build”.

This statement encapsulates the difficulties and costs associated with implementing and maintaining data catalogs, making it increasingly challenging for teams across organizations to adopt and fully utilize these systems.

Whether you are an experienced data professional, an organizational leader seeking data management solutions, or a curious enthusiast looking to stay ahead of the curve, this blog post will serve as a valuable resource. Let’s explore the specificities of the data catalog and its evolution.

A brief history of the data catalog

Let's begin by understanding what a data catalog is and its purpose. A data catalog is a collection of metadata combined with data management and search tools that help data consumers find the data they need.

Essentially, data catalogs serve as an inventory of an organization's available data, and they have become a standard for metadata management.

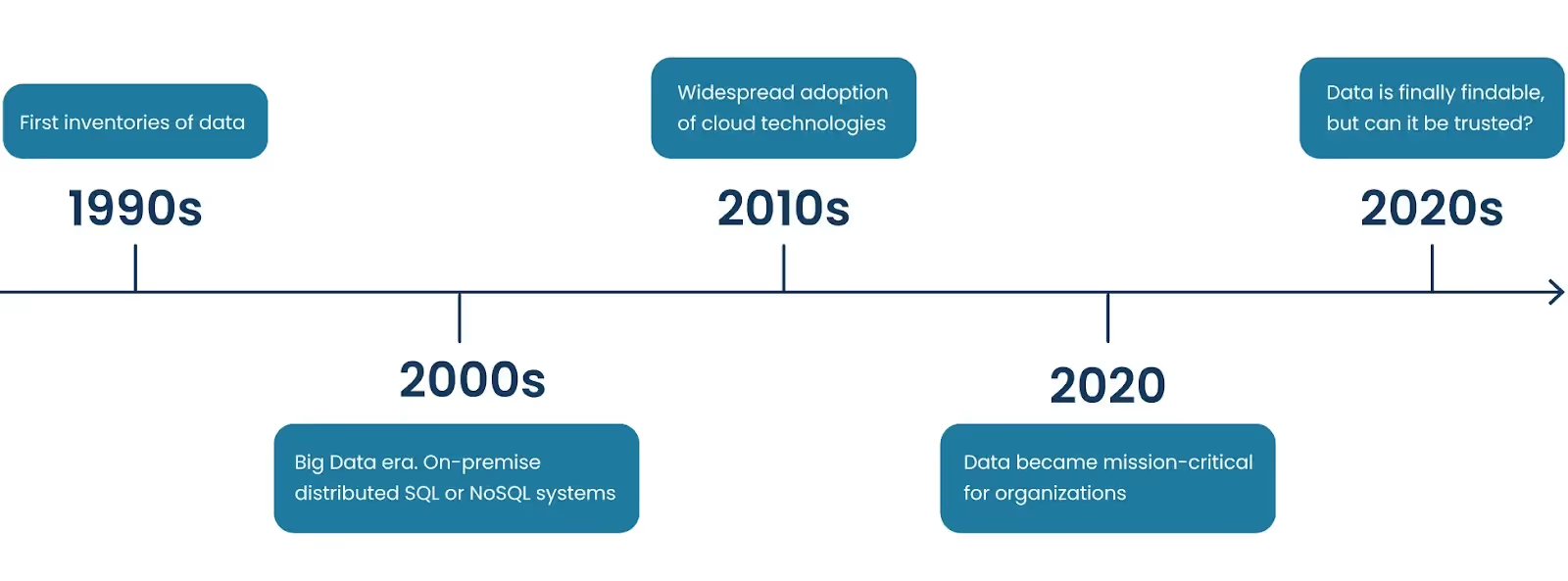

The history of data catalogs can be traced back over the past three decades, with different phases marking their development.

The 1990s—2000s: The emergence of metadata management

The modern concept of metadata emerged in the late 1990s when the internet gained widespread acceptance, and organizations began managing their growing volumes of data. During this time, companies like Informatica and Talend pioneered metadata management by creating the first data inventories.

The 2010s: The rise of data stewardship and enhanced catalogs

Starting in 2010, data became mission-critical for organizations, leading to a broader recognition of its importance beyond the IT team. This shift prompted the adoption of Data Stewardship, emphasizing the need for dedicated teams to handle metadata.

With the growing volumes of data, it became so that simply having an inventory of data was no longer sufficient. And that additional business context within data catalogs was needed. This led to the innovation of the second generation of data catalogs, developed by organizations such as Collibra and Alation, which offered enhanced features and capabilities.

The 2020s: Trustworthy data in a complex landscape

In recent years, data catalogs have evolved to become unified access layers for all data sources and stores. However, relying solely on a data catalog as a single source of truth is insufficient.

While a data catalog provides a comprehensive inventory of available assets, it primarily serves as a metadata repository rather than actively assessing the quality and accuracy of the data it catalogs. Without data quality monitoring, organizations risk basing critical decisions on potentially flawed or incomplete data.

Data quality monitoring plays a critical role in ensuring the reliability and integrity of the data used for analysis, reporting, and decision-making. It involves continuously assessing data for accuracy, completeness, consistency, and timeliness, among other quality dimensions. This monitoring process helps identify and rectify issues such as data duplication, inconsistency, outdated information, and missing values.

By neglecting data quality monitoring and relying solely on a data catalog, organizations face several potential pitfalls:

- Data inconsistencies or inaccuracies may go unnoticed, leading to erroneous analyses and flawed business insights. Such inaccuracies can propagate throughout the organization, resulting in misguided strategic decisions and wasted resources.

- Without ongoing data quality monitoring, organizations may lack visibility into changes or updates in the underlying data sources. New data sets may be introduced, existing data may be modified, or data quality issues may emerge over time. Failing to track and address these changes can compromise the trustworthiness of the data catalog and diminish its value as a single source of truth.

- Data quality issues may have cascading effects on downstream processes and applications. For example, if data fed into automated decision-making systems or machine learning models are of poor quality, the results and recommendations generated by these systems will likely be flawed. This can lead to adverse outcomes, erode trust in the technology, and hinder the organization’s ability to leverage data for innovation and growth.

Data observability, which includes data cataloging and data quality monitoring, is a powerful addition to a data management strategy. Data observability refers to the ability to gain comprehensive insights into the data. By incorporating data observability, organizations can gain a deeper understanding of their data infrastructure, ensuring its reliability. Additionally, it helps detect anomalies in data pipelines, allowing for timely troubleshooting.

Data observability tools encompass more than just a data catalog. They include metadata monitoring, data lineage, and monitoring features, providing a comprehensive approach to data management. By combining these capabilities, they offer a holistic and comprehensive approach to data management. With data observability tools, organizations can ensure the integrity, quality, and accessibility of their data throughout its lifecycle, enabling effective decision-making and enhancing overall data governance.

In order to maximize the effectiveness and efficiency of data management, the future of data catalogs hinges on their seamless integration with data observability tools, enabling organizations to not only understand their data but also to continuously monitor, validate, and govern its quality, lineage, and usage throughout their lifecycle.

Establishing reliability: Beyond the data catalog

Implementing a comprehensive data management strategy is vital for organizations to thrive in the long run. While a data catalog serves as a valuable starting point, it’s important to recognize that it cannot be solely relied upon as the ultimate authority for data reliability.

To establish a true single source of truth, organizations must consider incorporating additional initiatives, such as data quality monitoring and data observability. By integrating these practices into their data catalog implementation, businesses can ensure that the information stored within the data catalog is not only easily accessible but also trustworthy.

Data quality initiatives focus on maintaining the integrity and accuracy of data throughout its lifecycle. They involve implementing processes to identify and rectify inconsistencies, anomalies, and errors, thereby enhancing the overall reliability of the data catalog. Through continuous monitoring and evaluation, organizations can improve the quality of their data assets, enabling informed decision-making while minimizing the risk of drawing erroneous conclusions.

Similarly, data observability initiatives play a vital role in guaranteeing the reliability and transparency of data within the catalog. By implementing monitoring systems and tools, businesses can gain real-time insights into the health and performance of their data infrastructure. This proactive approach allows for the identification and resolution of issues, such as data pipeline failures or data quality deviations, ensuring that the data catalog remains up-to-date and trustworthy.

In conclusion, while a data catalog is a valuable tool, it should not be viewed as a standalone solution for establishing a single source of truth. To fully leverage the benefits of a data catalog and ensure the reliability of data assets, organizations must embrace data quality and data observability initiatives as integral components of their overall data management strategy. By doing so, businesses can foster trust in their data, make well-informed decisions, and position themselves for sustained success in today’s data-driven landscape.

%2520copy%2520(3).avif)

-p-500.png)