If I were to choose three themes to describe the state of the Modern Data Stack and what was top of mind for most data leaders in 2021, they would be speed, autonomy, and reliability.

- Speed: everyone wants to access data NOW. Many new technologies are making this possible and allowing organizations to support even the most sophisticated analytics use cases as they scale.

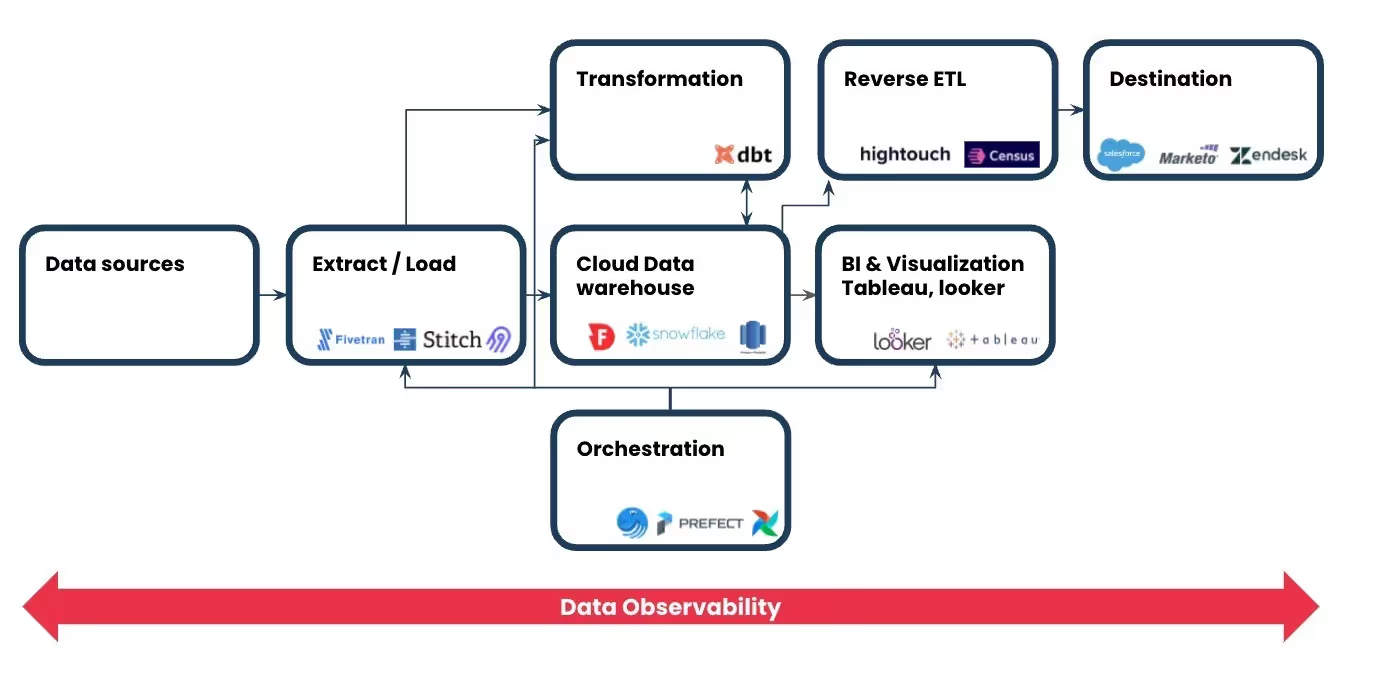

- Autonomy: self-service analytics continued to thrive in 2021, helped along by some fantastic new tools in the space. With ELT becoming the new ETL, and reverse ETL going further by letting business users act on the data sitting in the warehouse, data integration has never been more straightforward.

- Reliability: every company wants to become data-driven, but almost every company struggles with a lack of trust in its data. Reliability in data is an umbrella term that spans everything from the reliability of the infrastructure to the reliability of the data itself. While the two cannot be fully separated, the latter gained significant exposure over the past couple of years and established itself as a new category within the data infrastructure and tooling space.

Along those same lines, here are five trends that shaped the Modern Data Stack in 2021, and how some of them will continue to prevail in 2022.

- Democratization of both the data AND the data stack

- Data Integration made easier: ETL to ELT and reverse ETL

- Performance and speed: Everyone wants data, yesterday

- Data mesh

- Data observability

Democratization: Both the data and the data stack.

By definition, democratization is the action of making something accessible to everyone. As companies strive to become more data-driven, they have made considerable efforts to ensure relevant data is easily accessible to everyone in the organization. Emphasis on “relevant” and “easily”. Democratization starts with setting up the right processes internally and ensuring that everyone speaks the same language when it comes to data: the proverbial single source of truth.

Data literacy plays a massive role in adopting a data-driven culture. For decades, working with data was reserved for a tiny group of people, and in some cases it still is. Today, many companies and technologies emerging in the data infrastructure and tooling space allow even the least technical personas to leverage data (more on reverse ETL later). You no longer need to be a SQL ninja to support a basic operational analytics use case.

Data Integration made easier: ELT is the new ETL.

ETL, short for Extract, Transform, Load, is the process of moving data from one system to another through an ETL pipeline in three steps. First, the data is extracted from one or more sources (typically a SQL or NoSQL database). It is then transformed to match the format, structure, or shape the destination requires. Finally, the data is loaded into the destination system, typically a cloud data warehouse.

ELT, on the other hand, stands for Extract, Load, Transform, and the critical difference between the two processes lies in the transformation stage: precisely when and where it happens. In a traditional ETL pipeline, the transformation occurs in a staging environment outside the data warehouse, where the data is temporarily held for transformation; only the transformed data is then loaded into the warehouse. In ELT, data is first loaded into the target system in its raw form and then transformed within the data warehouse itself, so, unlike ETL, it does not rely on a separate staging environment. More on this by the team at Qlik.
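To make the “when and where” concrete, here is a minimal sketch that runs the same transformation both ways. It assumes Python’s built-in `sqlite3` in-memory database as a stand-in for the cloud data warehouse and a hard-coded list as a stand-in for the source system; the table names and the cents-to-dollars transformation are illustrative, not taken from any real pipeline.

```python
import sqlite3

# Stand-in for a source system: (order_id, amount_in_cents, country_code)
SOURCE_ROWS = [(1, 1250, "us"), (2, 990, "fr"), (3, 4000, "us")]


def extract():
    """Extract: pull raw records from the source (stubbed here as a list)."""
    return list(SOURCE_ROWS)


def etl(warehouse: sqlite3.Connection) -> None:
    """ETL: transform in the pipeline's own environment, then load the result."""
    rows = extract()
    # Transform outside the warehouse: cents -> dollars, normalize country codes.
    transformed = [(oid, cents / 100, cc.upper()) for oid, cents, cc in rows]
    warehouse.execute(
        "CREATE TABLE orders_etl (order_id INTEGER, amount REAL, country TEXT)"
    )
    warehouse.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", transformed)


def elt(warehouse: sqlite3.Connection) -> None:
    """ELT: load the raw records first, then transform inside the warehouse with SQL."""
    rows = extract()
    warehouse.execute(
        "CREATE TABLE raw_orders (order_id INTEGER, amount_cents INTEGER, country TEXT)"
    )
    warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)
    # The same transformation, expressed in SQL and executed by the warehouse engine.
    # raw_orders stays available, so it can be re-modeled later without re-extracting.
    warehouse.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT order_id, amount_cents / 100.0 AS amount, UPPER(country) AS country
        FROM raw_orders
        """
    )


if __name__ == "__main__":
    wh = sqlite3.connect(":memory:")
    etl(wh)
    elt(wh)
    print(wh.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall())
    print(wh.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall())
```

Both paths end with the same modeled table. The practical difference is that ELT pushes the transformation onto the warehouse’s compute and keeps the raw data in place, which is where the flexibility argument comes from.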

I won’t go into further detail on why you should pick one process over the other, but in short: the need for speed, cost optimization, and greater flexibility when working with data in its raw form propelled the move from ETL to ELT.

Reverse ETL is…the new ETL?

In 2021, we witnessed the growth of a new data integration methodology: reverse ETL. And no, it’s not “LTE”, tempting as that would be. Reverse ETL is the process of moving data from the data warehouse into cloud-based business applications (CRM, marketing, and finance tools, etc.) so that business teams can act on it directly in the tools they already use. As the name suggests, reverse ETL does the opposite of what a traditional ETL pipeline does: if the data warehouse is the center of the data stack, reverse ETL is ETL for its right-hand side. More on this by Hightouch.
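Here is a minimal sketch of a reverse ETL sync, again using `sqlite3` as a stand-in for the warehouse. The `orders` table, the email match key, and the `push` callback are hypothetical; in practice `push` would wrap the destination tool’s API, which is not shown here. One common design choice, assumed in this sketch, is to send only records that changed since the previous run.

```python
import sqlite3
from typing import Callable, Dict, List

# Hypothetical record shape a downstream CRM might accept.
CrmRecord = Dict[str, object]


def read_model(warehouse: sqlite3.Connection) -> Dict[str, CrmRecord]:
    """Read a modeled metric from the warehouse, keyed by the CRM's match key (email)."""
    rows = warehouse.execute(
        "SELECT email, SUM(amount) FROM orders GROUP BY email"
    ).fetchall()
    return {
        email: {"email": email, "lifetime_value": round(total, 2)}
        for email, total in rows
    }


def reverse_etl_sync(
    warehouse: sqlite3.Connection,
    last_synced: Dict[str, CrmRecord],
    push: Callable[[List[CrmRecord]], None],
) -> Dict[str, CrmRecord]:
    """Send only new or changed records downstream; return the new sync state."""
    current = read_model(warehouse)
    changed = [rec for key, rec in current.items() if last_synced.get(key) != rec]
    if changed:
        push(changed)  # hypothetical: one batched call to the destination's API
    return current


if __name__ == "__main__":
    wh = sqlite3.connect(":memory:")
    wh.execute("CREATE TABLE orders (email TEXT, amount REAL)")
    wh.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("a@example.com", 12.5), ("b@example.com", 9.9), ("a@example.com", 40.0)],
    )
    state = reverse_etl_sync(wh, {}, lambda batch: print("push", batch))  # first run: 2 records
    state = reverse_etl_sync(wh, state, lambda batch: print("push", batch))  # no changes: nothing sent
```

The warehouse stays the single source of truth; the sync only mirrors a modeled result into the operational tool.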

Although the concept might seem simple, reverse ETL gained significant visibility this year thanks to its ability to power operational analytics use cases and to put data in the hands of more teams across the organization.

Performance and speed: Everyone wants data, yesterday.

Fast and good don’t necessarily mean expensive when it comes to data storage and processing, and Snowflake played a significant role in making that possible by separating storage from compute. Now that general-purpose analytics is accessible to most organizations, the focus has shifted to performance and speed. We’ve all enjoyed following the Databricks vs. Snowflake saga, Rockset vs. Apache Druid vs. ClickHouse, and then ClickHouse vs. TimescaleDB. If there is a common theme to these modern data stack street battles, it is speed: everyone claims to be the fastest and most cost-efficient tool.

This trend is most prevalent in the data storage space, which is more mature and where user expectations and KPIs are relatively well defined. It will undoubtedly expand to other, less mature areas of the modern data stack in the future.

Decentralization, Data as a Product, data mesh.

It wouldn’t be a 2021 data trends recap without the trend that took the data world by storm and sparked numerous debates: the data mesh. Although, technically speaking, early concepts of the data mesh were first introduced in 2019, by 2021 the idea had developed into a fundamental data architecture paradigm shift, with some highly data-mature organizations adopting it and sharing their learnings with the broader community. For the uninitiated, the data mesh, as explained by its creator Zhamak Dehghani, is a decentralized socio-technical approach to accessing, managing, and sharing data within an organization. Dehghani built her framework around four key principles:

- Domain-oriented ownership: domain-driven design applied to data.

- Data as a product, to be shared within and outside the organization.

- Self-serve data infrastructure as a platform, allowing for more autonomy and broader democratization of the data.

- Federated computational governance, balancing the independence of each team while harmonizing standards of quality and control across the organization.
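To make “data as a product” and federated governance a little more concrete, here is a minimal sketch. The `DataProduct` descriptor and the four global policies are hypothetical illustrations, not part of Dehghani’s framework or any specific tool: each domain owns and publishes its product, while a small, shared rule set is checked automatically for every product.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DataProduct:
    """A dataset published by a domain team, treated as a product with an explicit interface."""
    name: str                 # e.g. "checkout.orders"
    domain: str               # owning domain (domain-oriented ownership)
    owner: str                # accountable team or person
    schema: Dict[str, str]    # column -> type: the contract consumers rely on
    freshness_sla_hours: int  # maximum staleness promised to consumers


def governance_violations(product: DataProduct) -> List[str]:
    """Check a product against a few hypothetical organization-wide policies.

    The global rule set stays deliberately small; each domain decides
    everything else (pipelines, tooling, modeling) on its own.
    """
    violations = []
    if not product.owner:
        violations.append("no accountable owner")
    if not product.name.startswith(product.domain + "."):
        violations.append("name is not namespaced by its domain")
    if not product.schema:
        violations.append("no published schema")
    if product.freshness_sla_hours <= 0:
        violations.append("no freshness SLA declared")
    return violations


if __name__ == "__main__":
    orders = DataProduct(
        "checkout.orders", "checkout", "checkout-data-team",
        {"order_id": "int", "amount": "decimal"}, 24,
    )
    print(governance_violations(orders))  # an empty list means the product is compliant
```

Running such checks automatically (for example in each domain’s CI) is one way to keep governance federated rather than centralized.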

Here are some other useful resources on the topic:

- Zalando case study: link

- JP Morgan case study: link

- Zhamak’s latest take on the topic via Data Engineering podcast: link

Data Observability.

I will start by declaring that I am biased regarding this particular topic. That being said, this area in the Data Infrastructure and Tooling space gained significant momentum this year with some amazing new technologies and companies attempting to solve this complex problem.

Originally a concept borrowed from the DevOps world, Data Observability is quickly becoming an essential part of any modern data stack, ensuring data teams can drive business initiatives with reliable data.

In DevOps, observability is centered around three main pillars: traces, logs, and metrics, which together form the Golden Triangle of any infrastructure and application monitoring framework. Solutions like Datadog, New Relic, Splunk, and others have paved the way for what has become standard practice in software development; it only makes sense that some of their best practices would carry over to Data Engineering, a subset of Software Engineering.

Data observability, data quality monitoring, data reliability, pipeline monitoring, etc., are terms that are often used interchangeably, and wrongly so. I won’t go into detail as to why, as that is a topic for another debate, but you can make your data fully observable by breaking down the process around it into key “observable” steps across the enterprise data pipeline. Your data warehouse, as “the single source of truth”, should certainly be at the epicenter of any data observability framework. However, it shouldn’t stop there. This is where the Modern Data Stack is very powerful: it splits the pipeline across specialized tools while documenting each step of the process through code and metadata. This is why I think extensive data lineage across the whole data pipeline is an essential feature of any Data Observability tool.
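A minimal sketch of why lineage matters, assuming a hypothetical `Asset` record for each observable step and two illustrative checks (freshness and volume). A real tool would gather this metadata from the warehouse and the other tools in the stack; here it is hard-coded. When a check fails, walking the lineage graph tells you which downstream assets are affected, even if they look healthy on their own.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Set


@dataclass
class Asset:
    """One observable step in the pipeline (a source table, a model, a sync)."""
    name: str
    last_updated: datetime
    row_count: int
    upstream: List[str] = field(default_factory=list)  # lineage edges


def check_asset(asset: Asset, now: datetime, max_staleness: timedelta, min_rows: int) -> List[str]:
    """Run two simple checks on one asset: freshness and volume."""
    issues = []
    if now - asset.last_updated > max_staleness:
        issues.append(f"{asset.name}: stale (last updated {asset.last_updated.isoformat()})")
    if asset.row_count < min_rows:
        issues.append(f"{asset.name}: low volume ({asset.row_count} rows)")
    return issues


def downstream_of(assets: Dict[str, Asset], name: str) -> Set[str]:
    """Follow lineage edges to find every asset that depends on `name`, directly or not."""
    children: Dict[str, List[str]] = {}
    for asset in assets.values():
        for parent in asset.upstream:
            children.setdefault(parent, []).append(asset.name)
    impacted: Set[str] = set()
    stack = [name]
    while stack:
        for child in children.get(stack.pop(), []):
            if child not in impacted:
                impacted.add(child)
                stack.append(child)
    return impacted


if __name__ == "__main__":
    now = datetime(2022, 1, 3, 9, 0, tzinfo=timezone.utc)
    assets = {
        "raw_orders": Asset("raw_orders", now - timedelta(hours=30), 0),
        "stg_orders": Asset("stg_orders", now - timedelta(hours=1), 1200, ["raw_orders"]),
        "crm_sync": Asset("crm_sync", now - timedelta(hours=1), 800, ["stg_orders"]),
    }
    for a in assets.values():
        for issue in check_asset(a, now, max_staleness=timedelta(hours=24), min_rows=1):
            print(issue, "-> impacted:", sorted(downstream_of(assets, a.name)))
```

In this example, `stg_orders` and `crm_sync` pass their own checks, yet both are built on a stale, empty source; only the lineage walk surfaces that.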

Like anything new, this category is fascinating, with a lot of room for innovation. And you know what they say: the best way to predict the future is to build it!

Conclusion

The past couple of years have been challenging for many industries. When companies were forced to pivot, downsize, or create new revenue lines, data-driven decision-making became key to survival.

For decades, we kept hearing about organizations wanting to become data-driven; only recently have we started to see real initiatives and work put into researching, implementing, and adopting new technologies to reach data maturity. And in 2021, the Data & Analytics industry did not disappoint.

Collecting data is no longer a challenge; moving the data from the source to storage and then actually using it for operational analytics is the focus of many organizations today. While ETL is nothing new, performance improvements and the shift to ELT allowed organizations to scale further and enabled more modern analytics use cases. And as reverse ETL gained popularity, operational analytics became much simpler.

The developments in data integration also furthered the democratization of data and tooling, which remains on the agenda for most data leaders.

Last but not least, Data Observability as a category gained immense momentum in 2021; we witnessed it become an essential part of any modern data stack (or rather, the top of the stack), and it will surely continue to grow and contribute to the adoption of data-driven decision-making in 2022 and beyond.
