Cost Observability

Cost-efficient data pipelines

Pinpoint cost inefficiencies and anomalies thanks to full-stack data observability.

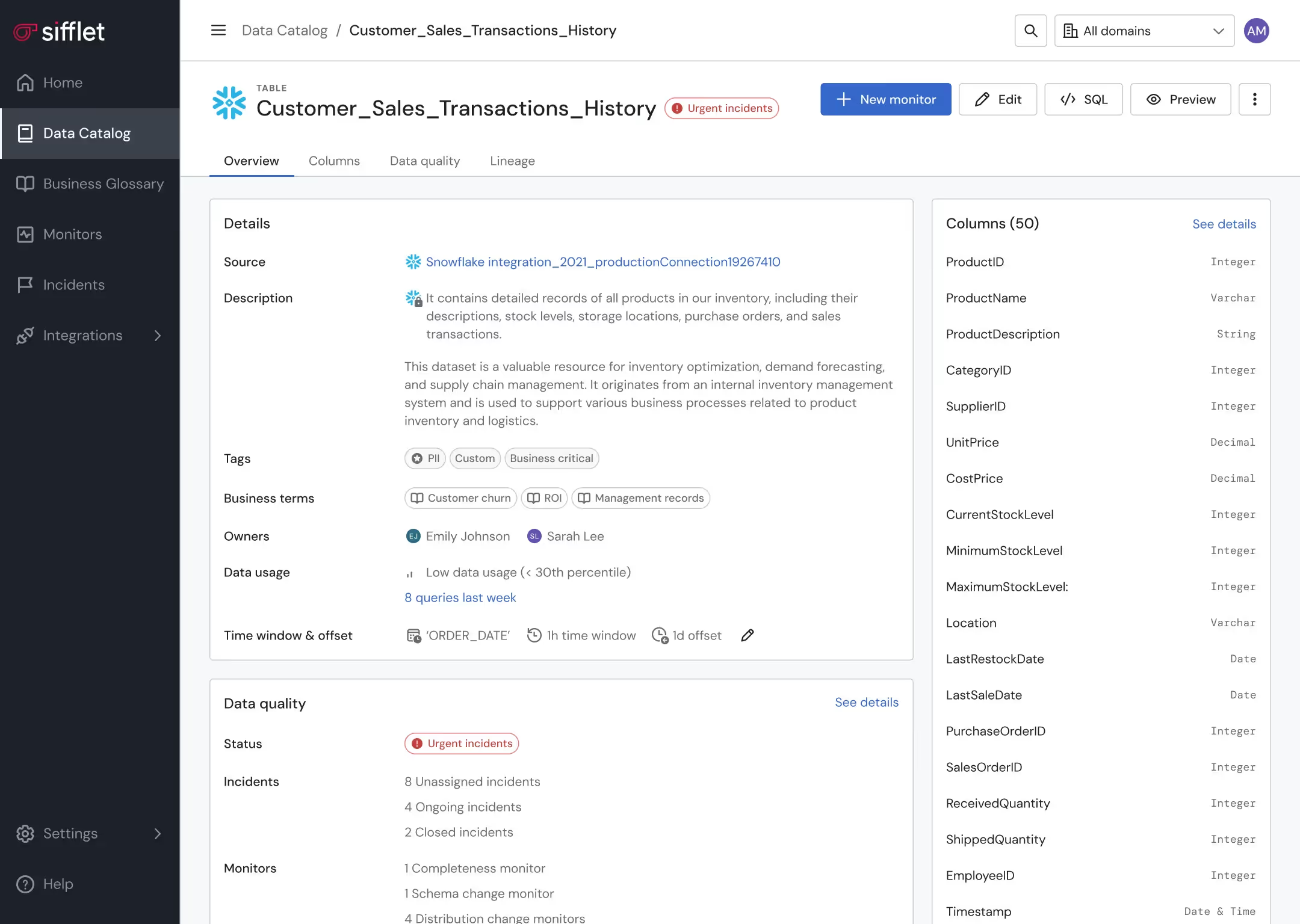

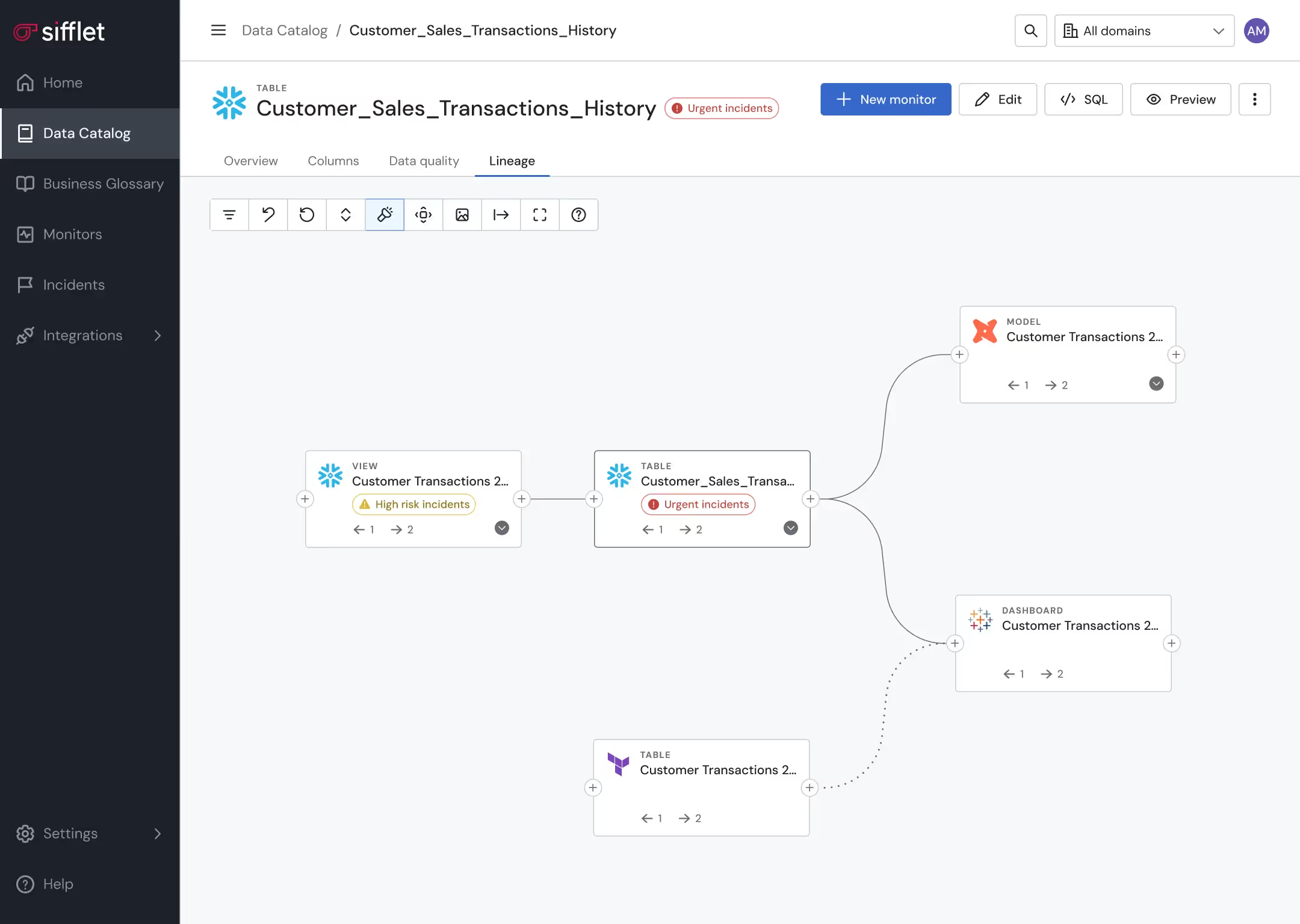

Data asset optimization

- Leverage lineage and Data Catalog to pinpoint underutilized assets

- Get alerted on unexpected behaviors in data consumption patterns
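The Python sketch below illustrates the two ideas above in a generic way. It assumes that lineage is available as a plain dict mapping each asset to its direct downstream consumers, and that recent query counts per asset are known. The function names, asset names, and the z-score threshold are hypothetical. This is not Sifflet's API or its detection method.

```python
from statistics import mean, pstdev


def underutilized_assets(lineage, query_counts, min_queries=1):
    """Return assets that have no downstream consumers in the lineage graph
    AND fewer than `min_queries` recent queries (hypothetical inputs)."""
    return sorted(
        asset
        for asset, consumers in lineage.items()
        if not consumers and query_counts.get(asset, 0) < min_queries
    )


def consumption_anomaly(history, today, threshold=3.0):
    """Flag today's query count when it is more than `threshold` standard
    deviations away from the historical mean (a simple z-score test)."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Flat history: any change at all counts as unexpected.
        return today != mu
    return abs(today - mu) / sigma > threshold


# Illustrative data only.
lineage = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["dash.revenue"],
    "dash.revenue": [],       # leaf, but heavily queried
    "tmp.backfill_2021": [],  # leaf and never queried
}
query_counts = {"raw.orders": 40, "stg.orders": 35, "dash.revenue": 120}

print(underutilized_assets(lineage, query_counts))
print(consumption_anomaly([100, 98, 103, 101, 99], 20))
```

A leaf asset with heavy query traffic (here `dash.revenue`) is deliberately not flagged. Combining lineage with usage is what separates an end-of-pipeline dashboard from a forgotten table.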

Proactive data pipeline management

Prevent pipelines from running when a data quality anomaly is detected
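One common way to implement this is a "circuit breaker." Quality checks run on a step's input first, and the step is skipped if any check fails. The minimal Python sketch below assumes checks are plain functions over in-memory rows. `DataQualityError`, `null_rate`, `run_with_circuit_breaker`, and the 10% null-rate threshold are hypothetical names and values, not part of Sifflet's product.

```python
class DataQualityError(RuntimeError):
    """Raised when a pre-run quality check fails and the step is blocked."""


def null_rate(rows, column):
    """Fraction of rows whose `column` is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for row in rows if row.get(column) is None) / len(rows)


def run_with_circuit_breaker(rows, checks, pipeline_step):
    """Run every (name, check) pair on the input first. Call pipeline_step
    only if all checks return True; otherwise raise and skip the step."""
    failed = [name for name, check in checks if not check(rows)]
    if failed:
        raise DataQualityError(f"blocked: failed checks {failed}")
    return pipeline_step(rows)


# Hypothetical checks for an orders table.
checks = [
    ("non_empty", lambda rs: len(rs) > 0),
    ("amount_null_rate_below_10pct", lambda rs: null_rate(rs, "amount") < 0.1),
]

rows = [{"order_id": 1, "amount": 10.0}, {"order_id": 2, "amount": None}]
try:
    run_with_circuit_breaker(rows, checks, lambda rs: print("loading", len(rs), "rows"))
except DataQualityError as err:
    print(err)  # the load step never runs
```

Raising an exception rather than returning a flag lets an orchestrator mark the task as failed and halt everything downstream of it.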

Frequently asked questions

What’s next for Sifflet’s metrics observability capabilities?

We’re expanding support to more BI and transformation tools beyond Looker, and enhancing our ML-based monitoring to group business metrics by domain. This will improve consistency and make it even easier for users to explore metrics across the semantic layer.

What should a solid data quality monitoring framework include?

A strong data quality monitoring framework should be scalable, rule-based and powered by AI for anomaly detection. It should support multiple data sources and provide actionable insights, not just alerts. Tools that enable data drift detection, schema validation and real-time alerts can make a huge difference in maintaining data integrity across your pipelines.
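To make "schema validation" and "rule-based" concrete, the sketch below checks rows against an expected schema and a list of named rules. It returns readable findings rather than a bare pass/fail. It assumes rows arrive as Python dicts. The schema, the rule, and the function names are hypothetical illustrations, not a specific tool's API.

```python
def schema_violations(rows, schema):
    """Compare each row's columns and value types with `schema`
    (column name -> expected Python type). None values are not type-checked."""
    issues = []
    for i, row in enumerate(rows):
        missing = sorted(schema.keys() - row.keys())
        unexpected = sorted(row.keys() - schema.keys())
        if missing:
            issues.append(f"row {i}: missing columns {missing}")
        if unexpected:
            issues.append(f"row {i}: unexpected columns {unexpected}")
        for col, expected in schema.items():
            value = row.get(col)
            if value is not None and not isinstance(value, expected):
                issues.append(
                    f"row {i}: {col} is {type(value).__name__}, expected {expected.__name__}"
                )
    return issues


def rule_violations(rows, rules):
    """Evaluate named row-level rules and report which rows break each one."""
    issues = []
    for name, predicate in rules:
        failing = [i for i, row in enumerate(rows) if not predicate(row)]
        if failing:
            issues.append(f"rule '{name}' failed on rows {failing}")
    return issues


# Hypothetical contract for an orders table.
schema = {"order_id": int, "amount": float, "country": str}
rules = [
    ("amount >= 0",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
]
rows = [
    {"order_id": 1, "amount": 19.99, "country": "FR"},
    {"order_id": 2, "amount": "n/a", "country": "DE"},
    {"order_id": 3, "amount": -5.0},
]

for finding in schema_violations(rows, schema) + rule_violations(rows, rules):
    print(finding)
```

Each finding names the row and the reason, which is the kind of actionable output the framework above calls for. Drift detection would sit alongside these checks and compare distributions over time rather than individual rows.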

How does Sifflet’s dbt Impact Analysis improve data pipeline monitoring?

By surfacing impacted tables, dashboards, and other assets directly in GitHub or GitLab, Sifflet’s dbt Impact Analysis gives teams real-time visibility into how changes affect the broader data pipeline. This supports better data pipeline monitoring and helps maintain data reliability.
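At its core, impact analysis is a downstream traversal of the lineage graph, starting from the models a change touches. The breadth-first sketch below assumes lineage is a dict mapping each asset to its direct children. dbt's `manifest.json` artifact includes a `child_map` of roughly this shape, and `dbt ls --select stg_orders+` lists downstream nodes from the CLI, but this sketch reads neither. The model and dashboard names are hypothetical, and this is not Sifflet's implementation.

```python
from collections import deque


def impacted_assets(lineage, changed):
    """Breadth-first traversal returning every asset downstream of the
    changed models. The `seen` set makes cycles in the graph safe."""
    seen = set()
    queue = deque(changed)
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


# Hypothetical lineage: dbt models feeding a BI dashboard.
lineage = {
    "stg_orders": ["fct_revenue", "fct_orders"],
    "fct_revenue": ["dashboard.revenue"],
    "fct_orders": [],
    "stg_users": ["dim_users"],
}

# A pull request modifying stg_orders would surface these assets for review.
print(sorted(impacted_assets(lineage, ["stg_orders"])))
```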

What is data distribution deviation and why should I care about it?

Data distribution deviation, a form of data drift, happens when the distribution of your data changes over time, either gradually or suddenly. Left undetected, it can break queries and produce misleading business metrics. With Sifflet's data observability platform, you can automatically monitor for these deviations and catch problems before they impact your decisions.
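One standard way to quantify a distribution change is the two-sample Kolmogorov-Smirnov (KS) statistic. It measures the largest gap between the empirical cumulative distributions of a reference window and a current window. The dependency-free sketch below computes it. The sample values and the 0.3 alert threshold are hypothetical, and nothing here implies this is the test Sifflet uses. SciPy's `scipy.stats.ks_2samp` computes the same statistic along with a p-value.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: max |F_ref(x) - F_cur(x)| over all observed x,
    where F is the empirical CDF. The maximum is reached at a sample point."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    d = 0.0
    for x in set(ref) | set(cur):
        f_ref = bisect_right(ref, x) / n  # fraction of reference values <= x
        f_cur = bisect_right(cur, x) / m  # fraction of current values <= x
        d = max(d, abs(f_ref - f_cur))
    return d


DRIFT_THRESHOLD = 0.3  # hypothetical alerting threshold

last_week = [10, 11, 12, 13, 14, 15]
this_week = [20, 21, 22, 23, 24, 25]  # sudden shift in the data

d = ks_statistic(last_week, this_week)
print(d, "drift!" if d > DRIFT_THRESHOLD else "ok")
```

A statistic of 0 means identical empirical distributions and 1 means no overlap at all. Gradual drift shows up as a value that creeps upward across successive windows.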

How did jobvalley improve data visibility across their teams?

jobvalley enhanced data visibility by implementing Sifflet’s observability platform, which included a powerful data catalog. This centralized hub made it easier for teams to discover and access the data they needed, fostering better collaboration and transparency across departments.

How does Sifflet help improve data reliability for modern organizations?

At Sifflet, we provide a full-stack observability platform that gives teams complete visibility into their data pipelines. From data quality monitoring to root cause analysis and real-time anomaly detection, we help organizations ensure their data is accurate, timely, and trustworthy.

How can organizations improve data governance with modern observability tools?

Modern observability tools offer powerful features like data lineage tracking, audit logging, and schema registry integration. These capabilities help organizations improve data governance by providing transparency, enforcing data contracts, and ensuring compliance with evolving regulations like GDPR.

How does Sifflet support both technical and business teams?

Sifflet is designed to bridge the gap between data engineers and business users. It combines powerful features like automated anomaly detection, data lineage, and context-rich alerting with a no-code interface that’s accessible to non-technical teams. This means everyone—from analysts to execs—can get real-time metrics and insights about data reliability without needing to dig through logs or write SQL. It’s observability that works across the org, not just for the data team.
