Up to 73% of the data in an enterprise is never used or even analyzed.

That means business teams may never see information that could tip the scales in their decisions.

The solution? A well-structured data platform.

Building a data platform is like building a high-performance kitchen: with the right tools and fresh ingredients, your team can cook up anything.

But if the tools are scattered, the ingredients are stale, or the workflows are unclear, even the best chefs can’t work their magic.

The promise of a great data platform lies in what it enables. If you treat it like a strategic investment, it’ll keep delivering long after the setup is done.

What is a Data Platform?

A data platform is the central system that brings together all of a company's data, ingesting it from different sources, cleaning and organizing it, and making it accessible for analysis and decision-making.

It’s the foundation that powers reports, dashboards, AI models, and operational workflows.

Without a data platform, teams often struggle with mismatched metrics, siloed spreadsheets, and hours wasted searching for answers. With the help of a data platform, they can move faster, stay aligned, and build data-driven products or strategies with confidence.

How Does a Data Platform Work in Practice?

Imagine you’re the data lead at a growing e-commerce startup in 2026.

You notice that your marketing, product, and operations teams all use data, but they each rely on different dashboards, track different metrics, and spend hours debating “which number is right.”

You decide it’s time to centralize everything. You invest in a modern data stack: Fivetran to ingest data from apps like Shopify and Stripe, Snowflake to store it, dbt to clean and transform it, and Looker for reporting.

You also set up Sifflet to monitor data quality and alert your team when something breaks.

By 2028, teams across the company are using the same source of truth. Marketing automates its campaign reporting. Product uses clean funnel data to prioritize features. Finance can close the books in days, not weeks. Your investment in the data platform is now fueling smarter, faster decisions across the company.

Why is a Data Platform Important?

A strong data platform transforms raw data into information all business teams can actually use.

Instead of data living in silos or being locked away in spreadsheets and dashboards that are reviewed too late, a modern data platform makes information accessible, timely, and actionable.

It becomes the foundation that lets teams across the organization make better decisions based on current, accurate facts.

With real-time or near-real-time insights, leaders no longer have to rely on weekly or monthly reports that describe what already happened. Teams can spot trends as they emerge, respond quickly to changes in customer behavior, and adjust strategy before small issues turn into expensive problems.

A well-designed data platform also enables stronger products.

By centralizing and organizing customer and usage data, companies can build personalized experiences, smarter recommendations, and features that adapt to user needs.

For example, product teams gain a deeper understanding of how customers interact with the product, which leads to more informed roadmaps and higher customer satisfaction.

Additionally, a well-structured data platform leads to higher operational efficiency.

Accurate, reliable data improves forecasting, inventory planning, and resource allocation. It helps businesses reduce churn by identifying at-risk customers earlier, and it streamlines internal processes. Over time, this efficiency compounds into lower costs and better margins.

Finally, a data platform is critical for AI and machine learning readiness.

Clean, well-labeled, and well-governed data is a prerequisite for experimentation and model development. Without it, AI initiatives stall before they begin. With it, teams can move faster from ideas to production.

In short, a well-structured, complete data platform is the past, present, and future of your business decisions.

How Does a Data Platform Work?

At a high level, most platforms follow a flow:

- Ingest data from apps, APIs, and systems (e.g. Salesforce, Stripe, internal databases)

- Store it in a central place like a warehouse or lake

- Transform it by cleaning, normalizing, and joining it into useful models

- Govern and secure access, lineage, and quality

- Serve the data to users through dashboards, queries, or APIs

- Monitor with tools like Sifflet to make sure nothing breaks behind the scenes
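To make the flow concrete, here is a minimal Python sketch that walks a handful of toy records through each stage, with a plain dictionary standing in for the warehouse. Everything in it (the records, the `ACCESS_POLICY` roles, the stage functions) is hypothetical and deliberately simplified; in a real platform each stage is handled by dedicated tools like the ones named above.

```python
# Hypothetical raw records from a source system such as a billing API.
SOURCE = [
    {"order_id": 1, "amount": "19.99"},
    {"order_id": 2, "amount": "5.00"},
    {"order_id": 2, "amount": "5.00"},  # the same record delivered twice
]

# Hypothetical access policy: which roles may read which tables.
ACCESS_POLICY = {"orders": {"analyst", "finance"}}


def ingest():
    """Ingest: pull raw records from the source."""
    return list(SOURCE)


def store(raw_rows, storage):
    """Store: land the raw rows unchanged in central storage."""
    storage["raw_orders"] = raw_rows


def transform(storage):
    """Transform: drop duplicate orders and convert amounts from text to numbers."""
    seen, clean = set(), []
    for row in storage["raw_orders"]:
        if row["order_id"] not in seen:
            seen.add(row["order_id"])
            clean.append({"order_id": row["order_id"], "amount": float(row["amount"])})
    storage["orders"] = clean


def serve(storage, table, role):
    """Govern + serve: return a table only to roles the policy allows."""
    if role not in ACCESS_POLICY.get(table, set()):
        raise PermissionError(f"role {role!r} may not read {table!r}")
    return storage[table]


def monitor(storage):
    """Monitor: fail loudly if the modeled table came out empty."""
    if not storage.get("orders"):
        raise RuntimeError("orders table is empty: check upstream stages")


storage = {}
store(ingest(), storage)
transform(storage)
monitor(storage)
orders = serve(storage, "orders", role="analyst")
total = round(sum(o["amount"] for o in orders), 2)
print(total)  # 24.99
```

Keeping the raw table alongside the cleaned one mirrors the common practice of never overwriting what was ingested, so a transformation can always be rerun from the original data.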

The magic is that once this flow is in place, data becomes a reliable, reusable asset, not just something that lives in a spreadsheet.

Types of Data Platforms

Data platforms can be classified by the type of data they handle (structured or unstructured) and their capabilities, or by the business function they serve.

If we take data type into consideration, cloud data warehouses focus on structured data and analytics, making them ideal for reporting, business intelligence, and fast SQL-based querying.

They provide reliability, performance, and scalability for decision-making across the organization.

Examples: Snowflake, BigQuery, Redshift

Data lakes take a more flexible approach by storing raw, unstructured, or semi-structured data in its original form.

This makes them especially useful for data science, experimentation, and handling large volumes of diverse data such as logs, events, and media files.

Examples: Databricks Lakehouse, AWS S3 with Athena

Hybrid platforms blend the strengths of warehouses and lakes, offering both structured analytics and advanced AI or machine learning capabilities in a single environment.

These platforms aim to reduce complexity by supporting multiple workloads without forcing teams to choose one architecture over another.

Examples: Databricks, Starburst

Finally, and most importantly, end-to-end data platforms are not single tools but thoughtfully assembled systems.

By combining specialized tools for ingestion, transformation, governance, and quality, companies create a cohesive data ecosystem tailored to their goals.

You assemble your platform using tools like:

- dbt for transforming and modeling data

- Collibra or Atlan for governance and data cataloging

- Sifflet to monitor data quality and system health

If we take business function into account, there are four more types of data platform:

Enterprise Data Platform

An enterprise data platform is built to serve the entire organization. Its primary focus is on standardization, governance, security, and reliability at scale.

These platforms integrate data from multiple departments, such as finance, operations, sales, and HR, into a single, trusted source of truth.

They emphasize compliance, access control, and data consistency, making them especially important for large or regulated organizations that need strong oversight and cross-functional reporting.

Big Data Platform

A big data platform is designed to handle very large volumes of data (as you might have guessed from its name), often arriving at high speed and in many formats.

It supports use cases like real-time analytics, event processing, and advanced machine learning.

These platforms prioritize scalability and performance over simplicity, allowing teams to process streaming data, logs, IoT signals, and massive datasets that traditional systems can’t handle efficiently.

Cloud Data Platform

A cloud data platform is optimized for flexibility and speed of deployment. Instead of managing physical infrastructure, teams rely on cloud-native services that scale on demand and follow a pay-as-you-go model.

These platforms make it easier to experiment, onboard new data sources, and support remote or distributed teams. They are often the foundation for modern analytics, AI initiatives, and rapid business growth.

Customer Data Platform (CDP)

A customer data platform is purpose-built to unify customer information across touchpoints such as websites, mobile apps, CRM systems, and marketing tools.

The goal of a CDP is to create a single, persistent customer profile that can be used for personalization, segmentation, and targeted engagement. CDPs are especially valuable for marketing, product, and growth teams looking to deliver consistent, data-driven customer experiences across channels.
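As a rough illustration of how a CDP builds a single profile, the sketch below merges records from different touchpoints that share a normalized email address. Matching on email alone is a simplifying assumption (real CDPs typically combine several identifiers and matching rules), and the record fields are hypothetical.

```python
from collections import defaultdict

# Hypothetical records collected from three touchpoints.
records = [
    {"source": "web", "email": "Ada@Example.com", "last_page": "/pricing"},
    {"source": "crm", "email": "ada@example.com", "plan": "pro"},
    {"source": "app", "email": "bob@example.com", "sessions": 12},
]


def unify_profiles(records):
    """Group records by normalized email and merge their attributes into one profile."""
    profiles = defaultdict(lambda: {"sources": set()})
    for record in records:
        key = record["email"].strip().lower()  # identity key (assumption: email only)
        profile = profiles[key]
        profile["sources"].add(record["source"])
        for field, value in record.items():
            if field not in ("source", "email"):
                profile[field] = value  # later records overwrite earlier values
    return dict(profiles)


profiles = unify_profiles(records)
print(profiles["ada@example.com"])
```

The "last write wins" merge rule is the simplest possible choice; a production profile would usually need per-field rules, such as preferring the CRM for plan data.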

Components of an End-to-End Data Platform

A data platform provides powerful solutions to manage both event-based data and session-based data.

Event-based data are individual occurrences or actions logged by a system, such as a button click, a page view, or a purchase transaction. A data platform offers solutions to collect, store, and process this high-volume, high-velocity data in real time or near real time.

Session-based data, in contrast, groups user interactions into a cohesive unit, often defined by a period of user activity such as a visit to a website or a session in an app. Data platforms support sessionization logic to group event data by user and time window, enabling deeper analysis of user engagement, navigation paths, and behavioral patterns.
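The sessionization logic described above can be sketched in a few lines of Python: sort each user's events by time and start a new session whenever the gap since the previous event exceeds an inactivity timeout. The 30-minute timeout is a common convention used here as an assumption, and the event tuples are hypothetical.

```python
from datetime import datetime, timedelta
from itertools import groupby

SESSION_TIMEOUT = timedelta(minutes=30)  # assumed inactivity threshold

# Hypothetical (user_id, timestamp, event_name) tuples.
events = [
    ("u1", datetime(2026, 1, 5, 9, 0), "page_view"),
    ("u1", datetime(2026, 1, 5, 9, 10), "add_to_cart"),
    ("u1", datetime(2026, 1, 5, 11, 0), "page_view"),
    ("u2", datetime(2026, 1, 5, 9, 5), "page_view"),
]


def sessionize(events, timeout=SESSION_TIMEOUT):
    """Assign a per-user session number to each event based on inactivity gaps."""
    sessions = []
    ordered = sorted(events, key=lambda e: (e[0], e[1]))  # by user, then time
    for user, user_events in groupby(ordered, key=lambda e: e[0]):
        session_id, previous = 0, None
        for _, ts, name in user_events:
            if previous is not None and ts - previous > timeout:
                session_id += 1  # gap too long: start a new session
            sessions.append((user, session_id, ts, name))
            previous = ts
    return sessions


for user, session_id, ts, name in sessionize(events):
    print(user, session_id, ts.time(), name)
```

User `u1` ends up with two sessions, because nearly two hours pass between the add-to-cart event and the next page view.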

A data platform is a layered system, with each component playing a specific role in turning raw data into trusted, usable insights.

Here’s how the pieces fit together and what each one actually does:

1. Data ingestion

Getting your data in.

This is where it all begins. Ingestion tools connect to your external data sources, like Salesforce, Stripe, or internal databases, and bring that data into your platform.

Ingestion can happen in two main ways:

- Batch ingestion: Data is pulled at scheduled intervals (e.g., hourly, daily).

- Real-time ingestion: Data is streamed continuously as new events happen (e.g., user clicks, transactions).

This layer ensures that your platform has access to the freshest possible data without requiring teams to manually move files around.

Popular tools: Fivetran, Airbyte, Stitch, Kafka
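Batch ingestion is often incremental: each scheduled run pulls only the records that changed since the last run, tracked with a cursor such as an `updated_at` timestamp. The sketch below shows that pattern with a hypothetical `fetch_since` function standing in for a source API; managed tools handle this bookkeeping for you, and their internals may differ.

```python
from datetime import datetime

# Hypothetical source table; in practice this would be an API or database query.
SOURCE = [
    {"id": 1, "updated_at": datetime(2026, 1, 1, 8)},
    {"id": 2, "updated_at": datetime(2026, 1, 1, 9)},
    {"id": 3, "updated_at": datetime(2026, 1, 1, 10)},
]


def fetch_since(cursor):
    """Hypothetical source call: return records updated strictly after the cursor."""
    return [r for r in SOURCE if cursor is None or r["updated_at"] > cursor]


def run_batch(state, destination):
    """One scheduled run: load new records and advance the saved cursor."""
    new_rows = fetch_since(state.get("cursor"))
    destination.extend(new_rows)
    if new_rows:
        state["cursor"] = max(r["updated_at"] for r in new_rows)
    return len(new_rows)


state, warehouse = {}, []
print(run_batch(state, warehouse))  # 3 (first run loads everything)
SOURCE.append({"id": 4, "updated_at": datetime(2026, 1, 1, 11)})
print(run_batch(state, warehouse))  # 1 (only the new record)
```

The strict `>` comparison is a simplification: records that share the cursor's exact timestamp but arrive late would be skipped, which is why incremental loads are usually paired with some overlap window or deduplication downstream.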

2. Data storage

Once ingested, data needs a home. This is your storage layer, designed to scale as your data grows.

There are two primary types:

- Data warehouses store clean, structured data optimized for analytics and reporting.

- Data lakes store raw, semi-structured, or unstructured data (logs, images, clickstreams), offering more flexibility for exploration and ML.

Some companies use both, or a hybrid "lakehouse" model that combines the strengths of each.

Popular tools: Snowflake, BigQuery, Databricks, Redshift, Delta Lake
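One way to see the difference between the two storage types: a warehouse table declares its schema when data is written, while a lake keeps raw files and applies structure only when the data is read. The sketch below imitates both with the Python standard library, using SQLite as a stand-in for a warehouse (SQLite enforces column types only loosely, so treat it as an illustration) and a JSON Lines file as a stand-in for a lake object. File and table names are hypothetical.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

events = [
    {"user_id": "u1", "type": "click", "meta": {"button": "buy"}},
    {"user_id": "u2", "type": "view"},  # no "meta" field: fine for the lake
]

# Lake-style: store raw records exactly as they arrive (schema-on-read).
lake_file = Path(tempfile.mkdtemp()) / "events-2026-01-05.jsonl"
lake_file.write_text("\n".join(json.dumps(e) for e in events))

# Read time: structure is imposed only now, tolerating missing fields.
parsed = [json.loads(line) for line in lake_file.read_text().splitlines()]
buttons = [e.get("meta", {}).get("button") for e in parsed]

# Warehouse-style: columns are declared up front (schema-on-write).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id TEXT NOT NULL, type TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO events (user_id, type) VALUES (?, ?)",
    [(e["user_id"], e["type"]) for e in events],  # nested "meta" is dropped here
)

print(buttons)  # ['buy', None]
print(conn.execute("SELECT COUNT(*) FROM events").fetchone()[0])  # 2
```

The lake kept the nested `meta` field for later exploration; the warehouse table had to decide up front which columns to keep, which is what makes it fast and predictable for reporting.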

3. Data transformation

Raw data is messy and needs to be organized.

Transformation tools clean it up by removing duplicates, correcting formats, and modeling it into useful tables for downstream teams.

This typically follows the ELT approach:

- Extract and load data first,

- then transform it inside the warehouse using SQL or Python.

This is where business logic lives (e.g., “What is a customer?”), so consistency and documentation matter.

Popular tools: dbt, Spark, Dataform, Trino
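Here is a minimal ELT sketch, using an in-memory SQLite database as a stand-in for the warehouse: raw rows are loaded untouched, then cleaned and modeled with SQL views, in the same spirit as a dbt model, which is defined as a SELECT statement. The table names, and the definition of a customer as anyone with at least one paid order, are illustrative assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Extract + Load: land raw records exactly as the source sent them.
conn.execute("CREATE TABLE raw_orders (order_id INTEGER, email TEXT, status TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?, ?)",
    [
        (1, "Ada@Example.com ", "PAID", "20.00"),
        (1, "Ada@Example.com ", "PAID", "20.00"),  # duplicate from a retried sync
        (2, "bob@example.com", "refunded", "5.00"),
        (3, "ada@example.com", "paid", "7.50"),
    ],
)

# Transform inside the warehouse: deduplicate, normalize formats, cast types.
conn.execute(
    """
    CREATE VIEW stg_orders AS
    SELECT DISTINCT order_id,
           LOWER(TRIM(email))   AS email,
           LOWER(status)        AS status,
           CAST(amount AS REAL) AS amount
    FROM raw_orders
    """
)

# Business logic (assumed definition): a customer has at least one paid order.
conn.execute(
    """
    CREATE VIEW customers AS
    SELECT email, COUNT(*) AS paid_orders, SUM(amount) AS lifetime_value
    FROM stg_orders
    WHERE status = 'paid'
    GROUP BY email
    """
)

print(conn.execute("SELECT * FROM customers").fetchall())
# [('ada@example.com', 2, 27.5)]
```

Because the definition of a customer lives in one view, every dashboard that reads `customers` inherits the same logic, which is the consistency argument for keeping business rules in the transformation layer.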

4. Data orchestration

Automating your workflows.

Data pipelines often depend on each other (e.g., sales data needs to load before revenue can be calculated). Orchestration tools help manage the order, timing, and dependencies of these jobs.

They monitor success/failure, retry failures, and allow you to build DAGs (directed acyclic graphs) that map out how your data flows.

Popular tools: Airflow, Dagster, Prefect
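To show the core of what an orchestrator does, the sketch below runs hypothetical tasks in dependency order using Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) and retries a failing task a fixed number of times. Airflow, Dagster, and Prefect add scheduling, logging, and alerting on top of this idea, and their APIs look quite different from this sketch.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical pipeline).
DAG = {
    "load_sales": set(),
    "load_refunds": set(),
    "calc_revenue": {"load_sales", "load_refunds"},
    "refresh_dashboard": {"calc_revenue"},
}

attempts = {}


def run_task(name):
    """Stand-in for real work; 'load_sales' fails once to demonstrate retries."""
    attempts[name] = attempts.get(name, 0) + 1
    if name == "load_sales" and attempts[name] == 1:
        raise ConnectionError("source timed out")


def run_dag(dag, max_retries=2):
    """Run tasks so every task starts only after all of its dependencies succeed."""
    completed = []
    for task in TopologicalSorter(dag).static_order():
        for attempt in range(max_retries + 1):
            try:
                run_task(task)
                break
            except ConnectionError:
                if attempt == max_retries:
                    raise  # give up: downstream tasks never run
        completed.append(task)
    return completed


completed = run_dag(DAG)
print(completed)
```

Running tasks strictly one at a time keeps the example short; real orchestrators run independent branches, such as the two load tasks here, in parallel.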

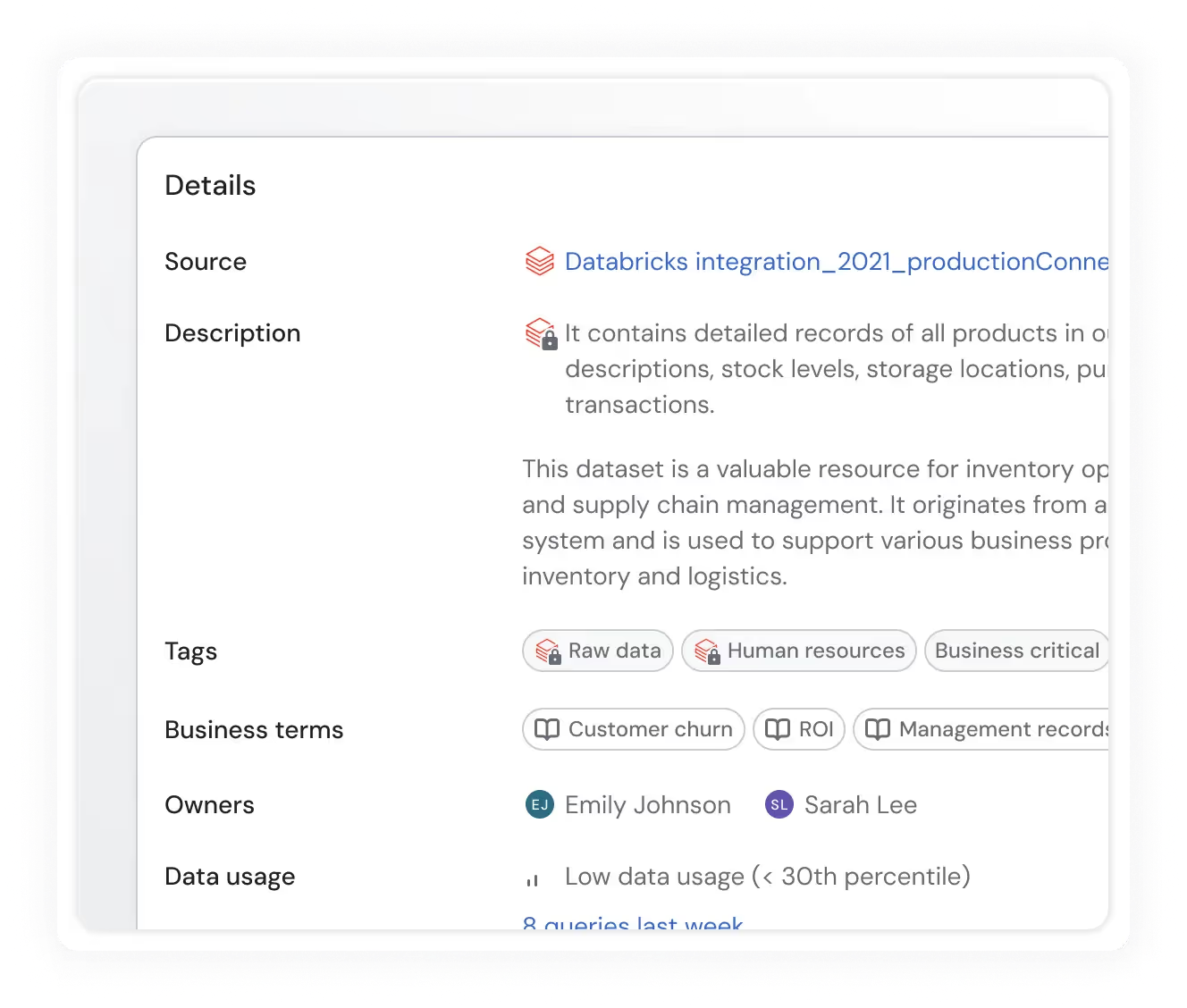

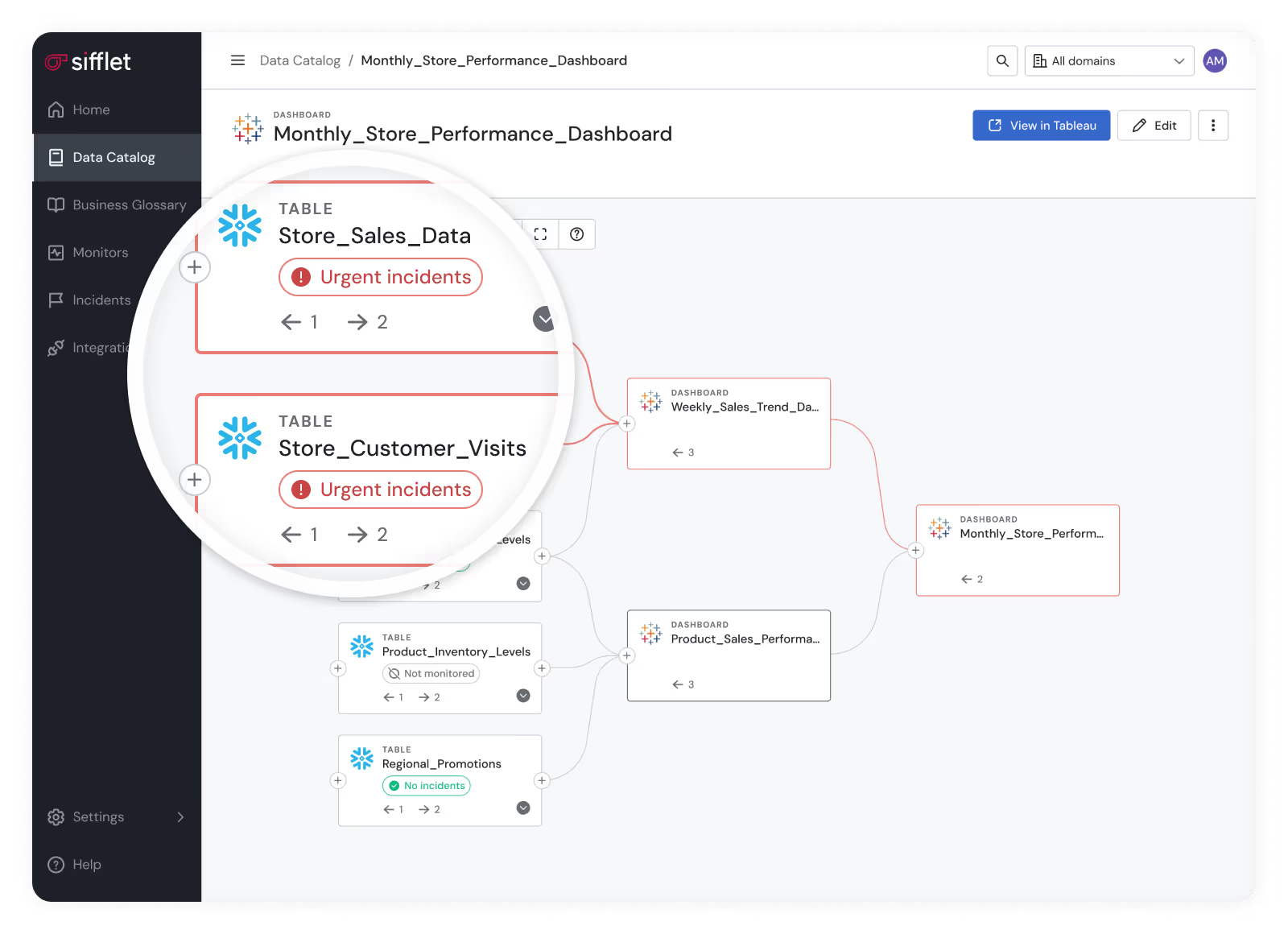

5. Data catalog and governance

Making data discoverable and trustworthy.

As your platform grows, it's easy to lose track of what data exists and who owns it.

A data catalog solves this by acting as a searchable inventory of datasets, columns, and their metadata, while data governance is the set of rules that keeps that data organized and trustworthy inside the catalog.

Features typically include:

- Data discovery – Search across datasets

- Lineage tracking – See where data came from and where it’s used

- Ownership and documentation – Know who to ask and what it means

- Access policies – Control who sees what

Catalogs are key to building a data culture of trust and accountability.

Popular tools: Alation, Collibra, Atlan, DataHub, Amundsen
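Lineage tracking is essentially a graph problem. The sketch below models a tiny catalog as a Python dictionary (dataset names, owners, and dependencies are hypothetical), supports a simple name search, and walks the lineage graph downstream to list every asset affected by a change, along with the owners to notify.

```python
from collections import deque

# Hypothetical catalog entries: owner plus upstream dependencies.
CATALOG = {
    "raw.stripe_charges": {"owner": "data-eng", "upstream": []},
    "raw.shopify_orders": {"owner": "data-eng", "upstream": []},
    "stg.orders": {"owner": "analytics", "upstream": ["raw.shopify_orders"]},
    "mart.revenue": {"owner": "finance", "upstream": ["stg.orders", "raw.stripe_charges"]},
    "dash.weekly_kpis": {"owner": "marketing", "upstream": ["mart.revenue"]},
}


def search(term):
    """Data discovery: find datasets whose name contains the term."""
    return sorted(name for name in CATALOG if term in name)


def downstream(dataset):
    """Lineage: breadth-first walk to every asset that depends on `dataset`."""
    children = {name: [] for name in CATALOG}
    for name, entry in CATALOG.items():
        for parent in entry["upstream"]:
            children[parent].append(name)
    seen, queue = set(), deque([dataset])
    while queue:
        for child in children[queue.popleft()]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


print(search("orders"))  # ['raw.shopify_orders', 'stg.orders']
impacted = downstream("raw.shopify_orders")
print(impacted)  # ['dash.weekly_kpis', 'mart.revenue', 'stg.orders']
print(sorted({CATALOG[d]["owner"] for d in impacted}))  # ['analytics', 'finance', 'marketing']
```

Storing only upstream links and deriving the downstream view on demand keeps each catalog entry small; dedicated catalogs typically extract these links automatically from SQL and pipeline metadata rather than relying on manual entries.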

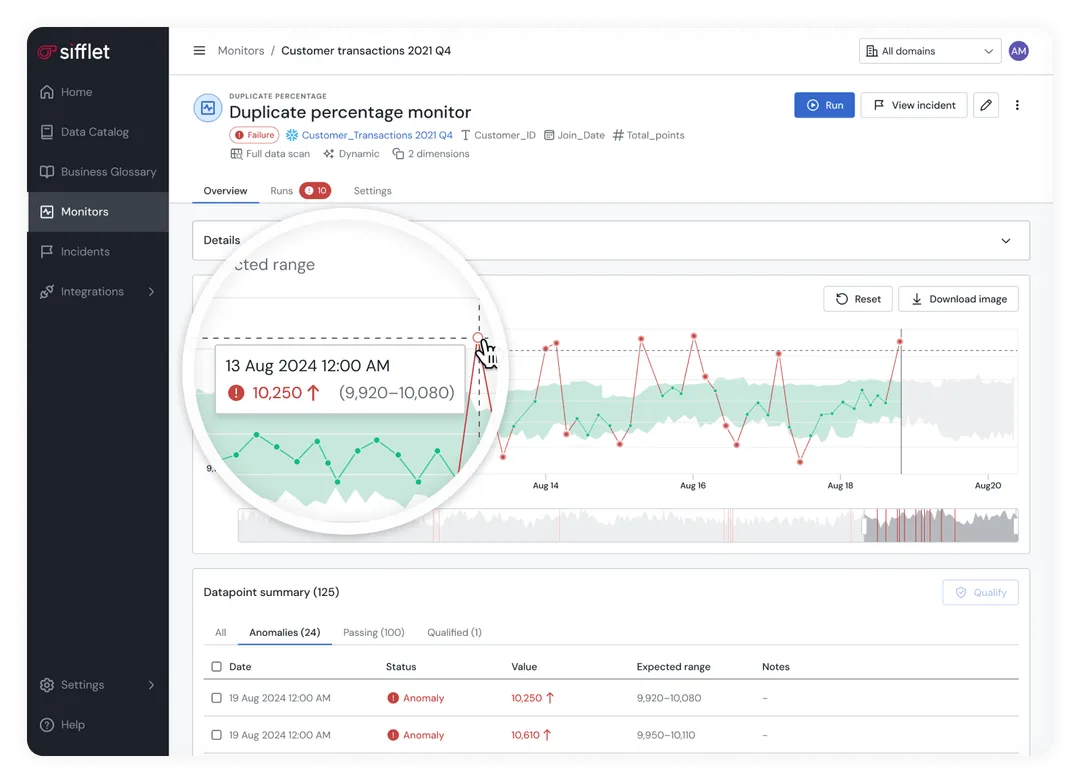

6. Observability

Making sure it works.

Even the best pipelines can break: schemas change, data stops flowing, or values spike unexpectedly.

Observability tools monitor the health and behavior of your data assets and alert you when something looks off.

Data observability answers questions like:

- Is this dashboard still reliable?

- Did something silently fail upstream?

- Why did this metric suddenly drop?

Modern platforms like Sifflet go beyond detection to help with root cause analysis and resolution by including technologies such as:

- Data catalog

- Data lineage

- Data monitoring

- AI Agents

Popular tools: Sifflet, Monte Carlo, Anomalo
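Two of the most common checks behind data monitoring are freshness (has the table been updated recently?) and volume (is today's row count in line with history?). The sketch below implements both with a simple z-score rule; the six-hour freshness window, the threshold of three standard deviations, and the sample numbers are assumptions, and observability tools use their own, more sophisticated detection methods.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def check_freshness(last_loaded_at, now, max_age=timedelta(hours=6)):
    """Freshness: pass only if the table was updated within max_age (assumed SLA)."""
    age = now - last_loaded_at
    return age <= max_age, age


def check_volume(history, today, z_threshold=3.0):
    """Volume: pass only if today's row count is within z_threshold std devs of history."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today == mu, 0.0
    z = (today - mu) / sigma
    return abs(z) <= z_threshold, z


now = datetime(2026, 1, 5, 12, 0)
fresh_ok, age = check_freshness(datetime(2026, 1, 5, 2, 0), now)
volume_ok, z = check_volume([10_120, 9_870, 10_050, 9_990, 10_210], today=4_300)

if not fresh_ok:
    print(f"ALERT: table is stale ({age} since last load)")
if not volume_ok:
    print(f"ALERT: row count anomaly (z = {z:.1f})")
```

A fixed threshold like this is easy to reason about but ignores seasonality such as weekend dips, which is one reason dedicated tools learn expected ranges from history instead.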

7. Analytics and BI

Delivering insights.

This is where the magic becomes visible. BI tools sit on top of the platform and let teams explore, visualize, and share insights.

Whether it's building dashboards, running ad-hoc queries, or feeding data into product features, this layer brings the data platform to life for end users.

Popular tools: Looker, Tableau, Mode, Hex, Metabase

How to Build a Data Platform

You don’t need to go from zero to Snowflake overnight. Most companies evolve in stages:

Step 1: Choose your use case

You can’t solve every problem straight off the bat. Start with a concrete business problem like marketing attribution, product analytics, or revenue reporting.

This keeps you focused on the data that matters and makes clear who will use it and what “success” looks like.

Step 2: Ingest relevant data sources

Bring in only the data your use case needs, using managed ingestion tools like Fivetran or Airbyte. This could include CRM data, application events, billing systems, or customer interactions.

Step 3: Store your data

Store the ingested data in a cloud-based warehouse such as Snowflake, BigQuery, or Redshift.

Prioritize reliability and scalability over complexity at this stage.

Step 4: Transform and model data

Turn raw data into actionable insights by defining clear data models, business metrics, and naming conventions.

Use tools like dbt to automate testing, documentation, and transformations so teams can trust the data.
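dbt lets you declare tests such as `unique` and `not_null` on model columns; each test runs as a query that returns the failing records. The Python sketch below mimics those two checks on an in-memory list of rows. It is an analogy for illustration, not how dbt itself is implemented, and the `orders` rows are hypothetical.

```python
# Hypothetical modeled rows, e.g. the output of an `orders` model.
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 20.0},
    {"order_id": 2, "customer_id": None, "amount": 5.0},
    {"order_id": 2, "customer_id": "c2", "amount": 7.5},
]


def check_not_null(rows, column):
    """Return rows where the column is missing (similar in spirit to dbt's not_null)."""
    return [r for r in rows if r.get(column) is None]


def check_unique(rows, column):
    """Return values that appear more than once (similar in spirit to dbt's unique)."""
    seen, dupes = set(), set()
    for r in rows:
        value = r[column]
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return sorted(dupes)


failures = {
    "not_null(customer_id)": check_not_null(orders, "customer_id"),
    "unique(order_id)": check_unique(orders, "order_id"),
}
for name, failing in failures.items():
    status = "PASS" if not failing else f"FAIL ({len(failing)} failing)"
    print(f"{name}: {status}")
# not_null(customer_id): FAIL (1 failing)
# unique(order_id): FAIL (1 failing)
```

Returning the failing records rather than a bare true/false makes it much faster to see what broke and where to start fixing it.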

Step 5: Make insights accessible and visible

Expose the transformed data through dashboards, notebooks, or embedded analytics using tools like Looker, Mode, or Hex.

Focus on usability and relevance to end users, not flashy reports.

Step 6: Monitor quality and scale

Implement monitoring and data quality checks with tools like Sifflet to catch broken pipelines, missing data, or metric drift early. Scale the platform gradually, guided by real usage and evolving business needs.

Each step of this process should solve a real problem, not just check a box. A successful data platform grows organically, driven by business needs, not buzzwords.

Data Platforms in a Nutshell…

A data platform is like building a high-performance kitchen for a gourmet chef.

When you invest in the right tools, organize your workspace, and stock it with fresh ingredients, your team can cook up anything, whether it’s a fast insight or a multi-course machine learning model.

Over time, that kitchen becomes a creative engine: empowering every team to serve up results faster, cleaner, and more consistently.

But if the tools are scattered, the ingredients are stale, or the workflows are unclear, even the best chefs can get bogged down and the quality of dishes can suffer…bye, bye, crème brûlée.

The promise of a great data platform lies in what it enables. If you treat it like a strategic investment, it’ll keep delivering long after the setup is done.

If you’re looking for a solid data observability tool that watches over your entire data platform, spots and prioritizes errors, and predicts shifts, try out Sifflet.
