Definition

Data quality management (DQM) is the ongoing practice of ensuring your organization's data remains accurate, complete, and aligned with business needs. It combines technical processes, governance frameworks, and collaborative practices to maintain data that teams can trust for decision-making, operations, and strategic initiatives.

Understanding Data Quality Management

Data quality management is about building the systems and processes that prevent quality issues from occurring in the first place. It’s the difference between constantly patching leaks in your roof and installing proper drainage and weatherproofing from the start.

By embedding data quality practices into their data workflows, organizations can build a sustainable competitive advantage over companies that treat data quality management as a reactive cleanup effort and find themselves constantly fighting fires.

Example

A regional logistics company has expanded from local deliveries to cross-country freight. Its route optimization system has been its competitive advantage, but lately, delivery times are getting worse and fuel costs are spiraling. Customers are complaining about missed delivery windows more frequently, while drivers are reporting that the “optimal” routes send them through traffic jams or to incorrect and outdated customer locations.

As the Head of Operations, you start digging into the data with your analytics team and realize this isn’t an algorithmic routing problem, but a data quality management breakdown.

On the technical side, your GPS tracking data is inconsistent across your mixed fleet of vehicles. Newer trucks report location every 30 seconds, while older vehicles update every 10 minutes, making it impossible to accurately predict arrival times.

Moreover, customer address data is fragmented across multiple systems. Some addresses come from your sales team's CRM, others from customer self-service portals, and freight pickup locations from third-party logistics partners, each with different formatting standards.

The business quality management issues are more complex. Your route optimization was built when you handled mostly residential deliveries with predictable time windows. Now, 70% of your business is B2B freight with loading dock constraints, appointment-only deliveries, and weight restrictions that your system doesn't account for.

Your dispatch team defines "on-time delivery" as arriving within the scheduled day, while your customer success team promises specific 2-hour windows to clients, creating constant conflicts over performance metrics.
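To see how much the two definitions can diverge, here is a minimal Python sketch. The delivery records, function names, and dates are all hypothetical and exist only for illustration; the point is that the same four deliveries produce two different "on-time" rates depending on which team's rule is applied.

```python
from datetime import datetime, timedelta

# Hypothetical deliveries: (promised window start, actual arrival).
deliveries = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 10, 30)),   # inside the 2-hour window
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 15, 0)),    # same day, outside the window
    (datetime(2024, 5, 1, 13, 0), datetime(2024, 5, 1, 14, 59)),  # inside the 2-hour window
    (datetime(2024, 5, 1, 13, 0), datetime(2024, 5, 2, 8, 0)),    # arrives the next day
]

def on_time_same_day(window_start, arrival):
    """Dispatch's rule: on time if it arrives on the scheduled day."""
    return arrival.date() == window_start.date()

def on_time_two_hour_window(window_start, arrival):
    """Customer success's rule: on time if it arrives within the 2-hour window."""
    return window_start <= arrival <= window_start + timedelta(hours=2)

def on_time_rate(rule):
    """Share of deliveries that count as on time under the given rule."""
    hits = sum(rule(start, arrival) for start, arrival in deliveries)
    return hits / len(deliveries)

dispatch_rate = on_time_rate(on_time_same_day)
customer_success_rate = on_time_rate(on_time_two_hour_window)
print(dispatch_rate, customer_success_rate)
```

With this sample data, dispatch would report 75% on-time delivery while customer success would report 50%, even though both are reading the same records.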

You implement a comprehensive data quality management strategy:

→ Technical management: Standardized telematics across all vehicles, automated address validation against postal databases, and real-time monitoring that flags route deviations and data inconsistencies immediately.

→ Business management: Separate routing logic for residential vs. commercial deliveries, unified delivery window definitions across all customer-facing teams, and dynamic optimization that accounts for actual loading dock availability and driver hours-of-service regulations.

→ Governance framework: Operations managers as data stewards for route and customer data, daily performance reviews that connect data quality metrics to delivery performance, and quarterly reviews to ensure routing algorithms adapt to changing customer mix.

Ten months later, your on-time delivery rate improved by 30%, fuel costs dropped by 18%, and customer satisfaction scores reached all-time highs. Your drivers now trust the routing recommendations enough that you've reduced route planning time by 40%.

The result? Your data quality management framework turns your growing complexity into a competitive advantage instead of an operational burden.

Takeaway

Data quality management isn’t a one-off initiative; it’s the foundation that determines whether your organization can scale its data initiatives successfully. The organizations winning with data aren’t necessarily those with the most sophisticated technology; they’re the ones that have built sustainable processes that keep their data quality aligned with their evolving business needs. In short, your data initiatives are only as strong as the quality management practices that support them.

What is Data Quality?

Data quality describes whether data is fit for its intended business use, and assessing it requires two perspectives working together.

Technical quality refers to measurable attributes like accuracy, completeness, freshness, and consistency. These attributes are often tracked as technical metadata and answer foundational questions: Are formats correct? Are values present? Is the data up to date?

Business quality, on the other hand, considers whether data aligns with how your organization defines success. Even if revenue figures are technically correct, they’re not useful if they ignore returns or don’t match finance’s reporting logic.

When data quality breaks down, whether technically or contextually, the impact compounds: teams waste time troubleshooting, leaders lose trust in reports, and systems make bad decisions.

But when data quality is strong, organizations move faster, automate with confidence, and act on insights they can trust.

What is Data Quality Management?

With this definition in mind, effective data quality management operates across both technical and business dimensions, recognizing that data can be technically sound but business-inappropriate, or business-relevant but technically flawed.

Technical data quality management focuses on the measurable, structural aspects of data health.

This includes implementing validation rules that catch formatting errors, monitoring data pipelines for completeness and timeliness, and establishing automated checks that flag anomalies before they propagate downstream.

Technical management ensures that customer email addresses follow proper formats, that foreign keys maintain referential integrity, and that data volumes stay within expected ranges.
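The three checks just mentioned can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production implementation: the table contents, field names, and function names are hypothetical, and the email pattern is deliberately simple rather than a full address validator.

```python
import re

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical sample rows standing in for two related tables.
customers = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": "not-an-email"},
]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 3},  # references a customer that does not exist
]

def invalid_emails(rows):
    """Format check: return customer IDs whose email does not match the pattern."""
    return [r["customer_id"] for r in rows if not EMAIL_PATTERN.match(r["email"] or "")]

def orphaned_orders(order_rows, customer_rows):
    """Referential integrity check: return order IDs pointing at unknown customers."""
    known = {c["customer_id"] for c in customer_rows}
    return [o["order_id"] for o in order_rows if o["customer_id"] not in known]

def volume_in_range(row_count, expected_min, expected_max):
    """Volume check: True if the row count falls inside the expected range."""
    return expected_min <= row_count <= expected_max

print(invalid_emails(customers))
print(orphaned_orders(orders, customers))
print(volume_in_range(len(orders), 1, 100))
```

In practice, checks like these usually run inside the pipeline or the database itself; the sketch only shows the logic each check encodes.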

Business data quality management addresses whether data aligns with organizational reality and supports actual decision-making needs.

This dimension asks harder questions: Does our definition of "active customer" match how the sales team actually operates? Are we measuring customer satisfaction at the right points in the customer journey? Do our data models reflect the way our business has evolved over the past year?

The most successful organizations recognize that these dimensions must work together.

Who Manages Data Quality?

Data quality management succeeds best when it’s treated as a shared responsibility rather than a single team's mandate.

Data stewards typically serve as the primary coordinators, bridging business requirements with technical implementation.

They own the day-to-day oversight of specific datasets, working with business users to understand quality requirements and with technical teams to implement monitoring and remediation processes.

Data governance teams establish the frameworks and policies that guide quality management across the organization.

They define standards for data definitions, create processes for resolving conflicts when different teams define metrics differently, and ensure that quality initiatives align with broader data strategy goals.

Engineering and analytics teams implement the technical infrastructure that makes quality management scalable.

They build the monitoring systems, create automated validation rules, and develop the pipelines that ensure quality checks are embedded throughout data workflows rather than bolted on as an afterthought.

Business users contribute domain expertise that technical teams simply cannot replicate.

They understand the nuanced ways that data should behave, can identify when technically correct data doesn't align with business reality, and provide the context needed to prioritize quality issues based on business impact.

This collaborative approach prevents the biggest mistake organizations make when it comes to data quality: treating it as an IT responsibility that business teams ignore until issues become unavoidable.

How to Manage Data Quality

Effective data quality management starts with defining what “quality” means in context.

Rather than applying blanket rules, leading organizations tailor quality standards to how each dataset is actually used.

From a practical standpoint, that means looking at data quality in both technical and business contexts across dimensions like accuracy, completeness, consistency, timeliness, validity, uniqueness, integrity, freshness, lineage and traceability, and relevancy.

From there, there are four key data quality management steps:

1. Establishing meaningful metrics

This entails setting metrics that bridge technical health and business relevance.

For example, tracking “98% completeness of customer emails” only matters if it's tied to outcomes like email campaign reach or customer engagement.

2. Data profiling and monitoring

These are technical foundations that help detect anomalies early, but they’re only impactful when interpreted through a business lens.

3. Validation and standardization processes

These ensure data meets expectations as it enters and moves through your systems. This includes preventive checks (e.g., format validation) and corrective actions (e.g., cleansing and normalization).

4. Continuous feedback loops

By implementing feedback loops, organizations can connect quality monitoring back to business outcomes, helping teams understand which quality issues matter most and how improvements translate to better business results.

This feedback helps prioritize quality investments and demonstrates the value of quality management initiatives.
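As a rough illustration of steps 1 and 3, the Python sketch below computes an email completeness metric, compares it to the 98% target mentioned above, and applies a simple corrective normalization. The sample records, function names, and the choice of "reachable customers" as the business-facing number are assumptions made for this example.

```python
def completeness(rows, field):
    """Share of rows where `field` is present and not blank."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if (r.get(field) or "").strip())
    return filled / len(rows)

def normalize_email(value):
    """Corrective step: trim whitespace and lowercase; blank values become None."""
    return value.strip().lower() if value else None

# Hypothetical customer records.
customers = [
    {"id": 1, "email": " Ana@Example.com "},
    {"id": 2, "email": ""},
    {"id": 3, "email": "lee@example.com"},
    {"id": 4, "email": None},
]

COMPLETENESS_TARGET = 0.98  # the 98% figure used as an example in step 1

email_completeness = completeness(customers, "email")
meets_target = email_completeness >= COMPLETENESS_TARGET

# Standardize the values, then count how many customers a campaign could reach.
cleaned = [{**r, "email": normalize_email(r["email"])} for r in customers]
reachable = sum(1 for r in cleaned if r["email"])

print(email_completeness, meets_target, reachable)
```

Reporting the reachable count alongside the completeness percentage is one way to tie the technical metric back to a business outcome such as campaign reach.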

Components of a Data Quality Management Framework

Every strong data quality management framework starts with data governance.

Governance is simply the policies and standards that define what “good quality” data means for different use cases, who owns data definitions, and how to resolve conflicts should they arise.

Three additional components ensure that data quality management can come to life:

- Organizational roles and responsibilities

These bring governance to life.

There are multiple types of roles, including data owners, data stewards, and governance committees. Data stewards, for example, champion quality in their domains by bridging business needs and technical execution, while a cross-functional council aligns priorities as requirements evolve so that quality stays relevant and adaptable.

- Technical infrastructure and processes

These provide the operational backbone for quality management.

This includes monitoring systems that continuously assess data health, validation rules that prevent quality issues at ingestion points, and remediation workflows that address problems when they occur. The most effective technical infrastructure is designed to surface business-relevant quality issues rather than generating noise about minor technical anomalies.

- Measurement and feedback mechanisms

These mechanisms connect quality activities to business outcomes, helping organizations understand which quality investments deliver the greatest return.

Measurement and feedback mechanisms track both technical metrics and business impact, creating visibility into how data quality affects customer satisfaction, operational efficiency, and strategic decision-making.
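One way to picture "surfacing business-relevant issues rather than noise" is to rank detected issues by a business impact score before alerting anyone. The sketch below is a simplified assumption of how that could look: the `QualityIssue` structure, the 1 to 5 impact scale, and the threshold are all hypothetical and would be defined by your own data stewards.

```python
from dataclasses import dataclass

@dataclass
class QualityIssue:
    dataset: str
    check: str
    failed_rows: int
    business_impact: int  # hypothetical score: 1 (low) to 5 (high), set by the data steward

def prioritize(issues, min_impact=3):
    """Drop low-impact issues, then sort by impact and failed row count, highest first."""
    relevant = [i for i in issues if i.business_impact >= min_impact]
    return sorted(relevant, key=lambda i: (i.business_impact, i.failed_rows), reverse=True)

# Hypothetical issues detected by monitoring.
issues = [
    QualityIssue("internal_logs", "trailing_whitespace", failed_rows=9000, business_impact=1),
    QualityIssue("route_events", "gps_gap", failed_rows=40, business_impact=4),
    QualityIssue("customer_addresses", "postal_validation", failed_rows=120, business_impact=5),
]

for issue in prioritize(issues):
    print(issue.dataset, issue.check, issue.failed_rows)
```

Note that the log issue affects the most rows but is filtered out, because row counts alone say little about business impact.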

Example of a Data Quality Management Framework

Returning to our logistics company example, their framework shows how governance, roles, processes, and feedback work together.

They began by assigning clear data ownership: operations managers became stewards of route and delivery data, while customer success managed address and preference information. Escalation paths and quarterly reviews ensured definitions stayed aligned as the business grew.

With roles clarified, teams took accountability: operations tracked route accuracy and delivery performance, customer success monitored address and preference data, and IT supported the infrastructure, while business teams defined what “quality” meant in their domains.

Technical processes focused on what mattered: real-time GPS monitoring, automated address validation, and alerts for delivery deviations. Instead of tracking everything, they prioritized metrics tied to customer satisfaction and operational efficiency.

Finally, feedback loops linked operational metrics to business outcomes, showing how better address accuracy improved delivery rates, and how optimized routes reduced fuel costs.

This clarity helped prioritize efforts and prove the impact of data quality to leadership.

Benefits of Data Quality Management

Organizations that invest in systematic data quality management unlock capabilities that extend far beyond cleaner datasets:

- Improvement in operational efficiency

When teams trust their data, they can automate routine decisions and spend less time validating information before acting on it, making operations much more efficient.

- Faster and more accurate strategic decisions

When leadership has confidence in the data underlying critical business choices, they are able to make strategic decisions much faster.

Companies with strong data quality management can identify market opportunities faster, respond to competitive threats more effectively, and make larger strategic bets based on data insights.

- Better results in advanced analytics and AI initiatives

Machine learning models trained on clean, representative data perform more accurately and require less ongoing maintenance.

Organizations with mature data quality management can deploy AI applications more successfully because they've already solved the data foundation challenges that often derail these projects.

- Manageable regulatory compliance

If data quality processes meet accuracy and completeness requirements, achieving and maintaining compliance becomes much simpler.

Rather than scrambling to clean data for audits or regulatory submissions, organizations with systematic quality management maintain compliance-ready data as part of their normal operations.

Best Practices in Data Quality Management

Implementing data quality management is about building systems that prevent issues, support business goals, and scale with your organization.

We’ll leave you with four best practices that help teams move from reactive cleanup to proactive, business-aligned data quality:

- Preventive measures consistently outperform reactive cleanup efforts.

Implementing validation rules at data ingestion points prevents quality issues from propagating through downstream systems, while establishing clear data entry standards reduces errors at the source.

The most mature organizations embed quality checks into their normal data workflows rather than treating quality as a separate activity.

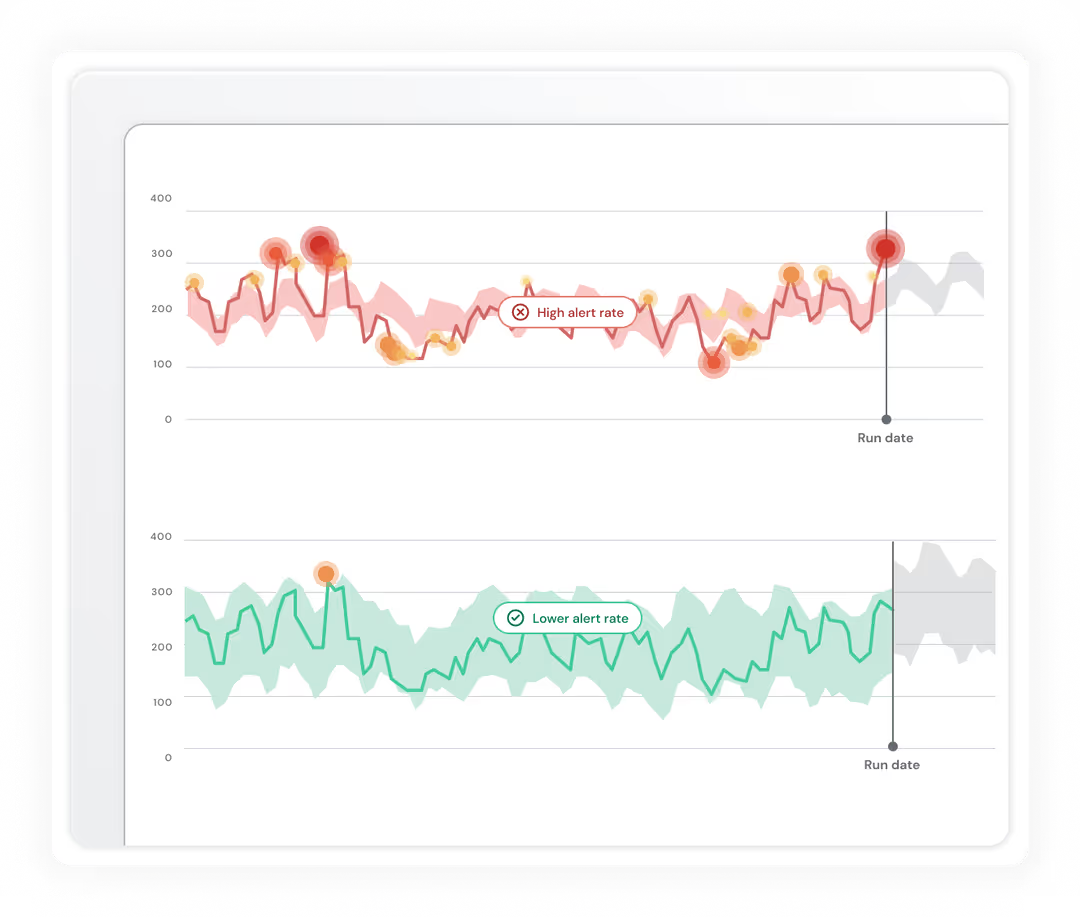

- Continuous monitoring enables faster problem resolution than periodic quality assessments.

If you’re monitoring your data quality only when your performance doesn’t meet expectations, chances are you will spend days or weeks finding the root cause.

However, implementing automated alerts will help your team solve the issue in a matter of hours.

Real-time visibility into quality metrics allows you to maintain quality standards rather than recovering from quality failures.

- Cross-functional collaboration produces more sustainable quality improvements than technical teams working in isolation.

When business users help define what constitutes quality data for their domains, the resulting standards actually support business operations rather than just meeting technical requirements.

This collaboration also ensures that quality initiatives adapt as business needs evolve.

- Gradual expansion from high-impact use cases builds confidence and demonstrates value before scaling.

Starting with data that directly affects customer experience or regulatory compliance provides clear business justification for quality investments and helps refine approaches before applying them to less critical datasets.
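To make the continuous monitoring practice above more concrete, here is a minimal Python sketch of an automated freshness alert. The function name, message format, and one-hour limit are assumptions for illustration; real monitoring tools typically add scheduling, routing, and alert deduplication on top of this kind of check.

```python
from datetime import datetime, timedelta, timezone

def freshness_alert(last_loaded_at, max_age, now=None):
    """Return an alert message if the dataset is older than max_age, otherwise None."""
    now = now or datetime.now(timezone.utc)
    age = now - last_loaded_at
    if age > max_age:
        return f"STALE: last load was {age} ago (limit {max_age})"
    return None

# Hypothetical example: a table expected to refresh at least once an hour.
check_time = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
stale_load = check_time - timedelta(hours=3)
fresh_load = check_time - timedelta(minutes=10)

print(freshness_alert(stale_load, timedelta(hours=1), now=check_time))
print(freshness_alert(fresh_load, timedelta(hours=1), now=check_time))
```

Running a check like this on a schedule is what turns a quality problem from something discovered during a performance review into something flagged within the hour.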

Best Data Quality Management Tools

Choosing the right tool can make or break your data quality initiative.

The best platforms go beyond technical checks to surface issues that impact business outcomes, helping teams act faster and more effectively.

Below are five leading tools that support scalable, business-aware data quality management:

1. Sifflet

Sifflet provides business-aware data observability that connects technical data quality metrics to business impact, helping organizations prioritize quality issues based on their effect on customer experience and operational performance.

Sifflet’s automated data quality monitoring and impact analysis capabilities allow you to focus on quality issues that matter most to business outcomes.

Sifflet’s data quality monitoring is designed to deliver reliable, scalable, and customizable observability for modern data teams. With a mix of out-of-the-box monitors and flexible customization options, users can tailor monitoring to their specific business needs. The platform prioritizes smart, AI-driven alerting to reduce noise and eliminate alert fatigue, ensuring that teams are only notified when issues truly matter.

Sifflet empowers both technical and non-technical users to implement and manage data quality with ease.

Ready-to-use templates allow for fast deployment and immediate value, enabling checks at both the field and table levels.

Continuous anomaly detection powered by machine learning provides end-to-end lifecycle monitoring, while low-code/no-code interfaces and LLM-based setup give business users greater ownership. For organizations looking to scale efficiently, features like Data Quality as Code (DQaC) and AI-assisted optimization ensure monitoring stays accurate, efficient, and easy to maintain over time. The result is stronger data reliability, less manual effort, and faster responses to data issues.

2. Monte Carlo

Monte Carlo offers data observability focused on preventing data downtime through machine learning-based anomaly detection and automated monitoring across data pipelines.

Their platform helps engineering teams identify and resolve quality issues before they impact downstream applications and business processes.

Monte Carlo offers a data reliability dashboard that will help you assess the quality of your data.

3. Ataccama

Ataccama offers an AI-powered data quality monitoring solution.

The platform simplifies the process of defining and monitoring rules for key datasets and business KPIs, enabling teams to track data quality trends, catch anomalies, and ensure consistency across systems, particularly during data migrations.

Ataccama offers AI-powered rule creation.

Instead of writing data quality rules manually, users can simply provide a natural language prompt, and the system will generate the rules and even test data to validate them. Its AI agent further automates bulk rule creation and mapping, saving significant time and reducing the need for technical input.

For ongoing monitoring, Ataccama provides automated quality tracking and reporting, allowing you to observe data trends over time and receive alerts when quality declines.

Additionally, Ataccama includes reconciliation checks to validate data integrity during migrations. You can map and compare source and target tables easily, identifying mismatches early and ensuring synchronization between systems.

4. Informatica Data Quality

Informatica’s data quality solution is part of the Intelligent Data Management Cloud (IDMC) and delivers enterprise‑scale tools to ensure that your data is accurate, reliable, consistent, and fit for purpose.

The tool offers a data profiling engine that automates ongoing analysis to uncover quality gaps, anomalies, and structural issues across all data sources. Data engineers and analysts can define or customize data quality rules using a combination of prebuilt templates and no‑code/low‑code configurations that enforce accuracy, completeness, consistency, uniqueness, validity, and timeliness across datasets.

IDQ includes end-to-end monitoring, incident management, and observability to provide visibility into data pipeline health, quality trends over time, and real-time anomalies. Dashboards, scorecards, and reporting interfaces deliver insights that can be embedded into BI tools or shared with stakeholders for strategic alignment.

Informatica’s offering is especially valuable for large, complex environments.

5. Talend

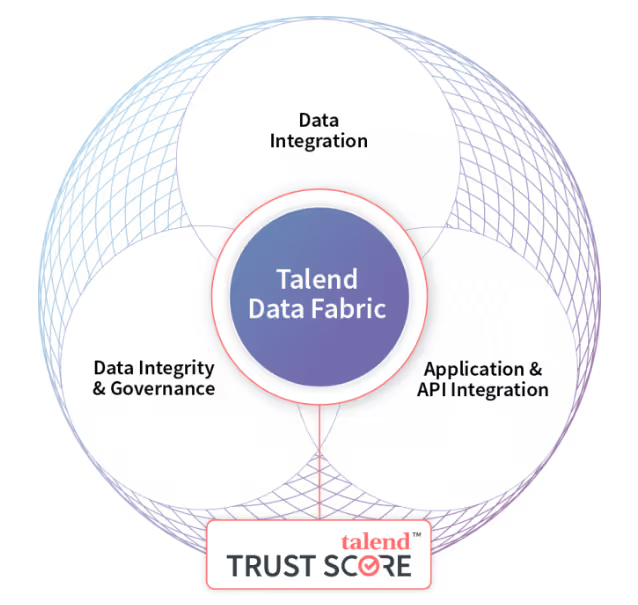

Talend’s Data Quality and Governance is part of the Talend Data Fabric platform, delivering an integrated, enterprise-grade solution for discovering, cleaning, monitoring, and governing data.

Talend’s automated data profiling analyzes structure, completeness, consistency, accuracy, and uniqueness across data sources. This insight feeds into the Talend Trust Score™, a continuous data health score that provides visibility into data trends, quality degradation, and regulatory compliance readiness.

The platform supports automated data quality checks, standardization, deduplication, and enrichment.

Talend enables self-service stewardship, where business users can collaborate using browser‑based tools to classify, document, and remediate data, embedding domain knowledge directly into quality workflows.

With governance, automation, and audit trail capabilities, Talend is particularly well-suited to organizations needing to scale data quality oversight across teams, regulatory boundaries, and evolving analytic needs.

Final thoughts…

Data quality is more than a box to check; it’s the foundation for speed, accuracy, and trust in every data-driven decision your organization makes.

By aligning technical rigor with business context, building the right roles and processes, and using tools that highlight what matters most, you can move from firefighting data issues to proactively enabling innovation.

Data quality management is crucial to maintaining high-quality data across the entire pipeline.

If you’re ready to start monitoring the quality of your data, try Sifflet today.
