A brief introduction to freshness

Detecting freshness anomalies in data is essential to ensure data reliability, support accurate decision-making, and improve the overall effectiveness of data-driven processes. It enables businesses and organizations to stay agile, adapt to rapidly changing conditions, and make informed choices based on the most current information available.

Freshness monitoring stands as one of the most fundamental and essential types of data quality monitoring. To ensure accurate and reliable data analysis, it becomes imperative to prioritize verifying the presence of complete and up-to-date data. Before tackling any other aspects of data quality, the currency and completeness of the data lay the foundation for sound decision-making and actionable insights.

Missing data refers to the absence of values in a dataset for certain attributes or records. It can happen due to various reasons, such as data collection errors, technical issues during data transfer, or intentional data omissions. Missing data can lead to biased analyses and incomplete insights, as important information may be overlooked or underrepresented. Missing data can take the form of outdated data being repeated and that’s something we want to be sure to detect as well.

In many domains, decisions are made based on insights derived from data analysis. If the data is stale or outdated, the decisions made using that data can be flawed, leading to potential negative consequences for businesses or organizations.

A data freshness issue can negatively impact your business in multiple ways:

- Model performance: In machine learning and AI applications, the performance of models heavily depends on the quality and freshness of the data used to train and update them. Stale or inconsistent data can negatively impact model accuracy and generalization.

- Data integrity: Maintaining data freshness helps ensure data integrity and prevents the propagation of errors. As data gets updated and modified, detecting anomalies in freshness can help identify potential data corruption or unauthorized access.

- Compliance and regulatory requirements: In some industries, like finance and healthcare, regulatory authorities impose strict guidelines on data handling and reporting. Ensuring data freshness is essential to comply with these regulations and avoid penalties.

- Customer experience: For businesses dealing with customer information, having fresh data can lead to better customer experiences. For example, if a customer's contact information is outdated, it can lead to failed communications and bad customer experience.

- Efficient resource allocation: Freshness anomaly detection can help organizations allocate resources more efficiently. For example, in inventory management, having fresh data about stock levels enables better decisions on restocking.

Sifflet’s Freshness Monitor

Sifflet offers a Freshness Monitor that can be linked to any of your data assets.

Flexibility in data granularity

Sifflet provides three modes of time aggregation to suit your needs. You can choose the frequency that best fits your use case:

- Daily aggregation: One observation per day.

- Hourly aggregation: One observation per hour.

- Intra-hourly aggregation: In this mode, data is rounded up to 10, 15, 20, or 30 minutes. For example, if you choose a 30-minute granularity, we create two observations per hour: one between minute 0 and minute 30 and another one for the rest of the hour.

This level of customization empowers users to adapt their monitoring strategy by setting the time granularity that best suits their needs.

Different companies may be affected by delays in data arrival to varying extents. For instance, e-commerce companies that operate in a time-sensitive environment need to process orders and shipments quickly. Even a 1-hour delay in data arrival can have serious consequences for them.

On the other hand, companies that primarily use data for internal decisions and strategy may not be as concerned about data freshness. For them, it might be sufficient to ensure that data arrives at some point during the day, and a minor delay of 20 minutes may not warrant an alert.

At Sifflet, we recognize these differences in data urgency, and our Freshness Monitor allows you to set up alerts based on the specific impact levels that matter most to your business.

Two modes: static and dynamic

Sifflet provides two modes of monitoring: static and dynamic.

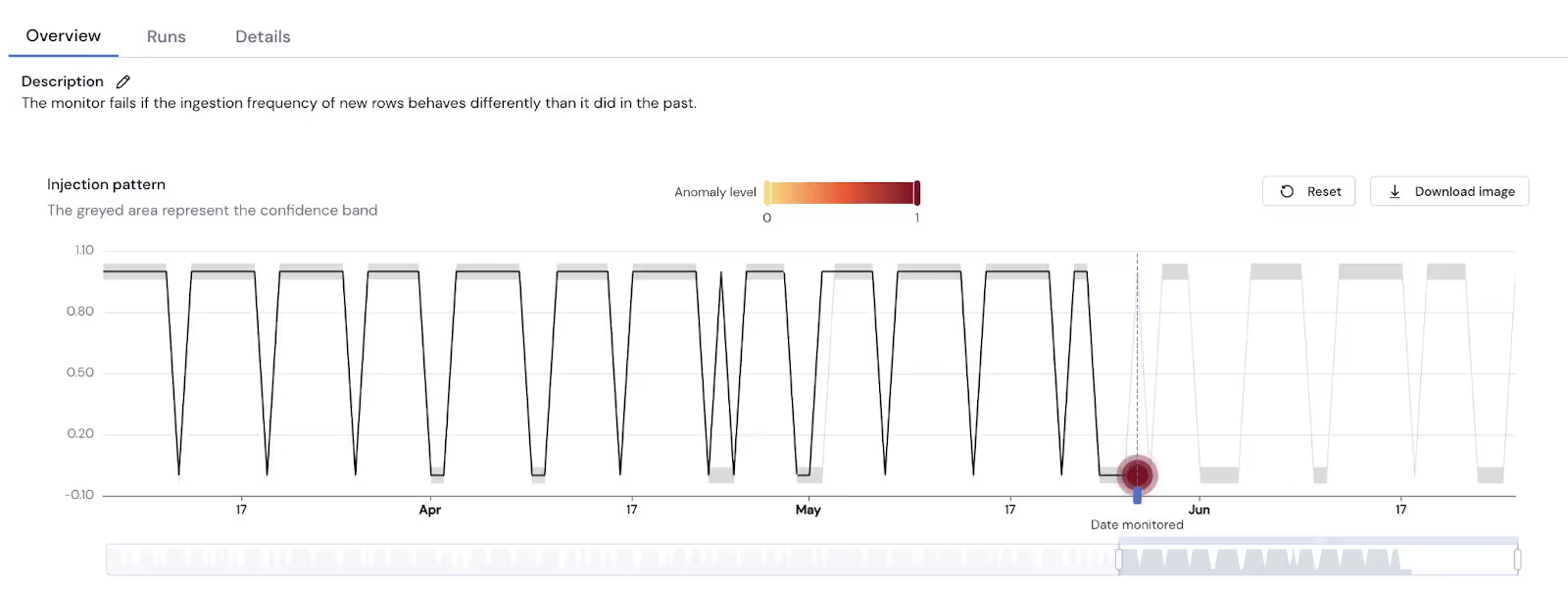

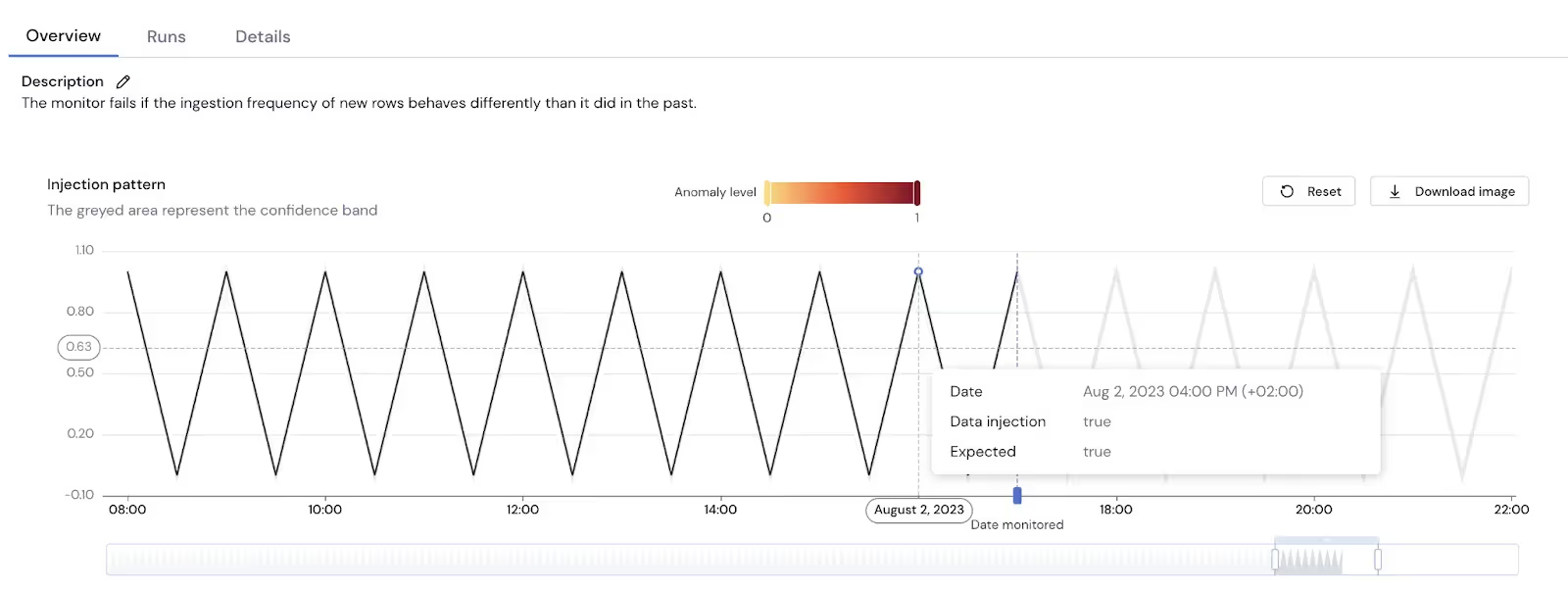

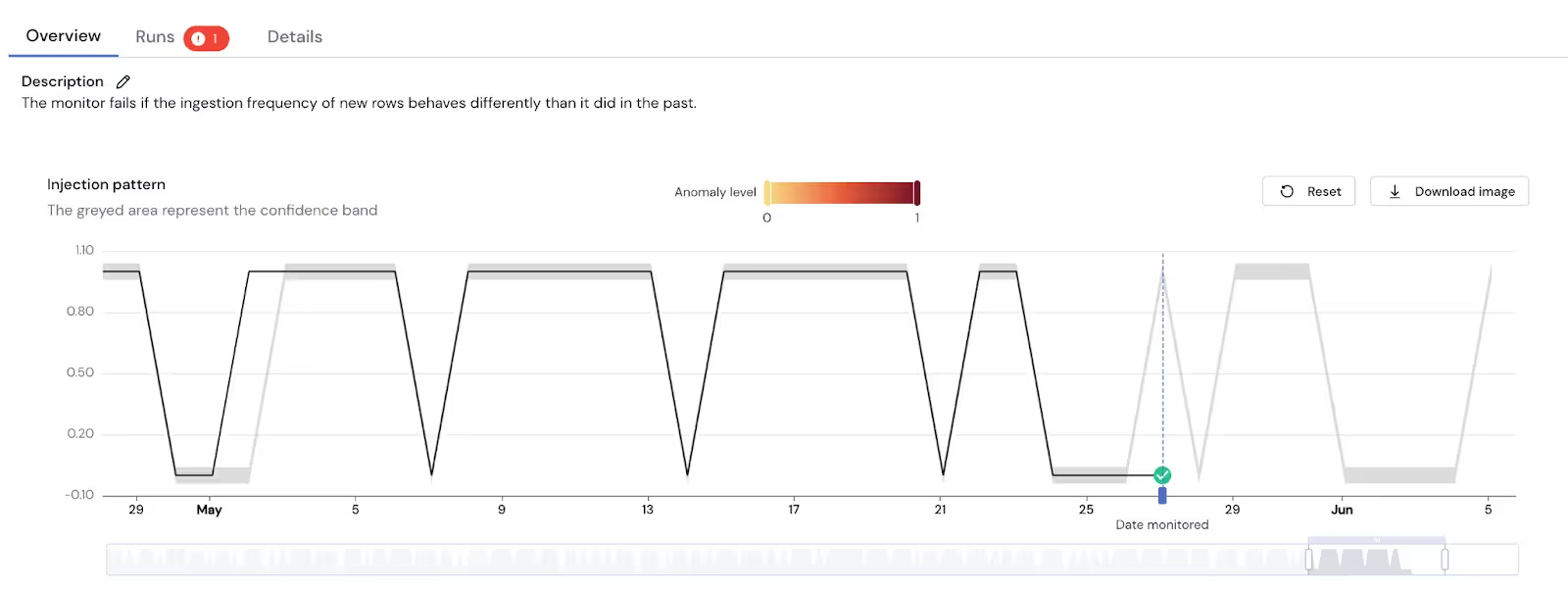

Dynamic Mode: In the dynamic mode, Sifflet automatically detects a data injection pattern based on your historical data arrival times. For example, if you have a daily pipeline running at 6:00 AM and 2:00 PM, the freshness monitor will recognize this pattern and anticipate data only at these two specific times of the day.

To achieve this, Sifflet has developed an in-house model specifically designed to understand your data pattern of arrival. This model is continuously improved and re-trained at every run, utilizing the historical arrival times of data freshness. Using this knowledge, the model predicts whether data should be expected on a given day, at a specific hour, or within a particular time slot.

By leveraging our dynamic monitoring mode, you can be confident that our freshness monitor will raise alerts only when necessary, avoiding unnecessary notifications and allowing you to focus on critical data-related issues that truly require your attention

With the dynamic mode, our users can easily scale their data quality checks and promptly initiate freshness monitoring. This mode empowers users to effortlessly monitor thousands of data assets without the need for meticulous selection of tables or scheduling checks based on update frequencies.

Static Mode: In the static mode Sifflet ensures that your data is always fresh at any given run. If, during the last slot, data has not arrived as expected, Sifflet promptly raises an alert. The static mode proves valuable for users seeking precise control over alerting triggers, as it allows them to receive instant notifications when their expectations are not met.

Choose your sensitivity

In the context of machine learning predictions, sensitivity refers to the level of strictness or tolerance with which we interpret the model's confidence in its predictions. Specifically, it determines the threshold at which we consider a prediction as anomalous or worthy of raising an alert.

To choose the appropriate sensitivity, one needs to consider the historical performance and reliability of the model, as well as the potential consequences of false positives and false negatives in the predictions.

Here's the technical explanation:

In the domain of Machine Learning predictions, confidence levels are often associated with the likelihood of a particular event or outcome occurring. For instance, a model predicting the arrival of data on Friday at 10:00 AM may assign a high probability, say 99.5%, if historical data consistently supports this timing.

On the other hand, if there have been occasional discrepancies in data arriving at exactly 10:00 AM on Fridays, the model might assign a lower probability, such as 80%, to account for this variability.

The sensitivity of the model refers to the degree of strictness applied to the prediction process. In other words, it determines how cautious or alert we want the model to be in identifying potential anomalies. A higher sensitivity indicates a more stringent approach, where even predictions with lower confidence levels, such as 80%, will trigger alerts. This cautious stance aims to capture even minor deviations from expected behavior.

Conversely, a lower sensitivity means a more lenient approach. In this case, the model would require a higher confidence level to raise an alert, say 95% or more. This approach acknowledges that some deviations in the data's historical pattern may not necessarily indicate anomalies, and thus, the model exercises caution before triggering alerts.

However, it is crucial to consider the context and implications of the model's past misses. It is possible that these discrepancies were due to internal data anomalies rather than actual shifts in the underlying pattern. In such cases, reassessing the sensitivity and potentially recalibrating the model may be necessary to improve its overall performance.

How to enrich the machine with human knowledge?

To enrich the machine with human knowledge and improve its performance, we have implemented a functionality that allows users to provide feedback on the model's predictions. This feedback can be in the form of labeling data points as either errors, false positives, or false negatives.

When a user labels a data point from the past, say last Friday at 10:00 am, as a known error, it imparts valuable information to the model. This information indicates that the user expected data to arrive at that specific time, but the model's prediction was incorrect. To incorporate this knowledge, the data point is reintroduced into the training process, and the model is explicitly re-trained on these labeled points. By doing so, we reinforce the model's understanding of specific patterns and correct any misinterpretations.

Continuous scan

Now that the monitoring system is fully configured and operational, the next consideration is how to schedule the monitoring effectively. The scheduling process is essential to ensure timely detection of anomalies without overwhelming the data-personas with the burden of constantly monitoring pipelines.

To address this concern, we have introduced the concept of "Continuous scan." This approach allows the monitoring system to scan for data arrivals (or non-arrivals) since the last monitoring run.

The monitor automatically scans for data events since its last execution, ensuring that no data anomalies are missed.

By adopting the Continuous scan mode, data-consumers can have peace of mind, knowing that the monitoring system is continuously vigilant and up-to-date with the latest data arrivals. This feature eliminates the need for users to keep track of specific monitoring schedules and guarantees comprehensive coverage of the data streams.

Conclusion

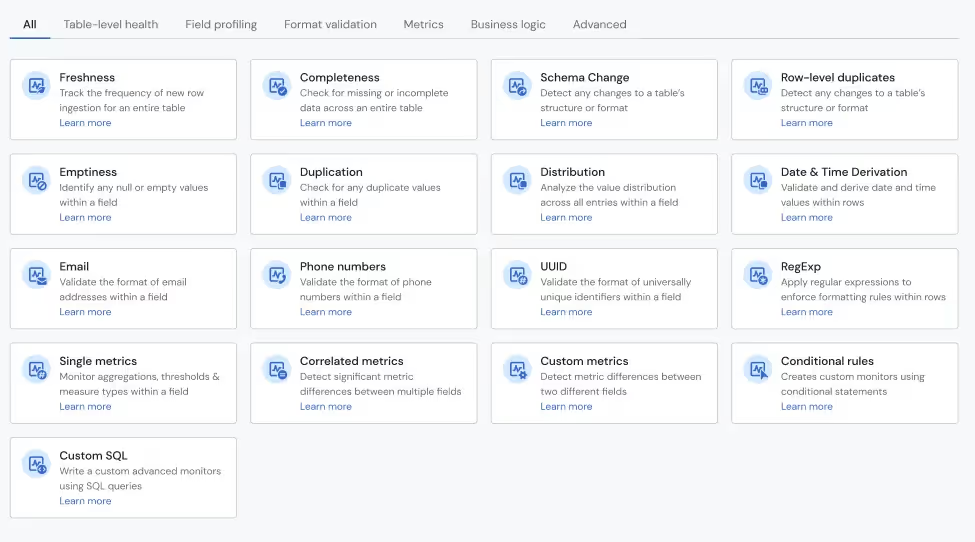

Effective monitoring of missing data is an indispensable aspect of any data-driven business. To meet this need, we have meticulously crafted our freshness monitor, enabling users to effortlessly oversee all their data assets at scale, effortlessly and automatically. By simply creating the monitor, our users can rest assured that missing data will be continuously detected, prompting timely alert signals to keep them informed and in control.

Our freshness monitor serves as the first cornerstone among a plethora of monitoring possibilities we offer our users. Beyond this initial step, they gain access to a diverse range of monitoring set-ups tailored to their unique requirements. From global comprehensive monitoring (like data-assets completeness monitoring) to business-specific checks (like tracking expected revenue correlated with observed revenues over time) we provide a suite of out-of-the-box monitors to serve all data teams, technical and non-technical, monitoring their data quality based on their context, designed to empower our users with actionable insights and data confidence.

-p-500.png)