Finding your data is one thing, but do you fully trust the information you use?

Thanks to its high user accessibility and scalability, the modern data stack can quickly help organizations meet their growing data needs. On top of this, the modern data stack lowers the technical complexity, enabling data analysts and business users to use the available data. Powerful tools exist to deal with every stage of the pipeline. Think of Snowflake and Firebolt for data warehousing, Fivetran, and Airbyte for data integration, dbt for data transformation, etc. However, the modern data stack is not as agile when it comes to data governance and giving context to data. This is now changing. Recently we have seen an increasing interest in metadata solutions, with the emergence of tools as fast and scalable as the rest of the modern data stack.

But let's start from the beginning. What are metadata management and data discovery? What's their importance within companies? What are the main metadata management tools that are currently being used? Let's dive into it.

A brief history of metadata management: The prefix "meta" comes from Ancient Greek and is now commonly used in English as "about the thing itself". Metadata can then be defined as data that provides information about other data, generally a dataset. In other words, metadata is data that has the purpose of defining and describing the data object it is linked to. Examples of metadata are titles and descriptions, tags and categories, information on who created or modified the data, who can access or update the data, etc.

Different types of metadata

Organizations deal with different types of metadata. These are the most common types:

- Descriptive metadata gives information about a data object, like title, author, keywords, etc. This type of metadata is most commonly used for data discovery and identification.

- Structural metadata is information about the structure of the data object. This metadata informs the data users about how resources and files are organized.

- Administrative metadata provides more technical information regarding a data object. It provides data users with administrative information like the owner of a particular data asset, how and by whom the data can be used, etc. This type of metadata is used to manage data and adhere to usage rights and intellectual property regulations.

Adopting a metadata strategy

Do you really need a metadata strategy? The short answer is yes.

People tend to assume that metadata is not as important as digital data. What they fail to understand is that metadata is essential for making the most of your data. In fact, when appropriately handled, metadata facilitates the search, retrieval, and discovery of relevant information, enabling users to make the most out of their data. These are some use cases of metadata in business:

- Classification: metadata plays an essential role in data organization.

- Findability: metadata can improve search and retrieval processes, enabling data users to quickly find what they are looking for.

- Information security: metadata can be used to control data distribution by validating access and edit rights.

What is Metadata Management?

As data continues to grow exponentially and become more distributed, metadata management now plays a central and strategic role in businesses. Metadata management is crucial to building data-driven companies. So, metadata management can be defined as the practice of managing the data about data. In more practical words, this practice allows you to give meaning and assign descriptions to the information assets within organizations, unlocking value by improving data usability and findability. As a result, metadata provides the context required to understand and govern your systems, data, and business.

Why should organizations want to document and manage their metadata?

Currently, most organizations have an information architecture that resembles an overpacked and disorganized library. This issue creates two main challenges:

- Lack of data findability

- Lack of data usability

In other words, organizations' overwhelming amount of data makes it increasingly challenging for data users to find what they are looking for and make the most out of their data. And this problem is expected to grow within organizations that are collecting increasingly high amounts of data to improve their business processes. However, mere data collection without applying good data management practices won't bring the advantages that companies expect from data.

How can good metadata management practices change your life for the better?

By now, you've probably understood the importance of metadata. But how can it practically improve your organization? Metadata tells you what data you have, where it comes from, what it means, and its relationship to other data you have. This helps organizations in 4 main areas:

- Data discovery & trust

- Data governance

- Data quality

- Data maintenance

A robust metadata management strategy ensures that an organization's data is high-quality, consistent, and accurate across various systems. Organizations using a comprehensive metadata management strategy are more likely to make business decisions based on high-quality data than those with no metadata management solutions.

So, to tackle the challenge of dealing with metadata, organizations adopted data catalogs.

A data catalog can be defined as a detailed inventory of all data assets within an organization and their metadata. Data catalogs are designed to enable data professionals to quickly find and use the data they need for any business purpose. By centralizing and unifying data and metadata within organizations, data catalogs democratize data access, making an increasing number of roles able to make the most out of data - from IT teams to any business function. As a result, data catalogs have become the standard for metadata management.

The objective of a data catalog is to get all the relevant information to data users so that they can understand what they are monitoring, for what kind of purposes, the dependencies between data assets and the context of the creation of the data is.

The evolution of the data catalog

Similarly to data, how we think about metadata has evolved over time. And over the past three decades, there have been different phases in the development of the data catalog.

The 1990s - 2000s

Although the idea of metadata has been around for centuries, the modern notion of metadata dates back to the late 1990s - when the internet was widely embraced, and organizations started organizing their newly collected data. At that time, companies like Informatica and Talend emerged and became the first leaders in metadata management by creating the first "inventories of data".

The 2010s

By the 2010s, data had become essential, and its importance started being recognized beyond the IT team. This led to the adoption of Data Stewardship - the idea that organizations need a dedicated group of people handling metadata. On top of this, organizations realized that simply having an inventory of their data wasn't enough anymore and that more business context was needed within data catalogs. This led to the creation of the second generation of data catalogs, like Collibra and Alation. But this didn't come without challenges. These catalogs were complex and had high requirements, so they were tough to set up and maintain.

The future of the data catalog: Data discovery

Although the second generation of data catalogs brought forward a lot of innovation regarding how we think about metadata, they still fall short of specific characteristics. For us, the future of metadata management should revolve around the following aspects:

- Scalability: Unstructured data is becoming more and more common. This data needs to undergo several transformations before being useful, making it more difficult to catalog.

- Automation: Data catalogs often rely on data teams to manually update the catalog as data assets change. This is extremely time-consuming for data engineers and analysts who could be working on more important projects.

Metadata management tools need to enable DataOps, support distributed architectures, and scale as the demand for data and knowledge increases within the organization. Data discovery detects patterns and outliers by visually navigating data or applying guided advanced analytics. Data discovery offers businesses a way to make their data clean, easily understandable, and user-friendly, giving everyone in the organization the possibility to use the data.

What are the benefits of data discovery?

Organizations benefit from implementing Data Discovery in many ways:

- A better understanding of enterprise data, where it is, and who can access it

- Knowing what data is sensitive and where it can be found leads to better compliance management, which allows businesses to implement the appropriate security measures to prevent the loss of sensitive data and avoid potentially damaging consequences

- The ability to dig deeper into the data allows companies to unlock essential information for decision-makers, leading to business innovation

What are the main characteristics of data discovery tools?

Broadly, data discovery tools have the following characteristics:

- Self-service discovery and automation: Data Discovery enables data teams to use their data without the need for dedicated support from the data engineering team, therefore removing the silos between the different steps of the data pipeline. This makes accessing and understanding the data easier, leading to increased data adoption among teams.

- End-to-end visibility through data lineage: Data Discovery is facilitated by field-level lineage, which maps upstream and downstream dependencies between data assets. Lineage enables teams to find and access the correct information while also being in the know when data pipelines break.

- Scalability: Data Discovery leverages Machine Learning to ensure that, as data changes, so does your understanding of the data.

- Data reliability: Data Discovery enables data teams to truly understand the state of their data and how it is being used across domains and stages of the pipeline, allowing them to trust the data's reliability fully.

What to look for in your data catalog/data discovery tool

If you have arrived this far in this blog, it means that you are probably interested in trying out some data catalog or data discovery solutions. Here I'll try to summarize the features you need to look for in a data catalog.

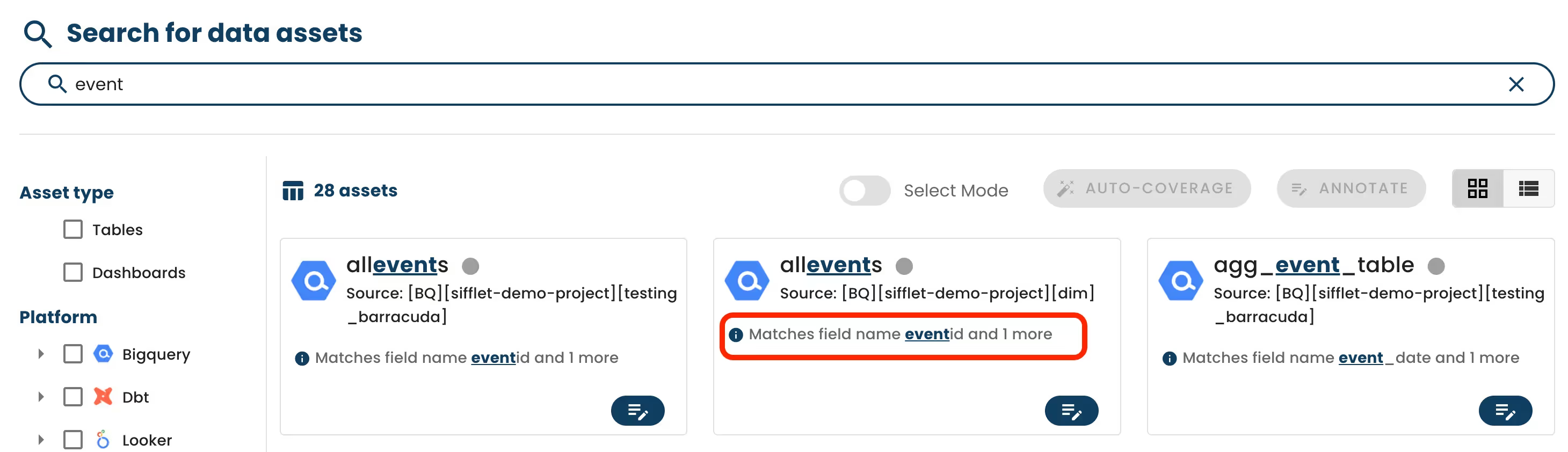

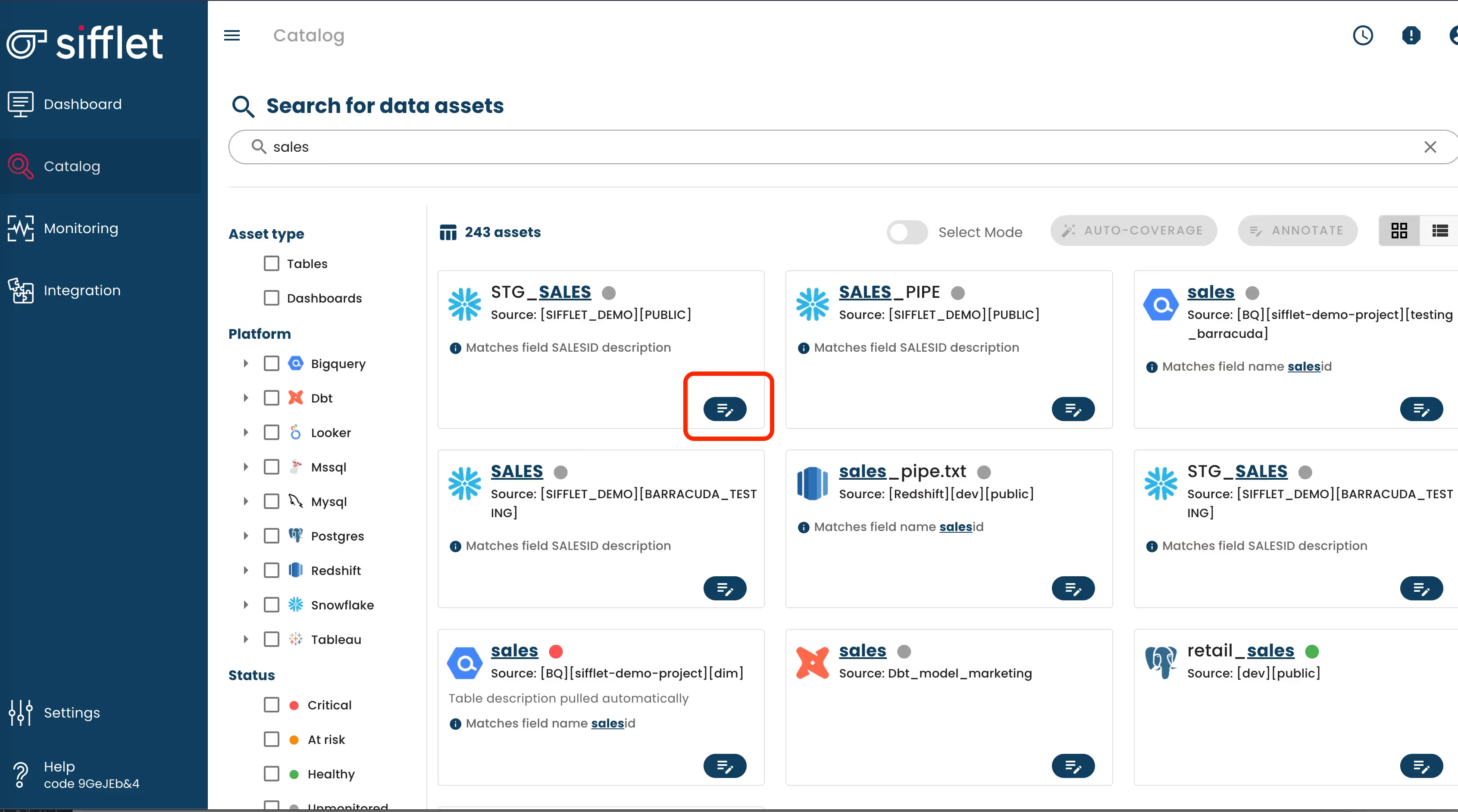

Advanced search

On the data catalog, you can find data assets based on their metadata. Here is an example. Sifflet's advanced search prioritizes the most accurate results based on your keywords.

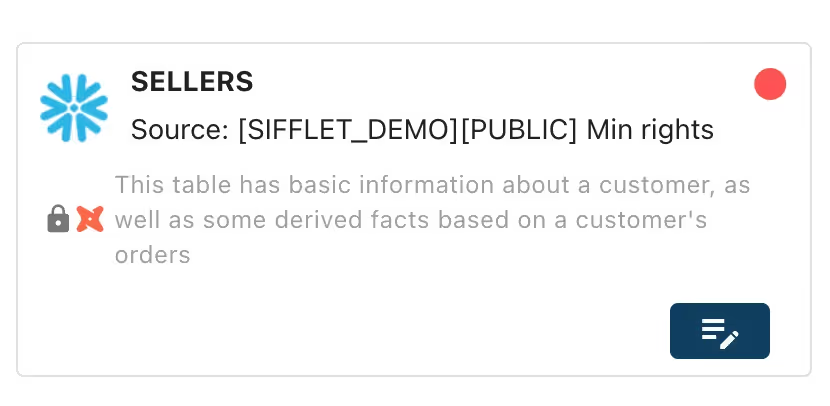

Table status

In your data catalog, you should also be able to see the health status of the table you are working with. On Sifflet, the dot next to the table name represents the quality rules' status. In this case, the dot is red, meaning you should not trust the data on that table.

Having access to the table status on the data catalog allows you to not only find the data you need quickly but also to trust it and immediately make use of it.

Possibility to enrich your assets

In your data catalog, you should be able to enrich your data assets by adding descriptions and tags.

The need for an integrated solution to enable discovery and ensure trust

You don't want to find yourself questioning the data you are using to make strategic business decisions. To have this kind of trust, your team will need to know that the information they are using is reliable at all times. It's for this reason that your data catalog/discovery tool on its own may not be enough. To be able to unlock the trust you need in your data, you will need to implement some form of data quality monitoring or data observability. Read more on data observability on this blog. Tools like Sifflet provide data observability from data ingestion to consumption and a built-in data catalog which allows you to quickly understand the health status of the tables you are using.

Conclusion

Data is exponentially growing within organizations, and people have other priorities than keeping a track record of metadata. Locating a table exactly when you need it is crucial for business, but tracking every digital asset in your cloud data warehouse is not an activity worth dedicating 100% of your time. This is when it becomes crucial to invest in metadata management tools that can automatically collect metadata about your datasets.

To sum up, investing in a data catalog is a great first step, helping you with the different challenges I have mentioned above, as well as improving how your data team works. However, you can't call your data catalog a single source of truth unless you include some data quality monitoring or data observability initiatives to ensure that you can trust your data assets.

Want to learn more about our information-rich data catalog? Reach out for a demo.

%2520copy%2520(3).avif)

-p-500.png)