Data schema is the web that structures your data within a database or pipeline.

In other words, it sets the foundation of your data system and defined what you can expect from each dataset.

Schema is an essential pillar of data observability, along with lineage, volume, distribution, and freshness.

If schema breaks and you don’t notice, you could impact everything from dashboards to machine learning models.

What Is a Data Schema?

A data schema is the blueprint that defines how data is organized, structured, and related in a database or pipeline.

It tells your systems and your teams what to expect from each dataset and what components build that specific piece of information.

Take a healthcare analytics platform, for example.

Your schema might define that patient records always contain fields like patient_id (integer), admission_date (timestamp), diagnosis_code (string), and treatment_cost (decimal).

This structure ensures that everyone, from data engineers to business analysts, knows exactly what to expect and what to look for when working with patient data.

Without a clear schema, your data becomes unpredictable.

One day treatment_cost might be stored as dollars, the next as cents. Or a critical field like patient_id might suddenly allow null values, breaking your reporting pipeline.

Schema provides the guardrails that keep your data consistent and reliable.

Data Schema vs. Database Schema

The distinction between data schema and database schema often gets blurred.

Data schema is the structure that is defined across your entire data ecosystem, including pipelines, APIs, ingestion tools, and reverse ETL.

On the other hand, database schema is more specific than that.

Database schema only defines the structure within a specific system (like PostgreSQL). This could be applies to tables, columns, relationships, and constraints.

For example, your data schema defines the universal contract for how customer, product, or transaction data should look across the organization.

Each database schema then implements that contract inside a particular system. For example, how your CRM structures customer tables, fields, and relationships.

In another example, a fintech company’s data schema might define how customer transactions move from mobile app to data warehouse. The database schema, meanwhile, defines how those transactions are stored, say, in a transactions table in BigQuery with foreign keys pointing to a customers table.

Both are critical but one governs the journey, and the other governs the destination.

The 5 Types of Data Schema (+ Examples)

Schema isn’t one-size-fits-all. Here are five types every data team should understand:

1. Physical schema

This is how data is stored on disk: file formats, compression, partitioning.

Example: A media company stores video metadata in Parquet format, partitioned by upload date, compressed with GZIP to optimize cost and query speed.

2. Logical schema

The human-readable structure of tables and relationships.

Example: A logistics provider uses a logical schema to relate shipments, customers, and tracking_events, making it easier to model real-world relationships.

3. Evolving schema

Schemas that change over time; this is common in ELT pipelines.

Example: A SaaS product adds session_duration and feature_flag fields to their events table as product analytics matures.

4. Contractual schema (API)

Machine-to-machine schema, like JSON or GraphQL contracts.

Example: An IoT device sends temperature and humidity data via API. The schema ensures every payload includes valid fields in the correct format.

5. Metadata Schema

Schemas that describe other schemas, frequently used in data governance and data observability.

Example: Tools like dbt and OpenMetadata use metadata schemas to track lineage, column descriptions, and test results.

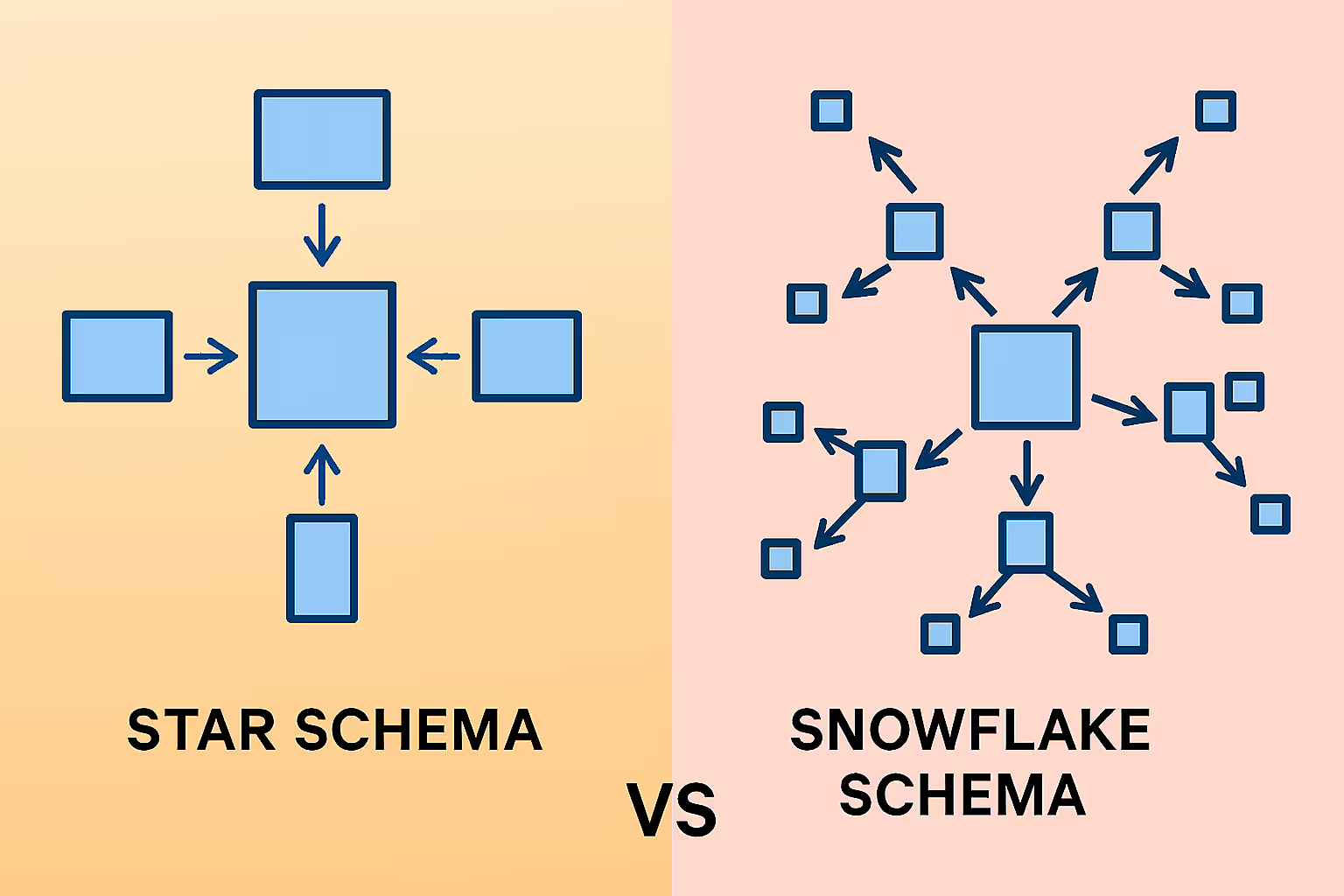

Star Schema vs. Snowflake Schema

Aside from the 5 different types of schema, there are also 2 different approaches to building the design and structure of your schema.

A basic one, the star schema, and a more intricate web, the snowflake schema.

Star Schema arranges data around a central fact table (like sales_transactions) surrounded by dimension tables (customers, products, time_periods). It's like having a main hub with spokes radiating outward.

Several dimensions all centre around one larger dimension.

Snowflake Schema extends those dimension tables further, creating a more complex but storage-efficient structure. Dimension tables are broken down into sub-dimensions, creating a snowflake-like pattern.

So, while there is one focal point, other dimensions are also developed.

For a healthcare system analyzing patient outcomes:

- Star schema might have a central

patient_visitsfact table connected directly topatients,doctors,treatments, andhospitalsdimension tables - Snowflake schema would further normalize by breaking

hospitalsintohospital_details,hospital_locations, andhospital_departmentstables

Star schema wins on simplicity and query performance.

Snowflake schema wins on storage efficiency and data integrity.

You’ll want to choose based on your priorities, faster queries or optimized storage.

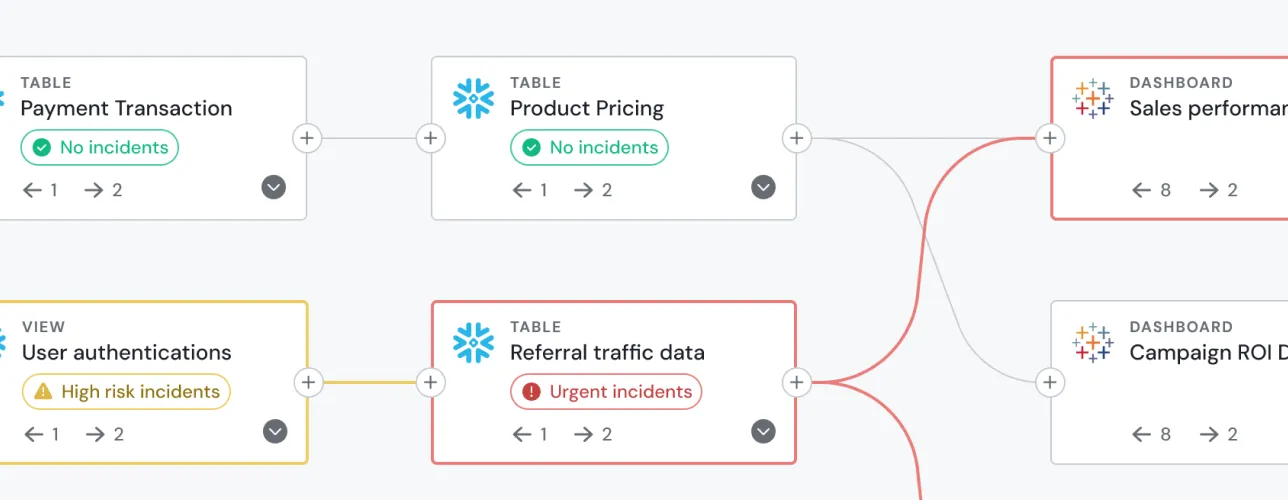

Why Schema Monitoring Matters in Data Observability

Schema changes are among the most disruptive events in data systems.

When a source system suddenly changes a field from order_total to total_amount, every downstream dashboard, model, and automated process that depends on that field can break.

Consider a real estate analytics platform that tracks property listings.

If the source system changes listing_price from an integer (dollars) to a float (dollars and cents), existing calculations might truncate decimal values, making million-dollar properties appear significantly cheaper in reports.

Schema monitoring catches these changes automatically:

- Detects drift when new columns appear or existing ones change type

- Alerts team members before broken data reaches critical business systems

- Tracks evolution over time, helping other teams understand how data structures change

- Enables proactive fixes rather than reactive fire-fighting

Tools like Sifflet integrate schema monitoring directly into your observability stack, providing real-time alerts when schema changes occur across your dbt pipelines, ingestion tools like Fivetran, or reverse ETL processes.

How to Design a Data Schema That Doesn't Break

You can design a effective a lasting data schema following these 4 steps:

Step 1: Start with the Business Use Case

Don't design your schema in a vacuum.

If you're building analytics for a subscription software company, start with questions like: "What metrics do we need to track customer churn?" or "How do we measure feature adoption?"

Let business requirements drive your schema design.

Step 2: Align on Naming Conventions and Data Types

Establish clear standards early. Will customer identifiers be customer_id, cust_id, or customer_key?

Will monetary values use DECIMAL(10,2) or FLOAT?

Step 3: Plan for Schema Evolution

Your schema will change, so plan for it by building flexibility into your design:

- Use nullable columns for new fields

- Version your schema changes

- Implement backward compatibility where possible

- Document all modifications

Step 4: Validate with Contract Testing and Observability

Test your schema assumptions continuously.

Modern data observability tools can automatically detect when incoming data doesn't match expected schemas, alerting you before broken data propagates downstream.

Common Scenarios of Data Schema

Data schema can affect your entire organization. These are 3 typical scenarios within a business and how schema works:

Marketing Attribution

A B2B software company tracks leads across multiple touchpoints, including website visits, email campaigns, webinar attendance, and sales calls. The schema must accommodate:

- Event tracking for new marketing channels

- Customer identification across systems

- Attribution modelling that links events to revenue

When the marketing team launches a new channel (say, podcast advertising), the schema needs to accommodate new event types without breaking existing attribution models.

Compliance Issues

Financial institutions face strict regulatory requirements around data structure and retention. Their schemas must:

- Enforce data types that comply with regulations (ex. precise decimal handling for transactions)

- Keep audit trails showing when and how data structure changed

- Support long-term retention without schema conflicts across years of data

Real-Time Analytics

A logistics company needs real-time visibility into shipment status. Their schema must balance:

- Low-latency updates for operational dashboards

- Analytical depth for strategic planning

- System integration across warehouse management, transport, and customer service

Schema As the Backbone of Reliable Data

A well-structured schema isn't just a technical asset, it's a shared language between data producers and consumers.

When your schema changes silently, trust disappears quickly as dashboards, model inputs or alerts are lose their accuracy.

Observability ensures your team always knows what changed, when, and why. It transforms schema from a brittle, hidden dependency into a transparent, managed asset that enables rather than constrains your data operations.

Want to monitor schema changes across your stack?

Sifflet provides automated schema drift detection and alerting across your entire data ecosystem.*

%2520copy%2520(3).avif)

-p-500.png)