From customer interactions, IoT sensors, and financial systems to marketing platforms and operational databases, companies collect information from dozens of sources.

In spite of having access to so much information, many still struggle to extract meaningful insights because raw data in its native form is rarely ready for analytics.

Data transformation converts disparate information into a unified, reliable format that can support strategic decision-making and business intelligence.

What Is Data Transformation?

Data transformation is the process of standardizing formats, enriching context and improving data quality.

It involves converting data from one format, structure or schema into another to make it suitable for analysis, reporting, or operational use.

Much like a document translator converts content between languages while preserving meaning, data transformation converts information between systems while maintaining business value. The process can range from simple format conversions to complex multi-step operations that reshape entire datasets.

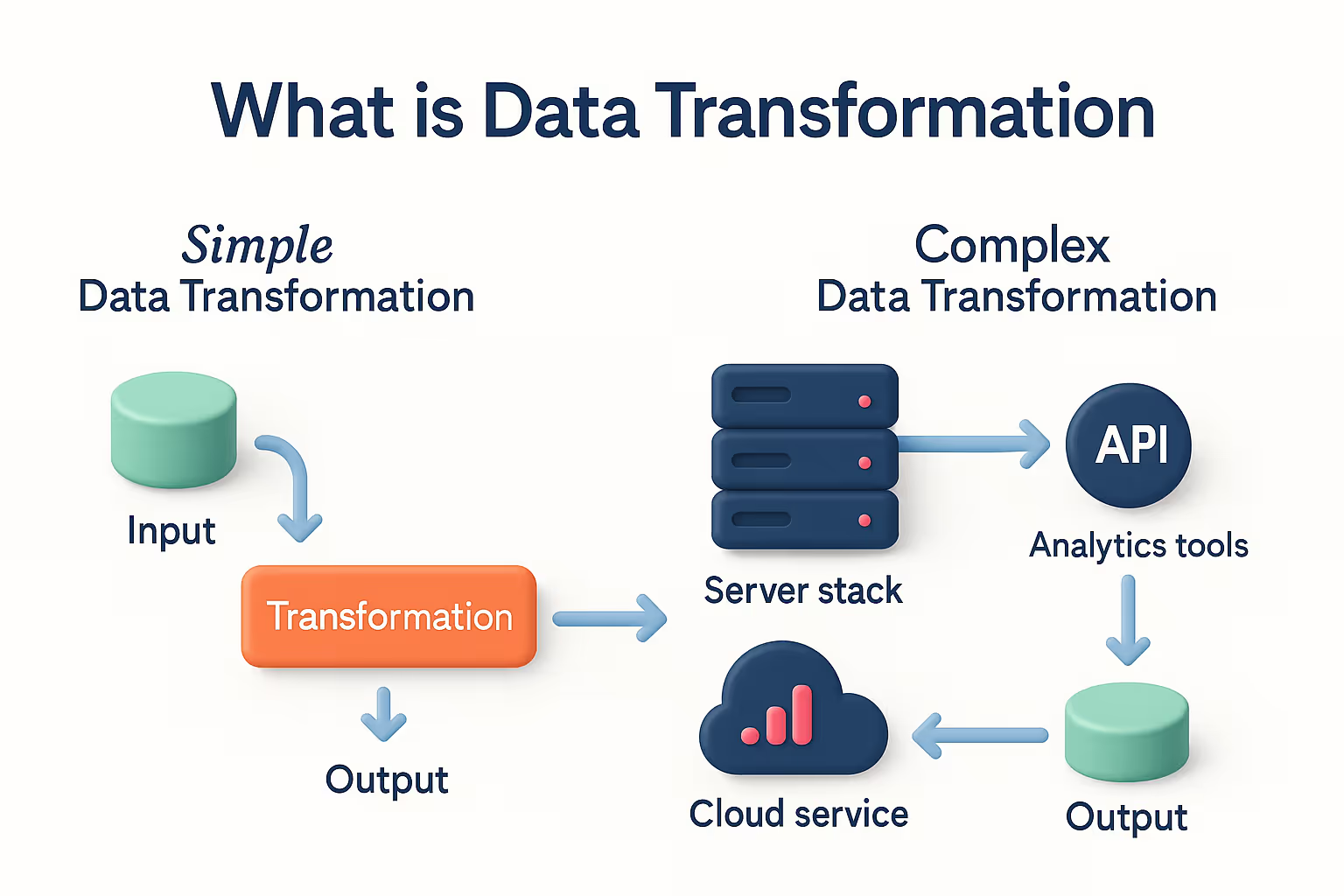

Broadly, there are two types of data transformation:

- Simple data transformation involves straightforward conversions like changing date formats from MM/DD/YYYY to YYYY-MM-DD, or converting currencies from local denominations to USD. These operations typically require minimal processing power and can be executed quickly.

- Complex data transformation encompasses sophisticated operations like merging customer data from multiple touchpoints, calculating rolling averages for financial metrics, or applying machine learning models to enrich datasets with predictive scores. These processes often involve multiple data sources and require substantial computational resources.
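To make the distinction concrete, here is a minimal Python sketch of one transformation from each category: a date format conversion (simple) and a rolling average (one small piece of what a complex transformation might include). The function names `to_iso_date` and `rolling_average` are illustrative, not taken from any particular tool.

```python
from datetime import datetime


def to_iso_date(us_date: str) -> str:
    """Simple transformation: convert MM/DD/YYYY to YYYY-MM-DD."""
    return datetime.strptime(us_date, "%m/%d/%Y").strftime("%Y-%m-%d")


def rolling_average(values: list[float], window: int) -> list[float]:
    """Trailing moving average; emits one value per full window."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


print(to_iso_date("03/07/2024"))
print(rolling_average([1.0, 2.0, 3.0, 4.0], window=2))
```

In a real pipeline, the rolling average would usually run inside a warehouse or a distributed engine rather than over an in-memory list.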

You can find common transformation examples in every industry.

A manufacturing company might normalize part numbers across different suppliers, ensuring consistent inventory tracking.

A financial services firm could merge transaction data from various payment processors, creating a unified view of customer spending patterns.

Healthcare organizations frequently transform diagnostic codes between different classification systems to enable cross-institutional research.


ETL vs ELT Data Transformation

There are two dominant approaches in data transformation architecture: ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform).

Knowing when to use each approach can dramatically impact project success and cost efficiency.

The choice between ETL vs ELT often depends on business context rather than technical preference.

Organizations with strict compliance requirements might prefer ETL's pre-validation approach, while companies prioritizing speed-to-insight often choose ELT for its flexibility and performance advantages.

ETL works best when dealing with well-structured data sources, regulatory compliance requirements, or legacy systems with limited processing capacity.

For example, a regional bank processing daily transaction batches might choose ETL to ensure data quality before loading sensitive financial information into their data warehouse.

ELT excels in cloud-native environments with large data volumes and diverse source types.

A telecommunications company analyzing network performance across millions of devices would benefit from ELT's ability to handle massive datasets and perform transformations using the warehouse's distributed computing power.
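The difference in ordering can be sketched with Python's built-in `sqlite3` module standing in for a warehouse. This is only an illustration under simplifying assumptions: the table names, the sample rows, and the very loose `GLOB` validity check are all made up for the example.

```python
import sqlite3

# Hypothetical raw extract: amounts arrive as untrusted text.
RAW_ROWS = [("2024-01-05", " 19.50 "), ("2024-01-06", "n/a"), ("2024-01-07", "5.25")]


def is_valid_amount(text: str) -> bool:
    try:
        float(text)
        return True
    except ValueError:
        return False


def run_etl(conn: sqlite3.Connection, rows) -> None:
    """ETL: validate and convert in application code, then load only clean rows."""
    conn.execute("CREATE TABLE sales_etl (day TEXT, amount REAL)")
    clean = [(day, float(amt)) for day, amt in rows if is_valid_amount(amt)]
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", clean)


def run_elt(conn: sqlite3.Connection, rows) -> None:
    """ELT: load raw text first, then transform with SQL inside the database."""
    conn.execute("CREATE TABLE sales_raw (day TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", rows)
    # Deliberately loose numeric check, good enough for this sample data only.
    conn.execute(
        """CREATE TABLE sales_elt AS
           SELECT day, CAST(TRIM(amount) AS REAL) AS amount
           FROM sales_raw
           WHERE TRIM(amount) GLOB '[0-9]*.[0-9]*'"""
    )


conn = sqlite3.connect(":memory:")
run_etl(conn, RAW_ROWS)
run_elt(conn, RAW_ROWS)
print(conn.execute("SELECT * FROM sales_etl ORDER BY day").fetchall())
print(conn.execute("SELECT * FROM sales_elt ORDER BY day").fetchall())
```

Both paths end with the same clean table. The ELT path also keeps `sales_raw`, so a transformation can be rerun or changed later without extracting the data again.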

The Data Transformation Process

Successful data transformation follows a systematic approach that ensures quality, consistency, and business alignment.

This six-step process has been refined through decades of enterprise data management experience:

Step 1: Discovery

Discovery begins with understanding source data characteristics, business requirements, and target system specifications.

Data teams catalog available sources, assess data quality, and identify transformation requirements.

A pharmaceutical company launching a new drug trial would catalog the patient data formats used by each hospital, the applicable reporting rules, and the clinical analysis needs.

Step 2: Cleaning

Cleaning addresses data quality issues like missing values, duplicates, and inconsistencies.

This step often reveals systemic data collection problems that require upstream fixes.

Teams establish data quality rules and automated validation processes to maintain standards throughout the pipeline.
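As a rough illustration, a cleaning rule set for missing values and duplicates might look like the sketch below. The field names (`customer_id`, `email`) and the `clean_records` helper are assumptions made for the example. Rejected rows are kept with a reason so that upstream problems stay visible.

```python
def clean_records(records, required=("customer_id", "email")):
    """Trim strings, lowercase emails, and split rows into cleaned and rejected."""
    cleaned, rejected, seen = [], [], set()
    for rec in records:
        row = {k: v.strip() if isinstance(v, str) else v for k, v in rec.items()}
        if isinstance(row.get("email"), str):
            row["email"] = row["email"].lower()

        # Rule 1: required fields must be present and non-empty.
        missing = [f for f in required if row.get(f) in (None, "")]
        if missing:
            rejected.append((rec, "missing: " + ", ".join(missing)))
            continue

        # Rule 2: the combination of required fields must be unique.
        key = tuple(row[f] for f in required)
        if key in seen:
            rejected.append((rec, "duplicate"))
            continue

        seen.add(key)
        cleaned.append(row)
    return cleaned, rejected


cleaned, rejected = clean_records([
    {"customer_id": "1", "email": " A@Example.com "},
    {"customer_id": "1", "email": "a@example.com"},
    {"customer_id": "2", "email": ""},
])
print(cleaned)
print([reason for _, reason in rejected])
```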

Step 3: Mapping

Mapping defines the relationships between source and target data structures.

Business rules are translated into technical specifications, ensuring transformations align with analytical requirements.

This phase typically involves close collaboration between business stakeholders and technical teams.
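One lightweight way to record a mapping is a table that names each source field, its target field, and the conversion to apply. The sketch below assumes hypothetical source columns (`cust_nm`, `ord_amt`, `ord_dt`) and is meant only to show the shape of such a specification.

```python
# Hypothetical mapping: source field -> (target field, conversion function).
FIELD_MAP = {
    "cust_nm": ("customer_name", str.strip),
    "ord_amt": ("order_amount", float),
    "ord_dt": ("order_date", lambda s: s[:10]),  # keep the date part of a timestamp
}


def apply_mapping(source_row: dict, field_map: dict = FIELD_MAP) -> dict:
    """Build a target row from a source row according to the mapping."""
    target = {}
    for src, (dst, convert) in field_map.items():
        if src not in source_row:
            raise KeyError(f"source field missing: {src}")
        target[dst] = convert(source_row[src])
    return target


print(apply_mapping(
    {"cust_nm": " Ada ", "ord_amt": "12.50", "ord_dt": "2024-01-05T10:00:00"}
))
```

Keeping the mapping as data rather than scattered code makes it easier for business stakeholders to review.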

Step 4: Code generation

Code generation converts mapping specifications into executable transformation logic.

Most newer tools will automate this step, generating SQL, Python, or other code from visual interfaces. However, complex business logic often requires custom development.
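The idea behind code generation can be shown in a few lines. This sketch turns a list of (source expression, target column) pairs into a SQL `SELECT` statement. The `generate_select` helper and the column names are hypothetical, and the function assumes the mapping comes from a trusted specification, since it interpolates strings directly into SQL.

```python
def generate_select(source_table: str, mapping: list[tuple[str, str]]) -> str:
    """Render a SELECT statement from (source_expression, target_column) pairs."""
    if not mapping:
        raise ValueError("mapping must contain at least one column")
    columns = ",\n".join(f"    {expr} AS {name}" for expr, name in mapping)
    return f"SELECT\n{columns}\nFROM {source_table};"


sql = generate_select(
    "raw_orders",
    [("TRIM(cust_nm)", "customer_name"), ("CAST(ord_amt AS REAL)", "order_amount")],
)
print(sql)
```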

Step 5: Execution

Execution runs the transformation processes according to defined schedules and triggers.

This includes monitoring performance, handling errors, and ensuring data freshness meets business requirements.
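Schedulers and orchestrators normally handle this, but the core behavior is easy to sketch: run a step, time it, and retry when it fails. The `run_step` function below is an illustrative stand-in, not the API of any specific orchestration tool.

```python
import time


def run_step(name, func, max_attempts=3, delay_seconds=0.0):
    """Run one transformation step, timing each attempt and retrying on failure."""
    for attempt in range(1, max_attempts + 1):
        started = time.perf_counter()
        try:
            result = func()
        except Exception as exc:
            print(f"{name}: attempt {attempt} failed: {exc}")
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            time.sleep(delay_seconds)
        else:
            elapsed = time.perf_counter() - started
            print(f"{name}: succeeded in {elapsed:.3f}s on attempt {attempt}")
            return result


# Usage: a step that fails once before succeeding.
state = {"calls": 0}

def flaky_load():
    state["calls"] += 1
    if state["calls"] < 2:
        raise RuntimeError("temporary connection error")
    return "loaded"

print(run_step("load_orders", flaky_load))
```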

Step 6: Review

The final review validates transformation outputs against business expectations and data quality standards.

Continuous monitoring helps maintain accuracy and surfaces issues before they impact downstream analytics.

This process ties directly to data quality and observability practices. Without proper monitoring and validation, even well-designed transformations can produce misleading results that compromise business decisions.
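A review step often boils down to a handful of automated checks on the output. The sketch below assumes two simple expectations, an expected row count and a maximum share of null values per required field. Real checks would be driven by your own business rules.

```python
def validate_output(rows, expected_count, required_fields, max_null_rate=0.0):
    """Return a list of human-readable issues; an empty list means all checks passed."""
    issues = []
    if len(rows) != expected_count:
        issues.append(f"row count {len(rows)} != expected {expected_count}")
    for field in required_fields:
        nulls = sum(1 for row in rows if row.get(field) is None)
        rate = nulls / len(rows) if rows else 0.0
        if rate > max_null_rate:
            issues.append(f"{field}: null rate {rate:.0%} exceeds {max_null_rate:.0%}")
    return issues


rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": None}]
print(validate_output(rows, expected_count=2, required_fields=["id", "amount"]))
```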

Why Does Data Need to Be Transformed?

There are four main reasons to transform data so you can make the most of your information assets.

In the first place, business reporting requires consistent metrics and standardized formats across departments and systems.

A multinational corporation needs unified financial reporting despite operating in dozens of countries with different accounting standards, currencies, and fiscal calendars.

Transformation normalizes these variations, allowing accurate performance comparisons and strategic planning.

Secondly, machine learning applications demand structured, feature-rich datasets that algorithms can process effectively. Raw operational data rarely meets these requirements.

A predictive maintenance system for manufacturing equipment needs sensor readings converted to standardized units, imputed missing values, and engineered time-series features before models can generate accurate failure predictions.
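Those three preparation steps can be sketched in plain Python. The example assumes sensor readings arrive as (value, unit) pairs in volts or millivolts, with `None` marking a gap. The units, the mean imputation strategy, and the `build_features` name are all choices made for illustration.

```python
def to_volts(value, unit):
    """Standardize a reading to volts."""
    if unit == "V":
        return float(value)
    if unit == "mV":
        return value / 1000
    raise ValueError(f"unsupported unit: {unit}")


def build_features(readings, window=3):
    """readings: list of (value, unit) tuples; a value of None marks a gap."""
    volts = [None if v is None else to_volts(v, u) for v, u in readings]

    # Impute gaps with the mean of the observed readings.
    observed = [v for v in volts if v is not None]
    if not observed:
        raise ValueError("no observed readings to impute from")
    fill = sum(observed) / len(observed)
    filled = [fill if v is None else v for v in volts]

    # Engineer a trailing rolling-mean feature over up to `window` readings.
    features = []
    for i, value in enumerate(filled):
        recent = filled[max(0, i - window + 1) : i + 1]
        features.append({"volts": value, "rolling_mean": sum(recent) / len(recent)})
    return features


print(build_features([(500, "mV"), (None, "V"), (1.5, "V")], window=2))
```

Mean imputation uses values from the whole series, including later readings. For forecasting work, a forward fill or another strategy that only looks backward may be more appropriate.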

Additionally, compliance and governance mandate data protection, audit trails, and regulatory reporting capabilities.

Healthcare organizations must de-identify patient records for research while keeping analytical value.

Financial institutions need to mask sensitive customer information in development environments while preserving data relationships for testing purposes.
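One common way to mask values while keeping relationships intact is keyed hashing: the same input always produces the same token, so joins still work, but the original value is not stored. The sketch below uses Python's standard `hmac` and `hashlib` modules. The field names and the 16-character token length are assumptions, and this is pseudonymization for illustration rather than a complete de-identification or compliance solution.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Deterministic keyed hash: equal inputs give equal tokens."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # shortened token, chosen arbitrarily for readability


def mask_rows(rows, secret_key, sensitive_fields=("customer_id", "email")):
    """Replace sensitive fields with tokens and leave other fields untouched."""
    return [
        {
            key: pseudonymize(str(val), secret_key) if key in sensitive_fields else val
            for key, val in row.items()
        }
        for row in rows
    ]


key = b"example-key-store-real-keys-securely"
masked = mask_rows(
    [
        {"customer_id": "C1", "email": "a@example.com", "balance": 10},
        {"customer_id": "C1", "email": "b@example.com", "balance": 20},
    ],
    key,
)
# Both rows still share the same masked customer_id, so relationships survive.
print(masked[0]["customer_id"] == masked[1]["customer_id"])
```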

And finally, real-time analytics requires immediate data processing and standardization to support operational decision-making.

Supply chain optimization systems need inventory levels, forecasts, and logistics data transformed and synchronized across global operations to prevent stockouts and reduce carrying costs.

For these reasons, data transformation goes beyond technical necessity. It enables business agility, regulatory compliance, and competitive advantage through superior analytics capabilities.

How to Transform Data

Modern data transformation employs various techniques and tools, each optimized for specific scenarios and scale requirements.

Data Transformation Techniques

There are four techniques that can help you transform your data from its raw source into a usable, analytics-ready format.

Aggregation combines detailed records into summary statistics, reducing data volume while preserving analytical value.

Monthly sales rollups from daily transactions enable trend analysis without overwhelming storage systems or query performance.
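A monthly rollup is short enough to sketch directly. The example assumes daily sales arrive as (ISO date string, amount) pairs. In practice this would typically be a `GROUP BY` in the warehouse.

```python
from collections import defaultdict


def monthly_rollup(daily_sales):
    """Sum (YYYY-MM-DD, amount) pairs into totals keyed by YYYY-MM."""
    totals = defaultdict(float)
    for day, amount in daily_sales:
        totals[day[:7]] += amount  # the first 7 characters are the year and month
    return dict(sorted(totals.items()))


print(monthly_rollup([
    ("2024-01-05", 10.0),
    ("2024-01-20", 5.0),
    ("2024-02-01", 7.5),
]))
```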

Enrichment enhances datasets by adding contextual information from external sources. Geographic coordinates added to customer addresses enable location-based analytics and personalization strategies.
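In its simplest form, enrichment is a lookup against a reference dataset. The sketch below assumes a hypothetical table that maps postal codes to coordinates. The codes and coordinates are made-up placeholders, and a real pipeline would call a geocoding service or join against a maintained reference table.

```python
# Made-up reference data: postal code -> (latitude, longitude).
POSTAL_COORDS = {
    "P-001": (10.0, 20.0),
    "P-002": (11.5, 21.5),
}


def enrich_with_coordinates(customers, lookup=POSTAL_COORDS):
    """Add latitude and longitude to each customer; unknown codes get None."""
    enriched = []
    for customer in customers:
        lat, lon = lookup.get(customer.get("postal_code"), (None, None))
        enriched.append({**customer, "latitude": lat, "longitude": lon})
    return enriched


print(enrich_with_coordinates([
    {"name": "Ada", "postal_code": "P-001"},
    {"name": "Bo", "postal_code": "P-999"},
]))
```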

Normalization ensures consistent formats and scales across different data sources. Converting all temperature readings to Celsius, regardless of source system preferences, enables accurate environmental monitoring and analysis.
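The temperature example translates into a small conversion function. The unit codes (`C`, `F`, `K`) are an assumed convention for how source systems label their readings.

```python
def to_celsius(value: float, unit: str) -> float:
    """Normalize a temperature reading to Celsius."""
    unit = unit.strip().upper()
    if unit == "C":
        return float(value)
    if unit == "F":
        return (value - 32) * 5 / 9
    if unit == "K":
        return value - 273.15
    raise ValueError(f"unsupported unit: {unit}")


readings = [(212, "F"), (25, "c"), (300, "K")]
print([round(to_celsius(value, unit), 2) for value, unit in readings])
```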

Deduplication identifies and removes redundant records that compromise analytical accuracy. Customer databases often contain multiple entries for the same individual across different touchpoints, requiring sophisticated matching algorithms to maintain data integrity.
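Production matching is usually far more involved, but a basic version of the idea can be sketched with the standard library's `difflib`. Here two records count as the same customer when their emails match after normalization, or when they share a postal code and have very similar names. The 0.85 threshold and the field names are assumptions for illustration.

```python
from difflib import SequenceMatcher


def _norm(text: str) -> str:
    """Lowercase and collapse whitespace for comparison."""
    return " ".join(text.lower().split())


def is_same_customer(a: dict, b: dict, name_threshold: float = 0.85) -> bool:
    if _norm(a["email"]) == _norm(b["email"]):
        return True
    same_postal = a["postal_code"] == b["postal_code"]
    similarity = SequenceMatcher(None, _norm(a["name"]), _norm(b["name"])).ratio()
    return same_postal and similarity >= name_threshold


def deduplicate(records, name_threshold: float = 0.85):
    """Keep the first record of each matched group (pairwise comparison, O(n^2))."""
    unique = []
    for rec in records:
        if not any(is_same_customer(rec, kept, name_threshold) for kept in unique):
            unique.append(rec)
    return unique


records = [
    {"name": "John Smith", "email": "john@example.com", "postal_code": "111"},
    {"name": "Jon Smith", "email": "jsmith@example.com", "postal_code": "111"},
    {"name": "J. Smith", "email": "JOHN@example.com ", "postal_code": "222"},
    {"name": "Alice Wong", "email": "alice@example.com", "postal_code": "111"},
]
print([r["name"] for r in deduplicate(records)])
```

The pairwise comparison is fine for small batches. Larger datasets generally need blocking or indexing so that each record is compared only against likely candidates.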

Data Transformation Tools

Choosing the right techniques is crucial to getting the most out of your data, and so is picking the right tools.

Apache Spark provides distributed processing capabilities for large-scale data transformation workloads.

Its in-memory computing architecture speeds up complex transformations that involve multiple data sources and iterative processing.

Dataform offers a Git-based workflow for managing SQL-based transformations with version control, testing, and deployment automation.

This approach appeals to organizations prioritizing code quality and collaborative development practices.

dbt (data build tool) has revolutionized analytics engineering by enabling software development best practices in data transformation projects.

Its modular approach and extensive testing framework help teams build reliable, maintainable transformation pipelines.

Modern data transformation tools increasingly incorporate observability features that monitor pipeline health, data quality, and business metrics.

This integration ensures that transformed data is trustworthy and aligned with business expectations throughout its lifecycle.

Benefits and Challenges of Data Transformation

Understanding both advantages and obstacles helps organizations make informed decisions about transformation investments and strategies.

Benefits

- Data transformation offers improved analytics thanks to standardized, high-quality datasets. Organizations can implement advanced analytics, machine learning models, and real-time dashboards that would be impossible with raw, inconsistent data.

- Regulatory compliance becomes manageable through systematic data protection, audit trail generation, and detailed reporting. Transformation processes can automatically apply privacy controls, data retention policies, and regulatory formatting requirements.

- Automated transformation pipelines increase your operational scalability, allowing you to handle growing data volumes without increasing manual effort. Cloud-native transformation architectures can scale to accommodate business growth and seasonal variations.

- AI and machine learning readiness accelerates through feature engineering, data quality improvements, and format standardization that modern algorithms require. Organizations can rapidly deploy predictive models and intelligent automation systems.

Challenges

On the other hand, there are some challenges you will need to keep an eye on so they don’t negatively impact your datasets.

- Ingesting from many data sources, maintaining long transformation rules, and feeding many target systems all increase technical complexity. The right teams and tools can help manage dependencies, errors, and performance optimization.

- Maintaining a transformation infrastructure with cloud computing resources and skilled personnel can be expensive. This means you will need to take your budget constraints into consideration and plan ahead.

- If transformation mistakes occur, errors will cascade through your downstream systems and analytics applications, negatively impacting your data pipeline. A single misconfigured transformation can compromise reports and decisions across many departments within your organization.

- Transformation pipelines require constant monitoring, testing, and optimization to maintain effectiveness.

The most significant challenge organizations face is transformation without proper observability, operating more or less as a black box where data enters and results emerge without visibility into the process quality or accuracy.

This approach creates substantial risks for business-critical applications that depend on transformation outputs.

Guarantee Transformation Success with Data Observability

Data transformation success requires ongoing monitoring, validation, and optimization to avoid errors.

Modern observability platforms address the black box problem by providing comprehensive visibility into pipeline health, data quality, and business impact.

Effective data observability solutions detect anomalies, alert teams to quality issues, and provide diagnostic information that accelerates problem resolution.
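To give a feel for what anomaly detection on a pipeline can mean, here is a deliberately simple sketch that flags a daily row count when it falls too far from recent history, measured in standard deviations. The threshold of 3 and the `is_anomalous` helper are assumptions, and observability platforms generally use more sophisticated methods than this.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it sits more than `threshold` std devs from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


daily_row_counts = [1000, 1010, 990, 1005, 995]
print(is_anomalous(daily_row_counts, latest=400))
print(is_anomalous(daily_row_counts, latest=1002))
```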

These platforms ensure that transformed data remains accurate, timely, and aligned with business requirements throughout evolving organizational needs.

Organizations implementing comprehensive data transformation strategies should prioritize observability capabilities that match their technical architecture and business requirements. This investment pays dividends through reduced troubleshooting time, improved data quality, and increased confidence in analytical outcomes.

Check out how data observability can strengthen your transformation pipeline reliability and business impact with Sifflet today.
