AI is now integrated across industries. From banking and insurance to retail and transportation, automated decisioning systems, generative AI (GenAI), and large language models (LLMs) sit at the heart of most consumer-facing activities.

But model drift, algorithmic bias, and the proliferation of unsanctioned "shadow AI" tools have brought accusations of discrimination, legal exposure, and regulatory scrutiny.

Left unmanaged, these risks put hard-earned reputations and consumer trust in jeopardy.

As government oversight of AI accelerates through new laws, enforcement bodies, and regulatory mandates, AI governance has moved from intellectual musing to strategic priority.

Move over, data governance. AI governance is taking center stage.

What is AI Governance?

AI governance is the operational framework that guides the design, deployment, and oversight of artificial intelligence systems. It combines legal guardrails, organizational accountability, and technical controls to manage risk, uphold transparency, and maintain compliance across the AI lifecycle.

Objectives of an AI Governance Framework

AI governance policy must achieve three core objectives.

- Risk management

Algorithmic bias. Model drift. Unsafe or policy-violating responses. Data leakage.

These are the primary risks AI introduces, and any AI policy must address them.

That means requiring documented pre-deployment tests for bias and performance. Defining acceptable thresholds for model behavior. And monitoring for drift once models are in production.

AI governance must assign explicit ownership for each model, determine review intervals, and define when issues trigger rollback, retraining, or executive escalation.
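
To make ownership and escalation concrete, here is a minimal sketch of one model's governance record, with monitoring signals mapped to the actions named above. The `ModelGovernanceRecord` fields, the example owner, and every threshold value are hypothetical illustrations, not recommended settings; it also assumes drift and bias are already reduced to single scores by your monitoring stack.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    RETRAIN = "retrain"
    ROLLBACK = "rollback"
    ESCALATE = "executive escalation"


@dataclass
class ModelGovernanceRecord:
    # Hypothetical fields: every model gets a named owner and a review cadence.
    model_id: str
    owner: str
    review_interval_days: int
    # Hypothetical thresholds; real values come from your own risk policy.
    drift_retrain_threshold: float = 0.10
    drift_rollback_threshold: float = 0.25
    bias_escalation_threshold: float = 0.05


def decide_action(record: ModelGovernanceRecord, drift: float, bias_gap: float) -> Action:
    """Map monitored drift and bias scores to a governance action, most severe first."""
    if bias_gap > record.bias_escalation_threshold:
        return Action.ESCALATE
    if drift > record.drift_rollback_threshold:
        return Action.ROLLBACK
    if drift > record.drift_retrain_threshold:
        return Action.RETRAIN
    return Action.NONE


record = ModelGovernanceRecord("credit-scoring-v3", owner="risk-analytics-team", review_interval_days=90)
print(decide_action(record, drift=0.12, bias_gap=0.01))  # Action.RETRAIN
```

Checking bias before drift is a deliberate ordering choice here: a fairness breach escalates even if the model is otherwise stable.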

History has shown what happens when these safeguards are missing.

Generative AI image tools such as DALL·E 2 and Stable Diffusion raised sharp questions about bias, as prompts for "CEO" or "engineer" overwhelmingly generated images of white males. Compounding the furor, prompts for "housekeeper" and "nurse" mainly produced images of women and racial minorities.

These patterns reflect stereotypes embedded in the training data rather than deliberate choices in model design.

The lesson: standards for bias testing and mitigation controls must be explicitly stated in policy.

- Compliance assurance

Operational controls governing audit trails, model documentation, and review processes must comply with a myriad of mandates. Regimes such as the EU AI Act, GDPR, and emerging North American rules are only increasing in number and depth.

AI governance policy must translate regulatory obligations into lifecycle requirements. It must define the documentation, approvals, and evidence required at each stage of model development, deployment, and operation.
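
One way to picture "evidence required at each stage" is as a stage gate that lists what is still missing. This is a sketch under assumptions: the stage names and artifact names in `REQUIRED_EVIDENCE` are hypothetical examples, not items drawn from any specific regulation.

```python
# Hypothetical mapping from lifecycle stage to the evidence a policy might require.
REQUIRED_EVIDENCE: dict[str, set[str]] = {
    "development": {"model_card", "data_sources", "bias_test_report"},
    "deployment": {"risk_tier", "approval_record", "rollback_plan"},
    "operation": {"monitoring_dashboard", "review_log"},
}


def missing_evidence(stage: str, submitted: set[str]) -> set[str]:
    """Return the evidence items still missing before a model can pass this stage."""
    if stage not in REQUIRED_EVIDENCE:
        raise ValueError(f"unknown lifecycle stage: {stage}")
    return REQUIRED_EVIDENCE[stage] - submitted


def can_advance(stage: str, submitted: set[str]) -> bool:
    # A stage gate passes only when every required artifact is on file.
    return not missing_evidence(stage, submitted)


print(missing_evidence("deployment", {"risk_tier", "approval_record"}))  # {'rollback_plan'}
```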

- Sustainable deployment

AI systems tend to fail in one of two ways: they either stall indefinitely in pilot mode, or they proliferate faster than the enterprise can control.

Policy must enable AI systems to move into production and scale without introducing unmanaged risk or operational stall. To do so, it should stipulate deployment requirements, approval criteria, and post-deployment oversight.

Clear lines of ownership, predefined risk tiers, and uniform review processes will permit multiple models and AI tools to operate throughout the enterprise under a single governing framework.

These three objectives allow enterprises to back claims of responsible AI use with evidence, not aspiration.

AI Governance vs. Data Governance

AI governance and data governance are complementary but operate across different layers of risk.

Data governance manages inputs. It governs the quality, security, lineage, and availability of data across analytics and operations.

AI governance controls behavior. It guides model decision-making and helps determine whether those decisions remain fair, explainable, and safe after deployment.

Data governance determines whether data is accurate, protected, and appropriately used.

AI governance evaluates the outcomes produced by that data and ensures they conform to enterprise standards, legal obligations, and risk tolerance.

AI Governance Regulations And Frameworks

Governments worldwide have crafted regulations to control AI use and behavior. This marks the rise of AI government: public authorities defining how AI can be built, deployed, and monitored in practice. Enterprises must now demonstrate not only outcomes, but also the governance processes used to achieve them.

EU AI Act

Focus: Tighter control of AI systems that affect people or critical outcomes.

Obligations:

- Classify AI systems based on the level of risk they introduce

- Apply more rigorous controls and human oversight to higher-risk systems

- Maintain adequate documentation, monitoring, and audit records from development through production

Timeline:

- Feb 2025: Prohibitions on "Unacceptable Risk" systems took effect.

- Aug 2025: Governance obligations for General Purpose AI (GPAI) apply.

- Aug 2026: Full compliance for "High Risk" systems (e.g., employment, credit) begins.

- Aug 2026: Mandate for Regulatory Sandboxes to foster innovation.

GDPR (General Data Protection Regulation)

Focus: Protecting individual rights when decisions are influenced or made by automated systems.

Enterprise response:

- Identify where AI systems affect people in meaningful ways

- Ensure decisions can be explained, reviewed, and overridden by humans

- Maintain records connecting data inputs, model behavior, and decision outcomes

United States (NIST and State Laws)

Focus: A mix of voluntary federal frameworks and emerging state-level mandates.

Enterprise response:

- Adopt the NIST AI Risk Management Framework (AI RMF) to map, measure, and manage risks (the de facto US standard).

- Monitor state laws regarding automated employment decision tools (AEDT) and consumer profiling.

- Be able to show governance controls and decision processes during audits or investigations.

OECD Principles

Focus: Promoting responsible, human-centric AI across economies and societies.

Enterprise response:

- Design governance policies that prioritize transparency, accountability, system reliability, and human oversight.

- Align internal AI standards with widely accepted international principles.

- Build governance practices that anticipate future regulatory expectations.

Although not legally binding, the OECD principles hold significant sway. Aligning your policy with them is a sensible way to stay a step ahead of impending regulation.

How To Design An AI Governance Framework

You can create your AI governance framework by following five steps.

Step 1: Establish Decision Authority

Establish an AI governance group with clear authority over AI risk management and deployment decisions.

This group should guide how AI is designed, approved, and used across the organization, ensuring that innovation progresses without compromising compliance, safety, or accountability.

The governance group should be multidisciplinary.

Legal and compliance leaders play a critical role in interpreting applicable AI laws, regulations, and industry standards.

Technical leaders contribute by assessing feasibility, identifying risks, and designing effective controls.

Product owners help balance governance requirements with delivery timelines and business objectives, while domain experts provide essential insight into use-case-specific constraints, operational realities, and potential impacts on customers or stakeholders.

Once established, the AI governance team is responsible for defining and enforcing standards for approved AI use.

This includes setting clear approval criteria for all AI models, projects, and tools, as well as establishing escalation paths to resolve issues or risks as they arise. The team must also assign explicit ownership for key responsibilities, including deployment decisions, ongoing monitoring, and the authority to pause, retrain, or retire an AI model when necessary.

Step 2: Create Visibility Into Current AI Use

Build a complete inventory of all AI projects, models, and tools in use across the organization.

This inventory should include AI models currently in production, active pilots and proofs of concept, and any AI capabilities embedded within third-party software or platforms. It should also account for employee use of public generative AI tools, whether sanctioned or informal, as these can introduce unseen risks.

Keeping this inventory complete and up to date is a critical foundation for effective AI governance.

Without visibility into where and how AI is being used, it is not possible to design, apply, or enforce meaningful governance controls. This step enables informed decision-making, risk assessment, and the development of consistent standards for future AI adoption and oversight.
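
A minimal sketch of what an inventory entry might capture, assuming a hypothetical `AIAsset` schema. The helper flags entries that cannot yet be governed because they lack an accountable owner or were never sanctioned. Field names and asset kinds are illustrative, not a standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIAsset:
    # Hypothetical inventory schema covering the asset types listed above.
    name: str
    kind: str  # e.g. "production model", "pilot", "embedded vendor AI", "public GenAI tool"
    owner: Optional[str]
    sanctioned: bool


def governance_gaps(inventory: list[AIAsset]) -> list[str]:
    """Flag entries that cannot be governed yet: no owner, or not sanctioned."""
    gaps = []
    for asset in inventory:
        if asset.owner is None:
            gaps.append(f"{asset.name}: no accountable owner")
        if not asset.sanctioned:
            gaps.append(f"{asset.name}: unsanctioned use (possible shadow AI)")
    return gaps


inventory = [
    AIAsset("churn-model", "production model", "data-science", True),
    AIAsset("crm-email-assistant", "embedded vendor AI", None, True),
    AIAsset("public-chatbot", "public GenAI tool", None, False),
]
for gap in governance_gaps(inventory):
    print(gap)
```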

Step 3: Select A Governance Platform

Consider implementing an AI governance platform to centralize oversight and control across the AI lifecycle.

A dedicated platform can serve as a single source of truth for model documentation and ownership records, ensuring that accountability is clearly defined.

It can also store testing evidence related to bias, performance, and risk, providing transparency into how models are evaluated before and after deployment.

In addition, such platforms support structured approval workflows and preserve decision histories, which are critical for traceability and accountability. Centralized audit readiness and regulatory evidence further reduce friction when responding to internal reviews or external regulators.

Modern AI governance platforms act as compliance accelerators rather than administrative burdens.

They allow organizations to quickly update internal rule libraries as laws and regulations evolve, without rebuilding processes from scratch.

By centralizing evidence such as screenshots, logs, and validation artifacts in a single repository, these platforms simplify audits, reduce manual effort, and help organizations demonstrate responsible AI practices with confidence.
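
Platforms implement decision histories in their own ways, but the idea of a tamper-evident approval trail can be sketched with a hash chain: each record stores the hash of the one before it, so any later edit breaks verification. The `DecisionLog` class and its fields are hypothetical; this is one possible design, not how any particular platform works.

```python
import hashlib
import json
from datetime import datetime, timezone


class DecisionLog:
    """Append-only approval history; each entry hashes the previous one so edits are detectable."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, model_id: str, decision: str, approver: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "model_id": model_id,
            "decision": decision,
            "approver": approver,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        # Hash the entry contents (including the previous hash) in canonical JSON form.
        payload = json.dumps(entry, sort_keys=True).encode()
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the previous entry."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode()
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionLog()
log.append("credit-scoring-v3", "approved for production", "ai-governance-board")
log.append("credit-scoring-v3", "paused pending bias review", "model-owner")
print(log.verify())  # True
log.entries[0]["decision"] = "rejected"  # simulate tampering
print(log.verify())  # False
```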

Step 4: Classify AI Risk Tiers

Select a risk-tiering model to serve as the foundation of your AI governance program.

Frameworks such as the EU AI Act or the NIST AI Risk Management Framework provide structured approaches for categorizing AI systems based on their potential impact on business operations, individuals, and consumer rights.

The chosen model should be adapted to your organization’s jurisdiction, regulatory exposure, and risk tolerance, ensuring that governance requirements scale appropriately with risk.

Under this approach, minimal-risk systems typically include internal tools, spam filters, or non-critical analytics.

These systems generally require only basic documentation and ongoing monitoring.

High-risk systems, by contrast, are those that influence sensitive or regulated areas such as hiring decisions, credit eligibility, healthcare outcomes, or critical infrastructure. These require rigorous validation processes, including bias testing, False Positive Rate Parity checks, robust performance evaluation, and meaningful human oversight.

Finally, prohibited or unacceptable systems are those that violate privacy laws or fundamental ethical standards, such as non-consensual biometric identification. These systems should not be developed or deployed under any circumstances.
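
The tiering logic can be sketched as a rule that checks the most restrictive category first. The category sets below are hypothetical placeholders drawn from the examples above; a real policy would derive them from legal review and would likely include intermediate tiers (the EU AI Act, for instance, also imposes transparency obligations on certain limited-risk systems).

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    HIGH = "high"
    PROHIBITED = "prohibited"


# Hypothetical category lists, loosely echoing the examples in the text.
PROHIBITED_USES = {"non-consensual biometric identification"}
HIGH_RISK_DOMAINS = {"hiring", "credit", "healthcare", "critical infrastructure"}


def classify(use_case: str, domain: str) -> RiskTier:
    """Assign a tier, checking the most restrictive category first."""
    if use_case in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.MINIMAL


print(classify("resume screening", "hiring"))  # RiskTier.HIGH
print(classify("spam filtering", "internal"))  # RiskTier.MINIMAL
```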

Step 5: Define and Enforce Control Thresholds

Use the defined risk tiers to consistently enforce governance requirements throughout the entire AI lifecycle.

Each tier should clearly determine which approvals are required before a model can be deployed, the depth of testing and documentation that must be completed, the level of human oversight needed during operation, and how frequently models must be reviewed and revalidated.

This tiered approach ensures that governance controls are proportionate to risk and applied in a repeatable, transparent manner.
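
A tier-to-controls matrix can then expand into a concrete pre-deployment checklist. This is a sketch only: the approver roles, test names, oversight descriptions, and review intervals in `CONTROLS` are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierControls:
    approvals: tuple[str, ...]
    required_tests: tuple[str, ...]
    human_oversight: str
    review_interval_days: int


# Hypothetical control matrix; approvers, tests, and intervals are illustrative only.
CONTROLS = {
    "minimal": TierControls(("model_owner",), ("performance",), "none", 365),
    "high": TierControls(
        ("model_owner", "legal", "ai_governance_board"),
        ("performance", "bias", "fpr_parity", "robustness"),
        "human review of adverse decisions",
        90,
    ),
}


def deployment_checklist(tier: str) -> list[str]:
    """Expand a tier into the items that must be signed off before deployment."""
    if tier == "prohibited":
        raise ValueError("prohibited systems may not be deployed")
    controls = CONTROLS[tier]
    items = [f"approval: {a}" for a in controls.approvals]
    items += [f"test: {t}" for t in controls.required_tests]
    items.append(f"oversight: {controls.human_oversight}")
    items.append(f"revalidate every {controls.review_interval_days} days")
    return items


for item in deployment_checklist("high"):
    print(item)
```

Keeping the matrix as data rather than code means a policy change updates one table instead of every deployment script.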

Governance requirements should be specific and measurable rather than abstract. Instead of broadly requesting that models be “fair,” establish enforceable thresholds, such as prohibiting the deployment of any high-risk model if its False Positive Rate Parity deviation exceeds 5 percent.

This threshold caps how much more often any demographic group can be wrongly flagged as high risk than another, and it removes ambiguity from compliance decisions.
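
A minimal sketch of that gate. It assumes the "5 percent" threshold means an absolute gap of 0.05 between the highest and lowest group false positive rates (the text does not say whether the deviation is absolute or relative), and that labels are binary, with 1 meaning "flagged as high risk." The function names are hypothetical.

```python
from collections import defaultdict


def false_positive_rates(y_true, y_pred, groups) -> dict:
    """FPR per group = false positives / all actual negatives in that group."""
    fp = defaultdict(int)
    negatives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:  # only actual negatives can become false positives
            negatives[group] += 1
            if pred == 1:
                fp[group] += 1
    return {g: fp[g] / negatives[g] for g in negatives}


def passes_fpr_parity(y_true, y_pred, groups, max_gap: float = 0.05) -> bool:
    """Deployment gate: block if the largest FPR gap between groups exceeds max_gap."""
    rates = false_positive_rates(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values()) <= max_gap


y_true = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5
print(false_positive_rates(y_true, y_pred, groups))  # {'A': 0.25, 'B': 0.5}
print(passes_fpr_parity(y_true, y_pred, groups))     # False: gap of 0.25 exceeds 0.05
```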

With these controls in place, AI governance moves beyond policy documents and becomes embedded in day-to-day operational workflows.

Risk-based approvals, testing standards, and oversight mechanisms become part of how AI is built, deployed, and maintained, creating a sustainable and auditable governance framework.

Assess Your AI Governance Maturity

One doesn't move from zero governance to full governance overnight. Progression happens in distinct stages as controls, processes, and tooling develop.

Understanding where your enterprise sits on this curve determines what to prioritize next.

Most companies fall into one of three maturity levels.

Level 1: Reactive

AI use is decentralized, with no formal governance in place.

Characteristics:

- No centralized inventory of AI models or use cases

- Decisions rely on individual judgment rather than policy

- Issues such as bias, hallucinations, or misuse surface only after deployment

Risk Level: High. Maximum exposure to unintended consequences.

Level 2: Managed

A cross-functional committee oversees AI use through manual processes.

Characteristics:

- Standardized documentation and review templates are used

- Models must pass a manual approval process before deployment

- Defined roles and responsibilities for oversight are in place

Risk Level: Medium. Slow deployment and difficulty scaling can leave gaps in governance execution.

Level 3: Automated

AI governance is automated across all workflows.

Characteristics:

- Governance is embedded in deployment workflows

- Risk, bias, and performance controls are enforced automatically

- Models are continuously monitored against defined thresholds

Reaching this stage requires investment in automation and data observability tools.

The Role of Data Observability in AI Governance

AI governance defines the rules, but data observability is the tool that verifies whether AI actually follows them.

Once an AI model enters production or an AI tool is put into use, whether it behaves and performs as expected becomes an open question. Observability answers that question by continuously verifying that AI systems and tools operate within their approved boundaries.

This applies across the entire spectrum of AI use, including:

- Custom models deployed into production

- Embedded AI features inside third-party platforms and SaaS tools

- Automated decisioning systems that influence customer outcomes

- Generative AI tools used by employees for analysis, content, or support

Across each of these, observability helps governance answer the same core questions:

- Is the system still behaving as expected?

- Has data or decision logic drifted?

- Are outputs fair, explainable, and in line with AI policy?

- Are tools used in ways that match their approved purpose?

Observability also surfaces key metrics for assessing how effective AI governance is.

- Mean Time to Detection (MTTD) measures how quickly deviations such as drift or bias are identified once they emerge.

- Mean Time to Resolution (MTTR) measures how long it takes to remediate issues through rollback, retraining, or adjustment.
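
Both metrics reduce to averaging time intervals over an incident log. A minimal sketch, assuming a hypothetical `Incident` record with onset, detection, and resolution timestamps; it measures MTTR from detection to resolution, though some teams measure it from onset instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime   # when the drift or bias actually began
    detected: datetime  # when monitoring raised the alert
    resolved: datetime  # when rollback, retraining, or adjustment completed


def mean_delta(deltas: list[timedelta]) -> timedelta:
    return sum(deltas, timedelta()) / len(deltas)


def mttd(incidents: list[Incident]) -> timedelta:
    return mean_delta([i.detected - i.started for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    # Measured here from detection to resolution (an assumption; see lead-in).
    return mean_delta([i.resolved - i.detected for i in incidents])


t0 = datetime(2025, 1, 1)
incidents = [
    Incident(t0, t0 + timedelta(hours=2), t0 + timedelta(hours=10)),
    Incident(t0, t0 + timedelta(hours=4), t0 + timedelta(hours=16)),
]
print(mttd(incidents), mttr(incidents))  # 3:00:00 10:00:00
```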

Beyond these metrics, observability detects shifts in model performance over time.

With data drift, observability flags when incoming data no longer resembles the data on which the model was trained.

With concept drift, it flags when the relationship between inputs and outputs changes.

Degradation is detected by monitoring input distributions, output values, and decision outcomes.
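
Monitoring input distributions is often done with the Population Stability Index (PSI), which compares binned proportions of a feature in training data against production data. The sketch below is one simple variant: it assumes equal-width bins over the training range (quantile bins are also common), and the widely quoted reading of PSI above roughly 0.25 as significant drift is an industry rule of thumb, not a standard. PSI covers data drift only; concept drift needs labeled outcomes to detect.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a training sample and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


train = [i / 100 for i in range(100)]           # roughly uniform on [0, 1)
prod = [0.5 + i / 200 for i in range(100)]      # shifted toward the upper half
print(psi(train, train))       # 0.0
print(psi(train, prod) > 0.25)  # True
```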

Yet even when a system functions normally, its outcomes must be explainable to pass muster.

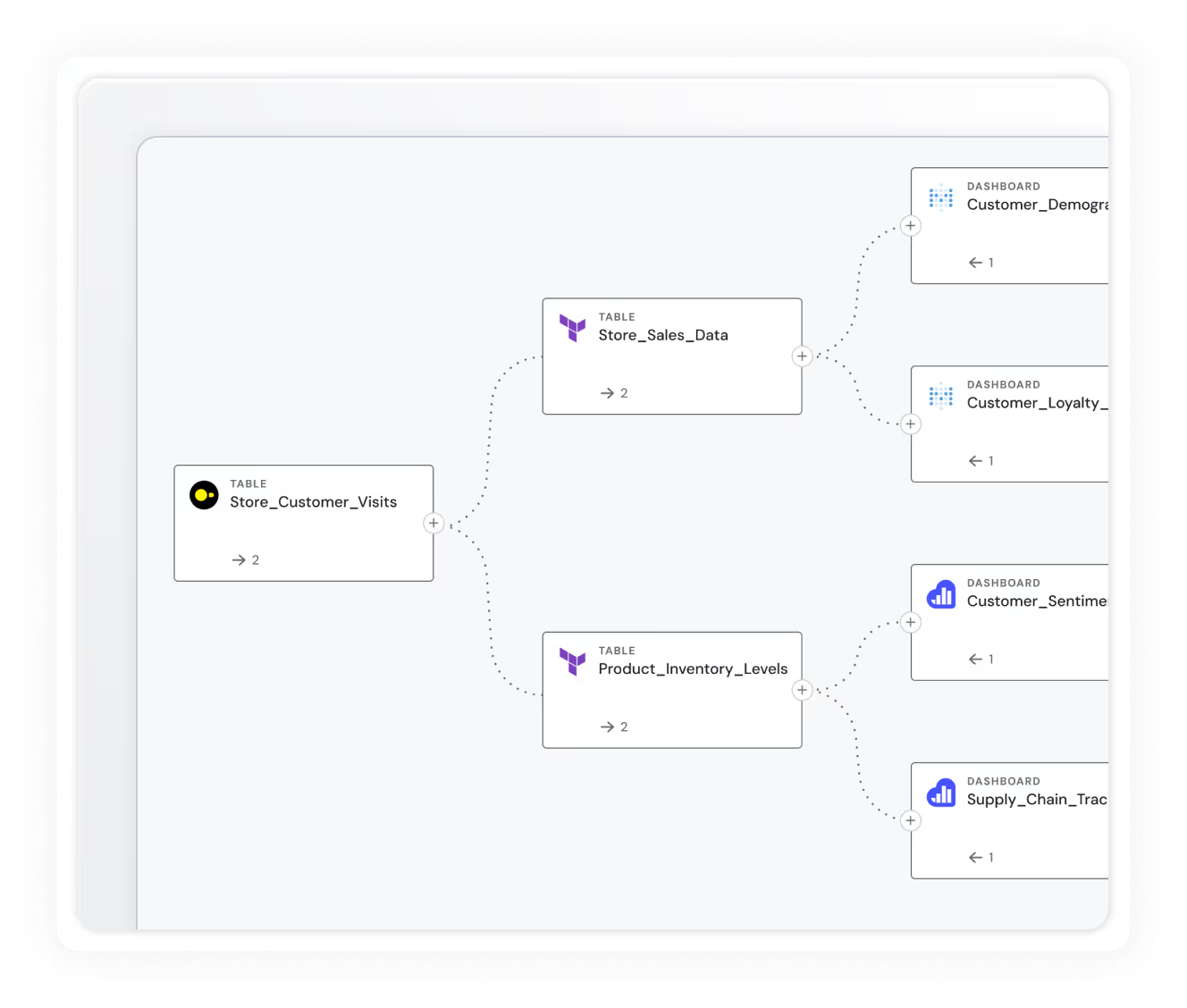

Observability unifies data lineage, input conditions, model behavior, and its downstream impact.

Rather than offering isolated metrics or one-off explanations, it lets teams reconstruct the full decision path and the data behind it:

- Which data was used

- How it changed

- How the model responded

- Where the output was consumed

When a decision is questioned, it can be traced back through the pipeline to identify contributing factors and to show, with time-stamped evidence, whether the system was operating within approved thresholds and policies when the decision was made.
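
A per-decision trace record is one way to make that reconstruction possible. This sketch assumes a hypothetical `DecisionTrace` schema whose fields mirror the four questions listed earlier (data used, how it changed, how the model responded, where the output went), plus the drift limit in force at the time; the identifiers and values are invented for illustration.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DecisionTrace:
    # Hypothetical fields mirroring the traceability questions.
    decision_id: str
    input_snapshot_id: str  # which data was used
    pipeline_version: str   # how the data was transformed
    model_version: str      # which model produced the response
    output: float
    consumed_by: str        # where the output was consumed
    drift_score: float      # monitored drift at decision time
    drift_limit: float      # approved threshold in force at that time
    timestamp: str          # ISO 8601, UTC


def within_policy(trace: DecisionTrace) -> bool:
    """Was the system inside its approved drift boundary when this decision was made?"""
    return trace.drift_score <= trace.drift_limit


trace = DecisionTrace(
    decision_id="loan-4821",
    input_snapshot_id="snapshot-2025-06-01",
    pipeline_version="features-v12",
    model_version="credit-scoring-v3",
    output=0.82,
    consumed_by="loan-origination-service",
    drift_score=0.07,
    drift_limit=0.10,
    timestamp="2025-06-01T09:30:00+00:00",
)
print(within_policy(trace))  # True
print(json.dumps(asdict(trace), indent=2))  # serialized record for audit storage
```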

Technical Note: Advanced tools use interpretability techniques such as SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) to explain individual predictions. These techniques help turn a 'black box' model into more of a 'glass box,' giving auditors evidence of which inputs drove a given outcome.
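
To show what a Shapley-based explanation computes, here is a brute-force sketch of exact Shapley values for a single prediction. It is not the `shap` library's API, which uses much faster approximations; it assumes features left out of a coalition are replaced by baseline values, and its cost grows exponentially with the number of features, so it only suits a handful of inputs.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x: list[float], baseline: list[float]) -> list[float]:
    """Exact Shapley attributions; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: set[int]) -> float:
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


linear = lambda z: 2 * z[0] + 3 * z[1] + 1
print(shapley_values(linear, [1.0, 2.0], [0.0, 0.0]))  # [2.0, 6.0]
```

The attributions always sum to the difference between the model's output for `x` and for the baseline (here 9.0 - 1.0 = 8.0), which is what lets an auditor account for every part of a prediction.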

Where AI Governance Meets Reality

The era of unchecked AI experimentation is over. Enterprises need control over AI.

Not in theory, but in practice.

With global regulations tightening, from the EU AI Act's high-risk requirements in 2026 to emerging US federal guidance and state laws, the window for "wait and see" has closed.

But beyond compliance, the real driver is trust.

Governance defines acceptable AI use.

Observability is how you monitor and objectively demonstrate that those standards are being met.
