Data observability isn't new. Artificial intelligence is.

As AI reshapes how we collect, process, and interpret data, it's also redefining how we observe it.

Observability has always been about trust, but now that trust rests on a new discipline: AI Observability.

Monitoring vs AI Observability

Before AI, observability was limited to monitoring your data.

System monitoring employs binary checks and thresholds to determine whether conditions meet predefined standards: Was the pipeline completed? Was CPU usage within range? Did the dashboard refresh?

Effective for these pass/fail scenarios, system monitoring wasn't designed to address complex data issues.
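To make the pass/fail nature of system monitoring concrete, here is a minimal Python sketch of the three checks mentioned above. The `PipelineRun` fields and the threshold values are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class PipelineRun:
    # Hypothetical fields describing one monitored run.
    completed: bool
    cpu_percent: float
    dashboard_age_minutes: float


def run_checks(run: PipelineRun, max_cpu: float = 85.0, max_age: float = 60.0) -> dict[str, bool]:
    """Return a pass/fail verdict for each predefined condition."""
    return {
        "pipeline_completed": run.completed,
        "cpu_within_range": run.cpu_percent <= max_cpu,
        "dashboard_refreshed": run.dashboard_age_minutes <= max_age,
    }


results = run_checks(PipelineRun(completed=True, cpu_percent=91.5, dashboard_age_minutes=12))
failed = [name for name, ok in results.items() if not ok]
print(failed)
```

Each check answers yes or no. Nothing in the result says which dataset, report, or decision is affected by the failed check.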

Model monitoring tracks accuracy, drift, and bias in machine learning models, using performance metrics, statistical tests, and alerting rules. Yet, despite its sophistication, it operates in isolation, unable to assess how model errors might affect downstream processes and outputs.

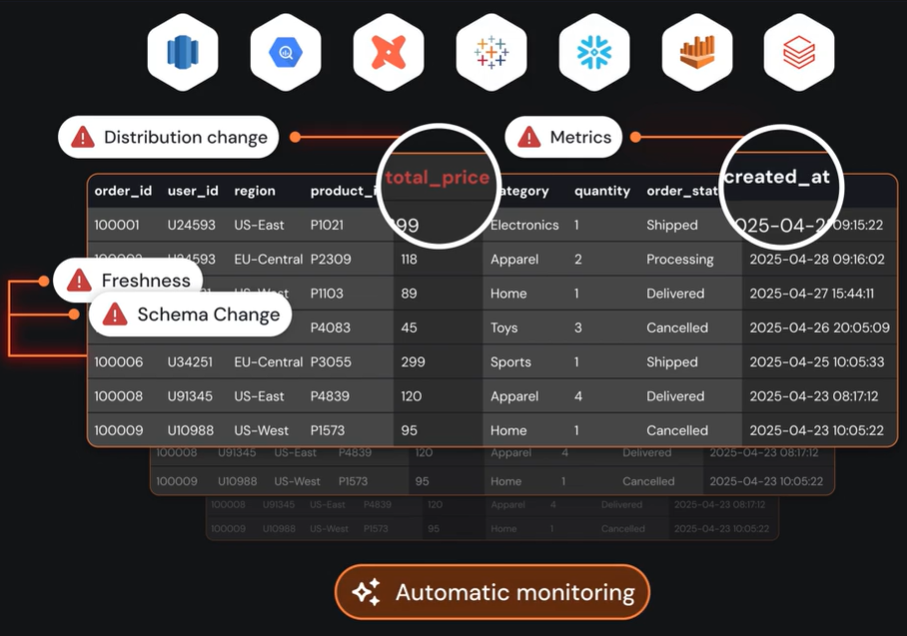

AI observability, on the other hand, uses dedicated AI-powered tools to monitor, measure, and understand your data: what breaks, and why.

AI observability digs into model performance, data quality, feature drift, prediction accuracy, and system behavior over time.

Essentially, AI observability allows proactive detection of issues, faster troubleshooting, and continuous improvement of AI systems.

Observability forms a connective layer over system and model monitoring.

By integrating and correlating system metrics, performance data, error logs, catalog metadata, and lineage, Sifflet creates a system-wide view of data and its transformations.

However, without business context, traditional observability can't connect issues to their root causes or to their downstream effects on the business.

Quantifying Business Impact with AI Observability

AI observability closes these blind spots by overlaying business context onto technical data streams. It follows and links system anomalies to the business-related metrics they ultimately distort: skewed revenue projections, flawed forecasts, and regulatory reporting errors.

AI observability raises alerts on these issues with context attached. Rather than flood dashboards with undifferentiated signals, it ranks anomalies by their potential impact on the business.

The result is a clear hierarchy of attention, where issues that threaten critical business processes are prioritized over routine noise.
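One way to picture this ranking is the minimal Python sketch below. It is not Sifflet's implementation. The `Anomaly` fields, the `KPI_WEIGHTS` table, and the scoring formula (technical severity multiplied by the weight of the most critical downstream asset) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Anomaly:
    name: str
    severity: float                      # 0..1 technical severity (hypothetical scale)
    downstream_assets: tuple[str, ...]   # assets reached through lineage


# Hypothetical business weights per downstream asset.
KPI_WEIGHTS = {
    "revenue_dashboard": 1.0,
    "regulatory_report": 0.9,
    "internal_sandbox": 0.1,
}


def impact_score(anomaly: Anomaly) -> float:
    """Severity scaled by the most business-critical asset the anomaly can reach."""
    weight = max((KPI_WEIGHTS.get(a, 0.0) for a in anomaly.downstream_assets), default=0.0)
    return anomaly.severity * weight


def rank(anomalies: list[Anomaly]) -> list[Anomaly]:
    """Order anomalies from highest to lowest estimated business impact."""
    return sorted(anomalies, key=impact_score, reverse=True)


alerts = [
    Anomaly("traffic_spike", 0.9, ("internal_sandbox",)),
    Anomaly("pricing_schema_change", 0.6, ("revenue_dashboard",)),
    Anomaly("late_partition", 0.5, ("regulatory_report",)),
]
ranked_names = [a.name for a in rank(alerts)]
print(ranked_names)
```

In this toy example the traffic spike has the highest technical severity but lands last, because the only asset it touches carries little business weight.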

It also strengthens governance. By capturing lineage, monitoring policy adherence, and surfacing audit-ready transparency, AI observability makes compliance an embedded capability rather than an afterthought.

Sifflet embeds this intelligence directly into its platform, tying anomalies to business KPIs so leaders see not just what failed, but how it affects revenue, compliance, and customer trust.

How Does AI Enhance Observability?

Traditional observability confirmed what failed, but not why it mattered. When a pipeline breaks or a query slows, the bigger question is left unanswered: Did that failure disrupt a forecast or hinder customer transactions?

The result is signals without context. Dashboards flash red, and every alert looks the same as the next. Without clarity about an issue's actual business impact, resolution teams are left to guess which alerts to address first.

AI observability goes further. It applies adaptive intelligence to detect data issues across the stack and ranks them according to their business consequence.

For example, in an e-commerce company, observability might detect a schema change in pricing logic that will result in undercharging customers. AI prioritizes this alert over dozens triggered by routine traffic spikes, directing attention to the issue threatening a critical business outcome: revenue.

The difference is in the business context and prioritization. AI analyzes the technical signals received, correlates them to their downstream impact, and prioritizes them based on their eventual effect on the business.

AI turns observability into an intelligence layer that responds to data and system issues and to the business outcomes they influence.

That shift in perspective sets the stage for the next challenge: applying this intelligence layer to the many forms AI takes.

AI Systems That Demand Visibility

AI isn't a single technology.

It shows up in models, agents, and predictive tools, each presenting unique risks. Some drift quietly until predictions no longer hold. Others produce convincing but flawed outputs. And some act autonomously, multiplying the impact of small mistakes.

Observability makes these risks visible, so trust is preserved no matter how AI is applied.

So, which AI technologies call for observability?

Machine Learning Models & Predictive Analytics

Machine learning models power everything from fraud detection to revenue forecasting. But as data shifts, so do outcomes. Data and concept drift can quietly erode accuracy, leaving forecasts, staffing plans, or financial models misaligned with reality.

AI observability tracks input distributions, feature behavior, and outputs against historical baselines. It flags irregularities when performance degrades and ties them back to the underlying data sources or transformations responsible. With this context, data scientists know not only that intervention is needed but exactly where to focus, whether retraining a model, adjusting inputs, or refining rules.
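As an illustration of comparing an input distribution against a historical baseline, the sketch below computes the Population Stability Index (PSI), one common drift statistic. The source does not say which tests Sifflet uses, so treat the choice of PSI, the bin count, and the 0.25 alert threshold (a widely quoted rule of thumb) as assumptions.

```python
import math


def psi(baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a current sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


baseline = [i / 100 for i in range(100)]        # spread evenly across [0, 1)
current = [0.5 + i / 200 for i in range(100)]   # squeezed into [0.5, 1)
score = psi(baseline, current)
print(f"PSI = {score:.2f}", "drift suspected" if score > 0.25 else "stable")
```

A statistic like this only says that a feature has shifted. Tying the shift back to a source table or transformation still depends on lineage.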

With Sifflet, these alerts are surfaced alongside their downstream impact, so leaders see how drift in a model cascades into missed forecasts, inaccurate KPIs, or flawed decisions.

Large Language Models (LLMs)

Large Language Models (LLMs), chatbots, and many other AI-driven text tools are trained on vast amounts of data. Although they generate content that can appear convincing, they are prone to errors, such as fabricating facts and details or straying from brand and compliance standards.

AI observability monitors LLM outputs in real time, flags when they move off course, and connects issues back to the prompts and data that produced them. Whether the cause is flawed inputs, insufficient data, or model drift, it supplies the clarity to adjust prompts, retrain, or add guardrails before errors reach customers or regulators.
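A very simple guardrail of this kind can be sketched in Python as follows. The `LlmRecord` structure and the patterns in `BANNED_PATTERNS` are hypothetical. Pattern matching like this catches only explicit policy violations, not fabricated facts, so it stands in for a much richer set of checks.

```python
import re
from dataclasses import dataclass


@dataclass
class LlmRecord:
    prompt_id: str   # lets a flagged output be traced back to its prompt
    prompt: str
    output: str


# Hypothetical compliance rules expressed as regular expressions.
BANNED_PATTERNS = {
    "guaranteed_returns": re.compile(r"\bguaranteed returns?\b", re.IGNORECASE),
    "medical_directive": re.compile(r"\byou should stop taking\b", re.IGNORECASE),
}


def flag_outputs(records: list[LlmRecord]) -> list[tuple[str, str]]:
    """Return (prompt_id, rule_name) for every output that violates a rule."""
    flags = []
    for record in records:
        for rule, pattern in BANNED_PATTERNS.items():
            if pattern.search(record.output):
                flags.append((record.prompt_id, rule))
    return flags


records = [
    LlmRecord("p1", "Describe our fund.", "Our fund offers guaranteed returns of 12%."),
    LlmRecord("p2", "Describe our fund.", "Past performance does not predict future results."),
]
flags = flag_outputs(records)
print(flags)
```

Because each flag carries the `prompt_id`, a reviewer can go from the offending output straight to the prompt that produced it.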

AI Agents

AI agents are autonomous software that use a variety of digital tools to make decisions and take action toward a defined goal. They can reconcile financial records, respond to customer service requests and emails, or coordinate multi-step workflows.

Their autonomy makes them decisive, but introduces the risk of compound error. A single mistake, caused by a bad input, flawed rule, or API failure, can spiral into a string of erroneous actions.

AI observability lends accountability to agents by continually scrutinizing their activities and decisions. When an anomaly or mistake occurs, it retraces the agent's actions and decisions back to their data sources and triggers, supplying a complete cause-and-effect analysis that speeds up investigation and resolution.

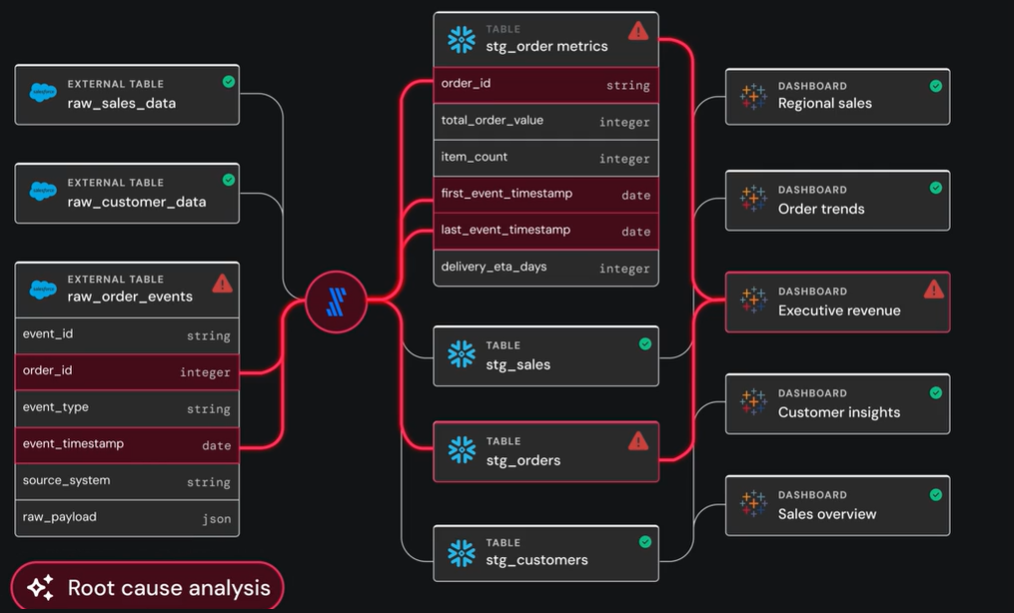

AI-Powered Root Cause Analysis

Root cause analysis (RCA) traces an incident to its source. When a pipeline breaks or a dashboard fails to refresh, the goal is to find the underlying issue at fault. Traditionally, this requires tedious, manual processes and hours, or even days, of investigation.

AI-powered RCA automates log, metric, trace, and lineage analysis to pinpoint incident sources in minutes rather than hours. It correlates anomalies across systems and surfaces the most likely causes for swift resolution.
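The lineage part of that analysis can be pictured with the short Python sketch below. It assumes a hypothetical `LINEAGE` map and a set of assets already flagged as anomalous, then walks upstream from the incident and keeps the anomalous assets that have no anomalous parent. A real RCA engine would also weigh logs, metrics, and traces, so this is a deliberate simplification.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the upstream assets it reads from.
LINEAGE = {
    "revenue_dashboard": ["orders_mart"],
    "orders_mart": ["orders_clean", "pricing_clean"],
    "orders_clean": ["orders_raw"],
    "pricing_clean": ["pricing_raw"],
    "orders_raw": [],
    "pricing_raw": [],
}


def likely_root_causes(incident: str, anomalous: set[str]) -> list[str]:
    """Breadth-first walk upstream; keep anomalous assets with no anomalous parent."""
    seen, queue, roots = {incident}, deque([incident]), []
    while queue:
        node = queue.popleft()
        parents = LINEAGE.get(node, [])
        if node in anomalous and not any(p in anomalous for p in parents):
            roots.append(node)
        for parent in parents:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return roots


anomalous = {"revenue_dashboard", "orders_mart", "pricing_clean", "pricing_raw"}
roots = likely_root_causes("revenue_dashboard", anomalous)
print(roots)
```

Here the broken dashboard is traced through two intermediate tables to a single raw source, which is where an engineer would start looking.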

As McKinsey observes, AI reframes the challenge of root cause analysis into a defined business problem.

That’s exactly where Sifflet stands apart: by linking each root cause not just to technical events, but to the KPIs and decisions they disrupt. Instead of engineers drowning in raw signals, leaders see a clear narrative that connects cause, impact, and business consequence.

Layers of AI Observability Across the Stack

Modern data stacks span multiple layers, each with its own risks.

AI observability transcends and connects them all. From user-facing apps and orchestration flows to autonomous agents and core models, observability permeates each layer to detect issues, trace their impact, and build trust from interface to infrastructure.

- Application layer

The application layer is where people interact with data via apps, dashboards, recommendation engines, and other tools.

AI observability closely tracks the user experience, measuring performance and error rates. It correlates user satisfaction scores with technical metrics to determine whether systems deliver reliable, high-quality user experiences.

- Orchestration layer

The orchestration layer acts as the connective engine of your stack, moving data through pipelines, syncing with external services, and coordinating complex workflows.

Here, observability follows each workflow from start to finish. It records delays, failure points, and technical issues, linking them to missed insights or failed outcomes.

- Agentic layer

The agentic layer is where autonomous AI agents make decisions, take actions, and use numerous tools to accomplish their assigned objectives.

AI tracks each decision path in real time, linking the agent's actions to the data, prompts, and rules that influenced them. For example, if an agent approved duplicate payments or misrouted customer records, AI flags the error, identifies the root cause, and initiates an intervention.
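The duplicate-payment case can be sketched in Python as a scan over an agent's action trace. The `AgentAction` fields and the sample trace are hypothetical. The point is that each recorded action keeps a reference to the input that triggered it, so a flagged action can be traced back.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentAction:
    step: int
    action: str
    invoice_id: str
    source: str  # data source or prompt that triggered the action (hypothetical)


def find_duplicate_payments(trace: list[AgentAction]) -> list[tuple[AgentAction, AgentAction]]:
    """Pair each repeated payment approval with the first approval of the same invoice."""
    first_seen: dict[str, AgentAction] = {}
    duplicates = []
    for act in trace:
        if act.action != "approve_payment":
            continue
        if act.invoice_id in first_seen:
            duplicates.append((first_seen[act.invoice_id], act))
        else:
            first_seen[act.invoice_id] = act
    return duplicates


trace = [
    AgentAction(1, "approve_payment", "INV-1", "erp_export"),
    AgentAction(2, "send_email", "INV-1", "erp_export"),
    AgentAction(3, "approve_payment", "INV-2", "erp_export"),
    AgentAction(4, "approve_payment", "INV-1", "email_attachment"),
]
duplicates = find_duplicate_payments(trace)
for original, repeat in duplicates:
    print(f"step {repeat.step} repeats step {original.step}; triggered by {repeat.source}")
```

In this example the second approval of `INV-1` came from a different input than the first, which points the investigation at how that input entered the workflow.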

- Model and LLM layer

The model and LLM layer is where predictive algorithms and language models generate outputs from their extensive data sources.

Here, AI monitors performance metrics, detects drift, and verifies that outputs are logical and consistent with their inputs.

Across all four layers, observability provides a connected view of how data and systems behave. It shows where errors occur, illustrates their impact on downstream processes and outcomes, and recommends remedial courses of action.

To bring this layered visibility to life, Sifflet embeds intelligence directly into its platform through a set of specialized AI agents.

Sifflet's AI Agents

Sifflet integrates intelligence at the core of its observability platform via three purpose-built AI agents.

Each agent plays its part in detecting critical issues, mapping their impact, and guiding teams toward resolution.

Sentinel

Sentinel is the platform’s strategic guardian, built to stop failures before they spread.

It analyzes lineage, schema changes, and usage patterns to identify where risk is rising and recommends targeted monitoring with minimal setup.

The result: blind spots close, noise is reduced, and the KPIs that drive business decisions stay protected.

Sage

Sage is the platform’s institutional memory, turning every incident into a story leaders can trust.

It recalls how the environment has evolved, correlates today’s anomalies with past events, and rebuilds incidents into clear, explainable narratives.

By surfacing both the root cause and the business impact, Sage accelerates resolution and preserves critical knowledge across teams and time.

Forge

Forge is the contextual problem solver, turning diagnosis into action.

It learns from successful fixes, compares code patterns, and drafts recommended solutions complete with rationale and review guidance.

Engineers stay in control, but resolution accelerates and consistency improves. Forge ensures that insight leads directly to reliability, keeping the business moving without interruption.

All three agents work together. Sentinel prevents, Sage investigates, and Forge resolves.

Together, they form an AI-native intelligence layer that scales observability and embeds trust across the stack.

Final Thoughts…

AI has redefined observability.

While legacy platforms bolt on AI, Sifflet’s AI-native foundation, backed by an integrated catalog, real-time lineage, and specialized agents, delivers immediate, business-aligned insights.

The outcome: data and systems that remain reliable, accountable, and trusted across the stack.

That is AI observability.

Book a demo today and put business-first observability to work in your organization.

Frequently Asked Questions (FAQ)

What is AI observability?

AI observability uses artificial intelligence to monitor, analyze, and explain data and AI system behavior. It detects anomalies, prioritizes them by business impact, and traces issues to their root cause.

How does AI observability differ from traditional monitoring?

Traditional monitoring confirms system health using binary checks and thresholds. AI observability adds adaptive intelligence and business context to detect subtle anomalies, rank alerts by impact, and reveal how technical failures affect key metrics like revenue and compliance.

Why is AI observability critical for business?

It improves data reliability and decision confidence by surfacing the most consequential issues first, reducing downtime costs, and strengthening audit readiness with transparent lineage and impact analysis.

Does AI observability replace existing monitoring?

No, it complements it. Monitoring remains essential for infrastructure and job status checks. AI observability builds atop these signals, enriching them with context and guiding teams to the highest-priority issues.
