It's unlikely that one observability platform can fit every need perfectly.

Some excel at monitoring, while others shine more for data quality and impact analysis. Acceldata's Data Observability Cloud (ADOC), for example, promises to deliver end-to-end observability.

This Acceldata review helps you understand the platform's strengths, where it falls short, and how real users rate their experience with it.

What Is Acceldata?

Acceldata is an agentic data management platform that handles both data observability and data reliability in one tool.

Quite recently, in 2025, Acceldata turned toward Agentic Data Management (ADM) as an integrated AI layer. It’s positioned as the industry's first autonomous, self-healing framework for data operations.

Included in this AI-first vision are AI agents that profile data, trace lineage, and surface anomalies. Agents learn baseline behavior to detect deviations, with ADM's Agentic Planning breaking issues into tasks, assigning them to agents, and adjusting response strategies.

ADM also features an AI conversational interface, allowing users to pose natural-language queries such as 'Show me pipelines with freshness issues last week' to receive instant insights, recommendations, or automated actions from the agentic framework.

The product offers depth of coverage, automation, and cross-platform reach. But who is best positioned to take full advantage of it?

The Data Observability Cloud covers five domains to deliver its brand of visibility into enterprise data: pipelines, infrastructure, quality, usage, and cost. That breadth of signals is its calling card.

ADOC's solution blends telemetry with metadata to spot operational anomalies across data quality, performance, and usage. Once detected, these issues feed into alerts, dashboards, and triage workflows where they can be adequately addressed.

Acceldata promises to debug data anomalies in complicated data environments, for example, resolving pipeline drift in Snowflake or tracking throughput drops in Kafka.

Essentially, Acceldata aims to cover your entire data stack.

Who Benefits Most from Acceldata’s Observability Platform?

High-volume enterprises with complex on-prem, cloud, and multi-cloud deployments are best positioned to capitalize on ADOC.

That includes financial firms that run on mainframes and cloud warehouses, telecoms that operate event-driven systems, and manufacturers with supply chains spanning on-prem and cloud platforms.

Most customers are large enterprises or mid-market companies with mature data teams who seek a unified view of pipeline health, infrastructure load, and cloud usage to reduce risk, improve reliability, and control costs.

What Are Acceldata’s AI Agents?

Acceldata's Agentic Data Management layer adds a new level of intelligence and coordination to the platform. At its center is the xLake Reasoning Engine, which acts as a shared brain for all agents, providing memory, context, and direction.

Extending across hybrid and multi-cloud systems using the Cross-Lake Coordination Protocol, agents can operate collaboratively to spot and solve data quality issues and perform root cause analysis.

ADM also brings governance-aware orchestration to the platform, combining shared memory, policy-driven workflows, and human-in-the-loop approvals to align automation with business rules.

Each agent handles a distinct domain but coordinates through ADM’s shared intelligence layer.

Data Quality Agent

The Data Quality Agent is responsible for maintaining data reliability across pipelines by finding, fixing, and preventing data quality issues.

The agent monitors your data pipelines, tables, and batch jobs to detect missing values, schema drift, duplicates, or stale records. It adapts its checks based on historical patterns, data, and business context, much like Sifflet.
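To make those four checks concrete, here is a minimal sketch in plain Python. It is not Acceldata's API or implementation; the function name `check_batch`, its parameters, and the sample rows are all hypothetical, and a real agent would learn its thresholds rather than take them as arguments.

```python
from datetime import datetime, timedelta

def check_batch(rows, expected_columns, key, ts_field, now, max_age):
    """Return simple data quality findings for a batch of dict rows (illustrative only)."""
    findings = {"missing_values": 0, "schema_drift": [], "duplicates": 0, "stale": 0}
    seen_keys = set()
    drift = set()
    for row in rows:
        # Schema drift: columns added or dropped relative to the expected set.
        drift |= set(row) ^ set(expected_columns)
        # Missing values: expected columns that are absent or None.
        findings["missing_values"] += sum(
            1 for c in expected_columns if row.get(c) is None
        )
        # Duplicates: a key value that has already appeared in this batch.
        k = row.get(key)
        if k in seen_keys:
            findings["duplicates"] += 1
        seen_keys.add(k)
        # Stale records: timestamp older than the allowed age.
        ts = row.get(ts_field)
        if ts is not None and now - ts > max_age:
            findings["stale"] += 1
    findings["schema_drift"] = sorted(drift)
    return findings

# Hypothetical sample batch.
now = datetime(2025, 1, 10)
rows = [
    {"id": 1, "amount": 10.0, "updated_at": datetime(2025, 1, 9)},
    {"id": 1, "amount": None, "updated_at": datetime(2025, 1, 9)},
    {"id": 2, "amount": 5.0, "updated_at": datetime(2024, 12, 1), "currency": "EUR"},
]
findings = check_batch(
    rows, ["id", "amount", "updated_at"], "id", "updated_at", now, timedelta(days=7)
)
```

In this sample, the second row repeats an `id` and has a null `amount`, while the third row carries an unexpected `currency` column and a timestamp older than seven days.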

When a problem is found, the agent performs contextual diagnosis using data lineage and dependency analysis to trace anomalies back to their true source.

Once the cause of a problem is known, the Data Quality Agent can solve issues proactively.

Over time, the agent learns from these experiences and user feedback, recommending preventive measures such as adaptive guardrails or freshness policies that reduce the chance of the same issue recurring.

This agent has a human-in-the-loop (HITL) design. While the agent can act autonomously, users retain oversight: they can review anomalies before actions are taken, validate or reject findings, override false positives, approve remediation steps, and confirm or adjust the agent’s root-cause analysis.

The agent is powered by Acceldata’s xLake Reasoning Engine, a contextual AI layer that allows it to reason about data relationships, analyze causes, and interact through natural language. That means you can type in questions, which makes monitoring more intuitive.

Ultimately, the Data Quality Agent aims to give organizations a dependable, continuously improving data foundation.

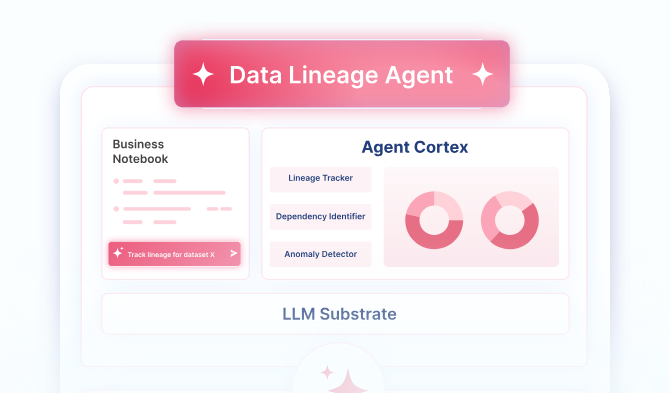

Lineage Agent

Acceldata’s Data Lineage Agent continuously maps, monitors, and manages data flows across your ecosystem.

Its purpose is to create a view of how data moves, from upstream sources through transformation pipelines to downstream consumers.

The Lineage Agent connects your data, providing awareness that other agents rely on for diagnosis, remediation, and governance.

The agent operates by automatically scanning data systems, pipelines, and metadata repositories to identify relationships among datasets, schemas, and processes.

When schema changes, pipeline logic adjustments, or new data sources appear, the Lineage Agent updates its graph automatically. This eliminates the need for static or manually built lineage diagrams.

Acceldata’s root-cause tracing allows you to understand exactly where and why a problem originated, improving both speed and accuracy in resolution.

For example, if a source table changes its schema unexpectedly, the agent can instantly reveal which downstream dashboards, machine learning models, or business reports will be affected.
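The downstream-impact lookup described here is, at its core, a graph traversal. The sketch below assumes lineage is available as a list of (upstream, downstream) edges and walks it breadth-first. The function name `downstream_assets` and the asset names are hypothetical and do not reflect how the Lineage Agent stores or queries its graph.

```python
from collections import deque

def downstream_assets(edges, source):
    """Breadth-first walk over a lineage graph; returns every asset reachable from source."""
    children = {}
    for upstream, downstream in edges:
        children.setdefault(upstream, []).append(downstream)
    impacted, queue = [], deque([source])
    seen = {source}
    while queue:
        node = queue.popleft()
        for child in children.get(node, []):
            if child not in seen:  # guard against revisiting shared or cyclic nodes
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted

# Hypothetical lineage edges: (upstream, downstream).
edges = [
    ("raw.orders", "staging.orders"),
    ("staging.orders", "marts.revenue"),
    ("staging.orders", "ml.churn_features"),
    ("marts.revenue", "dashboard.exec_kpis"),
    ("raw.customers", "ml.churn_features"),
]
impacted = downstream_assets(edges, "raw.orders")
```

A schema change on `raw.orders` would flag the staging table, the revenue mart, the churn feature set, and the executive dashboard, while `raw.customers` stays out of the result because it is a sibling source, not a descendant.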

The Lineage Agent runs on Acceldata’s xLake Reasoning Engine, which gives it contextual intelligence and the ability to interact through natural language.

Instead of static diagrams or periodic metadata scans, it offers real-time, auto-updated lineage visualization. Rather than requiring manual investigation across disparate tools, it provides instant root-cause tracing through impact graphs that integrate with other agents’ insights.

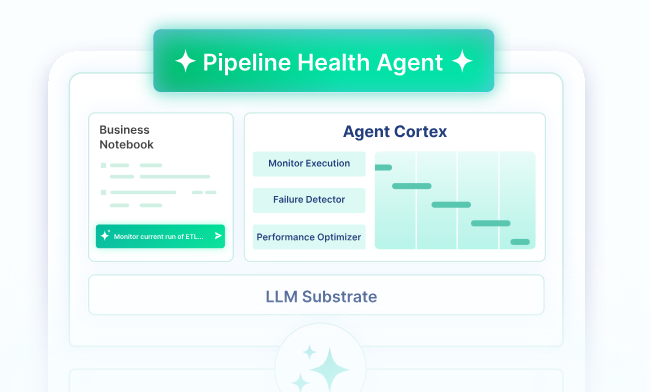

Pipeline Health Agent

Acceldata’s Data Pipeline Health Agent is designed to ensure reliability, efficiency, and resilience of complex data pipelines.

It operates as an intelligent observability layer that monitors the different stages of pipeline execution, tracking performance metrics, detecting anomalies, and identifying failures before they cause disruptions.

The agent uses AI-driven analytics to detect bottlenecks, predict failures, and automatically recommend optimization strategies based on workload patterns, seasonal trends, and system behavior.

It also allows HITL collaboration, where users can approve or delay automated runs, validate root cause analyses, adjust resource allocation, and fine-tune pipeline cadence in real time.

It integrates with other tools such as Airflow, Spark, or Databricks to reduce resource waste and ensure pipelines align with business SLAs.

Ultimately, the Data Pipeline Health Agent acts as an autonomous, self-improving system that keeps data operations healthy, cost-efficient, and continuously optimized.

Profiling Agent

Acceldata’s Data Profiling Agent provides an automated, intelligent approach to understanding the structure, quality, and behavior of your data.

It acts as the foundation of your data observability framework, as it analyzes datasets to generate detailed statistical profiles, assess schema structures, and identify anomalies or inconsistencies that could compromise data reliability.

It replaces manual sampling and SQL checks with autonomous profiling across structured and semi-structured data sources.

By tracking distributions and detecting drift, nulls, and outliers, it gives you immediate insight into how datasets evolve and where potential quality issues may arise.
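As a rough illustration of what a column profile can contain, the sketch below computes a null rate, a mean, and outliers using the common 1.5 × IQR rule. The function `profile_column` and the sample values are hypothetical, the outlier rule is a generic statistical convention and not necessarily what the Profiling Agent uses, and the code assumes a non-empty column with at least two non-null numeric values.

```python
from statistics import mean, quantiles

def profile_column(values):
    """Summarize one numeric column: null rate, mean, and IQR-based outliers."""
    present = [v for v in values if v is not None]
    null_rate = 1 - len(present) / len(values)
    # Quartiles of the non-null values; the middle cut point (median) is unused here.
    q1, _, q3 = quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "null_rate": null_rate,
        "mean": mean(present),
        "outliers": [v for v in present if v < low or v > high],
    }

# Hypothetical column with one null and one suspicious value.
profile = profile_column([10, 12, 11, None, 13, 12, 11, 95, 10, 12])
```

Comparing profiles like this one across successive runs is one simple way to notice drift: a null rate or mean that moves sharply between snapshots is worth a closer look.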

The Data Profiling Agent moves data profiling from a reactive, manual task into a proactive, automated process that drives quality, governance, and AI-readiness across the enterprise.

Acceldata's Observability in Action

As we stated previously, the company's approach to observability involves targeting five critical areas where data systems stall, misfire, or leak value.

We took a close look at each.

Data Quality

Enterprises can define their own quality checks within the platform using no-code and low-code rules. The profiling engine helps to set baselines by analyzing historical patterns, suggesting applicable quality checks, and tailoring rules to each dataset's behavior.

Quality metrics are summarized for batch and streaming flows, identifying where data integrity slips. When something breaks, notifications are sent, invalid records are isolated, and remediation workflows tied to the rule violated are triggered.

Data Pipeline Health

The Pulse engine tracks how data moves through multi-stage pipelines. It observes flow volume, job duration, and frequency changes, scoring and reporting on their reliability based on deviations from expected patterns.
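Scoring a run by its deviation from expected patterns can be sketched with a simple z-score against recent history. This is an assumption made for illustration, not a description of how Pulse computes reliability; the function names, the threshold of 3.0, and the sample durations are all hypothetical.

```python
from statistics import mean, stdev

def deviation_score(history, latest):
    """Z-score of the latest value against a historical baseline (needs 2+ history points)."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is an infinite deviation.
        return 0.0 if latest == mu else float("inf")
    return (latest - mu) / sigma

def is_anomalous(history, latest, threshold=3.0):
    """Flag the latest value when it sits more than `threshold` deviations from the mean."""
    return abs(deviation_score(history, latest)) > threshold

# Hypothetical job durations in seconds for the last seven runs.
durations = [120, 118, 125, 122, 119, 121, 123]
slow_run_flagged = is_anomalous(durations, 180)
normal_run_flagged = is_anomalous(durations, 124)
```

The same function works for flow volume or run frequency. A fixed threshold is the simplest choice, and seasonal workloads would likely need a baseline that accounts for time of day or day of week.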

With native support for Spark, Kafka, and Airflow, ADOC monitors the orchestration layer where jobs initiate, stream, and retry. It warns of skipped runs, excessive retries, and queue stalls before problems ripple into missed SLAs or downstream failures.

Data Infrastructure

Many tools stop at the pipeline. This product pushes deeper into the execution layer, tracing slowdowns to underlying storage, compute, or streaming systems.

When a job slows or fails, ADOC assists in pinpointing the source. Identifying the origin speeds root cause analysis and informs on appropriate resolution strategies.

In multi-cloud stacks, its platform-agnostic visibility into infrastructure performance notes key metrics like compute load, query lag, and network throughput, regardless of whether workloads run in cloud or on-prem environments.

Data Cost Optimization

Cloud spend often grows for the wrong reasons. Idle resources, bloated refresh schedules, and misaligned workloads slip by unnoticed.

ADOC connects storage costs to genuine activity. It surfaces which queries, tables, and services consume the most space and why. This data moves to dashboards highlighting usage patterns across the stack, giving platform leaders the insight to act before budgets overrun.

Rather than wait to review monthly invoices, platform leaders can spot these inefficiencies early and optimize cluster sizing and scheduling patterns before additional unnecessary expense is incurred.

Data Users & Access

ADOC tracks how people interact with data: what they access, how often, and with what tools. This visibility indicates how analysts, engineers, and applications engage with key assets.

Dashboards report the most active datasets, dormant assets, and those that may require tighter access controls based on their use. These insights are valuable for policy enforcement and strengthening the enterprise's security posture.

Acceldata isn’t an engine for governance, but it does expose a layer of activity that many platforms lack.

That context sets the stage for security.

Security & Deployment: Cloud-Ready, Enterprise-Grade

This product is geared toward enterprise environments demanding both agility and control. The platform is SOC 2 Type 2 certified and includes layered safeguards to protect sensitive data and infrastructure:

- Multi-factor authentication (MFA)

- Role-based access control (RBAC)

- Encryption at rest and in transit

- API key–based authentication

- Static IP support and penetration-tested architecture

SSO integrations simplify onboarding and access governance, especially in large organizations with convoluted identity structures.

Deployment is flexible as well. The product supports:

- Cloud-native platforms like AWS and GCP

- On-premise deployments for regulated or legacy systems

- Hybrid environments that require unified monitoring across both

Those controls check the right boxes for industries with strict regulatory requirements, such as financial services, healthcare, and telecom. Yet, while policy enforcement capabilities have matured under ADM, deeper governance integrations such as access certification, classification-driven controls, or audit workflows may still rely on external systems.

Strong security is one piece of the story. Real-world results tell the rest.

Acceldata DOC User Reviews and Market Feedback

User sentiment around Data Observability Cloud is mainly positive, especially among platform teams operating at scale.

⭐⭐⭐⭐ Ratings → G2 4.4/5 | Gartner 4/5

Pros

One standout for ADOC is time to value.

Several users report that full Snowflake monitoring was stood up in under 24 hours. Onboarding support also earns consistently high marks, with many reviewers citing hands-on guidance during product implementation and upgrades.

Pulse, an engine for tracking pipeline and infrastructure health, receives frequent praise.

Data engineers say it spotlights hidden slowdowns, simplifies Hadoop and Kafka monitoring, and helps to resolve numerous errors before they escalate.

Cons

Ease of use isn't universal, with some users noting a steep learning curve, especially for non-engineers or teams migrating from simpler tools. Several noted thin documentation that interfered with implementation, fine-tuning, and adoption.

Other reviews pointed to quirks in the UI, sluggish dashboard performance, and limited options for customizing alerts or visualizations.

Those reviews align closely with what we found in our own evaluation.

Where Acceldata Shines, and Where It Falls Short

Data Observability Cloud delivers on scale, speed, and technical coverage. Its platform effectively monitors sprawling infrastructure, handles high-throughput environments, and uncovers anomalies, often through built-in automation.

Support is another bright spot. Enterprise users consistently call out fast response times and proactive onboarding, although others point to gaps in documentation and post-launch guidance.

Snowflake usage insights and Hadoop compatibility are other standout capabilities. In mixed stacks, those features close long-standing visibility gaps for data engineers and platform leads.

But governance is where it comes up short.

- Policy-driven automation is available, but lacks tight coupling with data classification, access tiers, or risk scoring frameworks

- Governance workflows are improving, but may not support full ownership validation or audit trails

- Escalation rules based on SLA or business impact are still evolving

Lineage receives a similar grade of incomplete. While ADM provides interactive metadata visualization and dynamic lineage tracking, the depth of field-level tracing and exploration may not equal that of other platforms.

For companies with engineering-led ops and strong internal governance, ADOC offers substantial value. But if ownership, policy enforcement, and automated workflows are required, consider other solutions.

Is Acceldata Data Observability Cloud Right for You?

Yes, if:

- Complex on-prem, cloud, and multi-cloud deployments are in place

- Observability is owned by engineering

- Platform health and cost visibility are top priorities

No, if:

- Strong lineage and/or governance features are necessary

- Robust policy enforcement and ownership assignment are required

- Significant SLA-based or business-driven remediation is required

- Cross-functional users need direct access and utility

Sifflet vs. Acceldata: When to Choose Sifflet for Data Trust and Governance

End-to-end observability means no holes or blind spots.

That's Sifflet.

Here's what makes our Native-AI observability platform different:

- Built-in data governance

Sifflet embeds policy enforcement, ownership assignment, and audit-ready governance into its core. There's no need for external scripts or workflows to prove compliance or track accountability.

- Field-level lineage and interactive data catalog

Sifflet maps issues down to the column level and visualizes dependencies in a fully integrated, interactive catalog that supports impact analysis, audit prep, and root cause resolution.

- Trust scoring and SLA monitoring

Each dataset is scored by severity, business impact, and resolution progress. Sifflet shows which issues threaten executive dashboards or compliance KPIs, so teams fix what matters most first.

- Business context in every alert

Instead of generic "table changed" notifications, Sifflet tells you which dashboards, KPIs, or decisions are at risk. That means your team focuses on what actually impacts revenue, compliance, or executive reporting.

The result?

Observability that brings visibility to all of your data, prioritizes issues according to their business impact, and provides the complete context required to resolve them quickly.

Get your demo today to see Sifflet's Data Observability in action.
