Modern enterprises use an average of 5+ analytics engines to query the same data lake, increasing the risk of silent failures when metadata drifts between tools.

Open Table Formats enable this flexibility, but without observability, inconsistencies across Spark, Trino, and Snowflake often go undetected until business dashboards break.

What Are Open Table Formats?

An Open Table Format (OTF) establishes the rules for organizing, versioning, and tracking data storage in data lakes. It is an open-source logic layer between raw data files and the compute engines that query them.

Open Table Formats, such as Apache Iceberg, Delta Lake, and Apache Hudi, move the data intelligence layer formerly locked within vendor solutions to the customer's own cloud storage environment.

Storing table logic in an open metadata layer allows enterprises to retain complete control over their data assets regardless of the platforms used to query them (e.g., Spark, Trino, or Snowflake).

OTFs share several common technical attributes:

OTFs like Iceberg and Delta Lake add an ACID-compliant metadata layer that acts as a gatekeeper. Compute engines check this metadata to determine which files are officially part of the table. If a write job crashes halfway through, the OTF never records those partial files, so BI tools continue reading the last healthy version of the data.

Metadata-driven intelligence enables advanced data skipping by ignoring irrelevant files, reducing cloud computing costs and query latency.

Adding and renaming columns, or other structural changes, can be made without rewriting the underlying data. Tables can evolve to meet maturing business needs, with strict schema enforcement serving as a quality gate.

Continuous snapshots of the metadata and file manifests create a permanent record of every change. Historical versioning allows auditing past states for compliance or instant recovery from accidental mistakes by rolling back the table state to a previous snapshot.

Open Table Format Leaders: Iceberg, Delta, and Hudi

OTFs are dominated by three primary standards, each born from a distinct operational need:

- Apache Iceberg: Developed at Netflix, it was designed for performance and management of massive datasets on S3. Engine-agnostic, Iceberg excels at read performance.

- Delta Lake: Created by Databricks, Delta Lake brings ACID transactions and reliability to Apache Spark workloads.

- Apache Hudi: Developed at Uber, Hudi is a format designed for high-volume streaming data.

How to Manage the Risks of Distributed Table Logic

While OTFs offer architectural freedom, they also bring new responsibilities. With table health no longer handled by the vendor, the responsibility for maintaining the integrity, performance, and synchronization of the data layer shifts entirely to the enterprise.

Data observability provides the cross-layer visibility needed to monitor the health of metadata and the data it represents.

- Cross-engine drift

Multi-engine environments are naturally prone to errors in metadata interpretation. Since each query engine uses its own connector to read the table manifest, a successful schema update in one tool can go unnoticed or be incompatible in another.

Data observability compares how each engine perceives the same table schema to identify gaps.

- Metadata bloat

Constant snapshots create mountains of metadata for engines to sift through. Thousands of outdated files lead to bloat that slows query performance and drives up cloud costs as systems spend more time navigating table structure than processing data.

Observability monitors metadata file counts and snapshot age, alerting when it's time to trigger compaction or vacuuming cycles.

- Catalog ghost data

Failures in handshakes between physical metadata and logical pointers create catalog drift. A disconnect results in ghost data: records physically present in storage but invisible to compute engines.

Data observability automates reconciliation between the data catalog and physical storage to find and remove these hidden records.

- The complexity of shared ownership

Identifying the tool responsible for a failure is difficult in a decoupled stack.

Cross-engine lineage, powered by observability, offers the forensic record needed to trace a metadata error back to the specific pipeline or engine that wrote it.

Open Table Formats: Universal Accessibility

Open Table Formats create a universal metadata layer that allows data to be accessed by any analytics or processing tool without moving or copying the underlying files.

By standardizing how table metadata is defined and stored, OTFs enable engines such as Spark, Trino, and Snowflake to interact with the same datasets consistently. This shared approach reduces data silos, lowers storage and pipeline complexity, and gives organizations long-term flexibility as tools and platforms change.

Enterprises adopt OTFs because they support mission-critical workloads that demand both freshness and reliability.

For example, formats like Apache Hudi and Delta Lake support near-real-time updates through upserts, allowing fraud detection systems to operate on the most current data instead of waiting for batch processing cycles. This improves the speed and accuracy of fraud prediction while keeping data management centralized.

OTFs also simplify secure data sharing by allowing organizations to expose live tables directly from their own cloud storage.

Using formats such as Apache Iceberg or Delta Lake, enterprises can grant partners access to current data without copying it or maintaining complex ETL pipelines. Because the data remains in the owner’s environment and is governed through metadata, sharing becomes both more efficient and more secure.

In addition, Open Table Formats make data inherently ready for AI and machine learning workloads.

Their support for schema evolution, versioning, and governance ensures that training datasets are consistent and auditable, which is critical for building reliable models. The same versioning capabilities allow time travel, allowing teams to examine exactly how a dataset looked at a specific point in time.

In supply chain operations, this makes it possible to investigate delays or discrepancies by replaying historical data states and understanding how changes affected downstream decisions.

The Metadata Handshake

Translating these business use cases into reality requires a precise handshake between physical data and a Data Catalog. While the OTF manages file-level details, the catalog acts as the enterprise registry through three primary functions:

Physical-to-Logical Mapping: OTFs identify the physical files that form a table at any given moment. Data catalogs route queries to the most recent version.

Automated Discovery: Data catalogs harvest metadata from OTF manifests. Catalogs keep the enterprise registry current as tables evolve with new columns or partitions.

Cost Efficiency through Pruning: Metadata-driven pruning helps compute engines skip irrelevant files. Logic within the metadata reduces query costs and processing time by reading only the required fragments.

The Reliability Gap

The integration between the Table Format and the data catalog is where many "open" architectures fail. If the catalog's logical pointer drifts away from the table's physical metadata, data becomes "invisible" or stale.

Reliability gaps like these make observability mandatory. Data observability provides the automated reconciliation needed to verify that physical data and logical catalog pointers remain in sync.

Manage OTFs with Sifflet

Adopting an Open Table Format creates a vendor-neutral data stack. But it also introduces a layer of distributed logic that lies beyond the reach of traditional monitoring.

Sifflet Data Observability manages the unique operational risks inherent in OTFs through:

- Metadata Harvesting to automatically collect and analyze manifest files and transaction logs from Iceberg, Delta, and Hudi.

- Automated Reconciliation that compares the physical state of data in cloud storage against the logical state registered in the data catalog.

- Behavior Analysis that uses machine learning to understand system behavior and to detect anomalies in metadata updates, flag unexpected schema changes, and spot volume drops before they disrupt downstream processes.

From OTF Oversight to Operational Advantage

Turning Open Table Format signals into measurable business value requires observability tools designed specifically for lakehouse architectures and multi-engine environments.

Because OTFs operate across different compute engines and rely heavily on metadata, organizations need visibility that goes beyond traditional pipeline monitoring and surface-level data quality checks.

Sifflet provides this visibility by acting as a unified metadata control plane across the entire data stack.

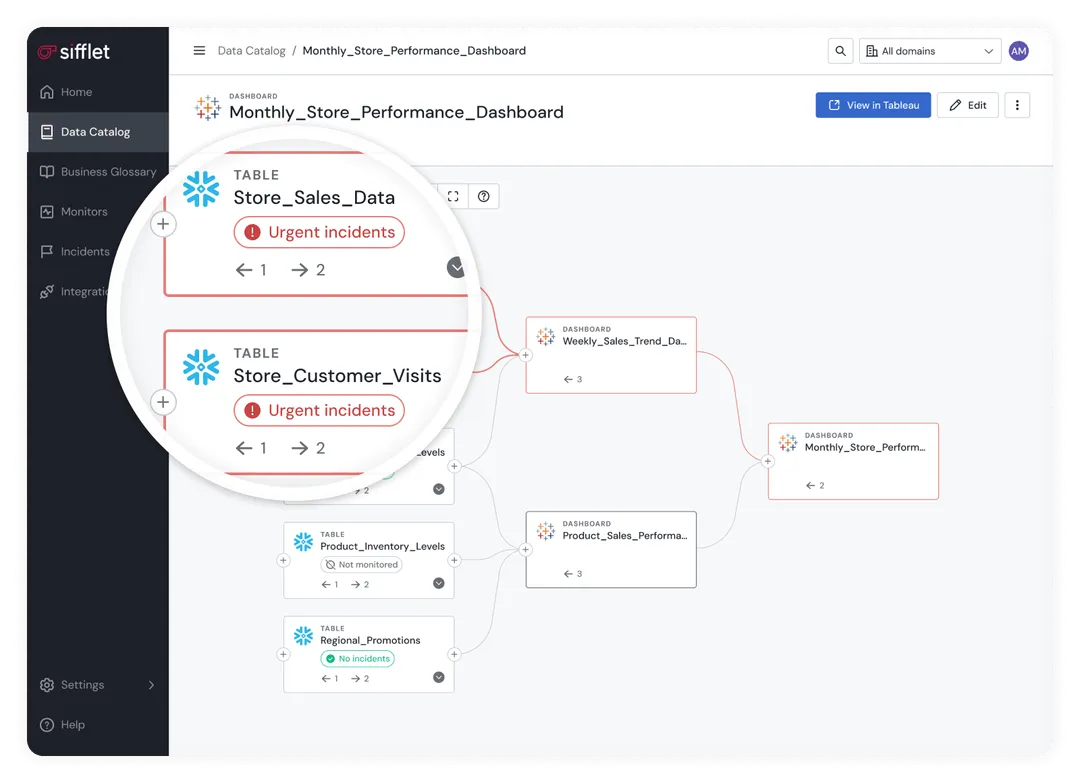

It continuously captures and analyzes active metadata to track how data flows between engines, how tables evolve over time, and how operational changes affect downstream consumers. By mapping cross-engine lineage, Sifflet makes dependencies explicit, allowing teams to understand how a change in one system impacts analytics, BI tools, or machine learning workloads elsewhere.

This active metadata foundation also enables early detection of schema drift, such as when a column is added, renamed, or removed. Instead of discovering issues after dashboards or models fail, teams are alerted immediately, preventing silent breakages caused by mismatches between table definitions and consuming tools. At the same time, Sifflet monitors the operational health of Open Table Formats by providing visibility into critical maintenance processes like compaction and snapshot retention. Automated alerts flag metadata bloat before it degrades query performance or increases storage costs.

When failures do occur, Sifflet accelerates resolution through automated root cause analysis. Its AI agents identify the exact pipeline, engine, or operation responsible for a conflict, reducing troubleshooting from hours to minutes. Unlike traditional observability tools that focus narrowly on data quality or pipeline uptime, Sifflet is built to observe the metadata-driven behavior of Open Table Formats themselves, enabling organizations to move from reactive oversight to proactive operational advantage.

Future-Proofing Your Open Data Architecture

The shift to an Open Data Architecture offers a welcome move toward data sovereignty and flexibility. Yet as data logic migrates to the metadata layer, observability must evolve alongside it.

Operating a reliable, multi-engine lakehouse requires a metadata-first approach that treats state changes as primary operational signals. Sifflet provides the intelligent control plane needed to preserve the flexibility of a decoupled stack without the chaos of unmanaged metadata.

Don't let your metadata become a silent liability. Book a Demo with Sifflet today to see how active metadata observability can secure your Open Data Stack.

-p-500.png)