Observability tells you your engine is smoking and why.

Agentic Data Management pulls the car over and fixes the leak.

The distinction between the two lies in the gap between detection and resolution. One gives you the ground truth of your data health; the other provides the autonomous rationale to act on it.

Understanding the bridge between the two is the difference between managing a dashboard and managing a self-healing enterprise.

Let’s give this topic a quick spin.

What Is Data Observability?

Data observability is the practice of monitoring, understanding, and ensuring the health of your data systems so you can trust the data that powers your business decisions and data products, such as recommendation engines or AI models.

Basically, it is a quality control for your modern data stack.

Data is the raw material of the modern enterprise. Whether you're power-steering a business via executive dashboards or training a new LLM, your outcomes are only as good as your inputs.

Data observability tools provide the high-fidelity visibility needed to keep those inputs clean, consistent, and ready for use.

Maintaining this quality requires monitoring five specific data dimensions.

- Freshness: Monitoring update frequency against expected schedules.

- Distribution: Audits the integrity of values within a dataset.

- Volume: Tracks significant fluctuations in row counts.

- Schema: Detects structural changes at the source, such as renamed fields or altered data types.

- Lineage: Maps the lifecycle and interactions of data from source to consumption.

The Value of Data Observability

Data observability is the difference between time spent firefighting and building for future growth. It transforms the stack into a production-grade environment for:

- AI and machine learning

AI and ML models and platforms can be used with confidence that their underlying inputs will remain clean and consistent using AI observability.

- Production-grade data operations

Observability provides the rigor, predictable SLAs, and reliability expected of mission-critical software products via ai monitoring.

- Faster incident resolution

Isolating the root cause across complex pipelines, observability replaces cross-team confusion with coordinated remediation.

Observability provides the ground truth, paving the way for more advanced, agentic automation.

What Is Agentic Data Management?

Agentic Data Management (ADM) is the evolution of data operations from static, rule-based automation to goal-oriented, autonomous systems. Unlike traditional methods that require manual configuration for every scenario, ADM leverages LLMs to reason over metadata and execute complex tasks across the data stack.

The shift to agentic systems is already well underway. Deloitte reports that 25% of firms using GenAI are expected to deploy AI agents, a figure projected to grow to 50% by 2027.

The DNA of an Agentic System

Agentic data management extends decision-making beyond the limitations of "if-then" logic. These systems don't just follow scripts; they pursue high-level objectives through three core capabilities:

- Autonomous orchestration: Agents interpret their environment, reading documentation and catalogs, to decide which tools to trigger and in what order.

- Goal-driven execution: An agent reasons through the specific steps required to achieve a goal or task, adjusting as circumstances change.

- Self-correction: Agents diagnose failures by analyzing logs and metadata, modifying their own parameters to resolve issues and resume progress without human intervention.

The Pivot: Sensing vs. Acting

In manual processes, the engineer provides both the rationale to determine what’s necessary and the physical involvement to perform the task.

In an agentic world, the system assumes both roles by pairing two interdependent layers.

Observability is the sensing layer. It supplies the high-fidelity ground truth an agent needs to make informed decisions.

Agentic Management is the execution layer. It’s the brain and hands. The agent interprets signals from the sensing layer to develop and execute a logic-based plan across the stack.

The Need For Verifiable Autonomy

The primary barrier to adopting agentic systems is the risk of automated chaos. An agent that can act but cannot sense is a liability.

If the underlying metadata is compromised, the agent may diligently execute a fix that actually breaks production.

Pairing agentic execution with a robust observability layer creates a system of verifiable autonomy. Observability serves as a guardrail, with AI monitoring verifying that data signals are accurate before the agent is allowed to modify a line of code or redirect a pipeline.

This keeps the system self-correcting rather than just self-operating.

How Sifflet Delivers Data Observability

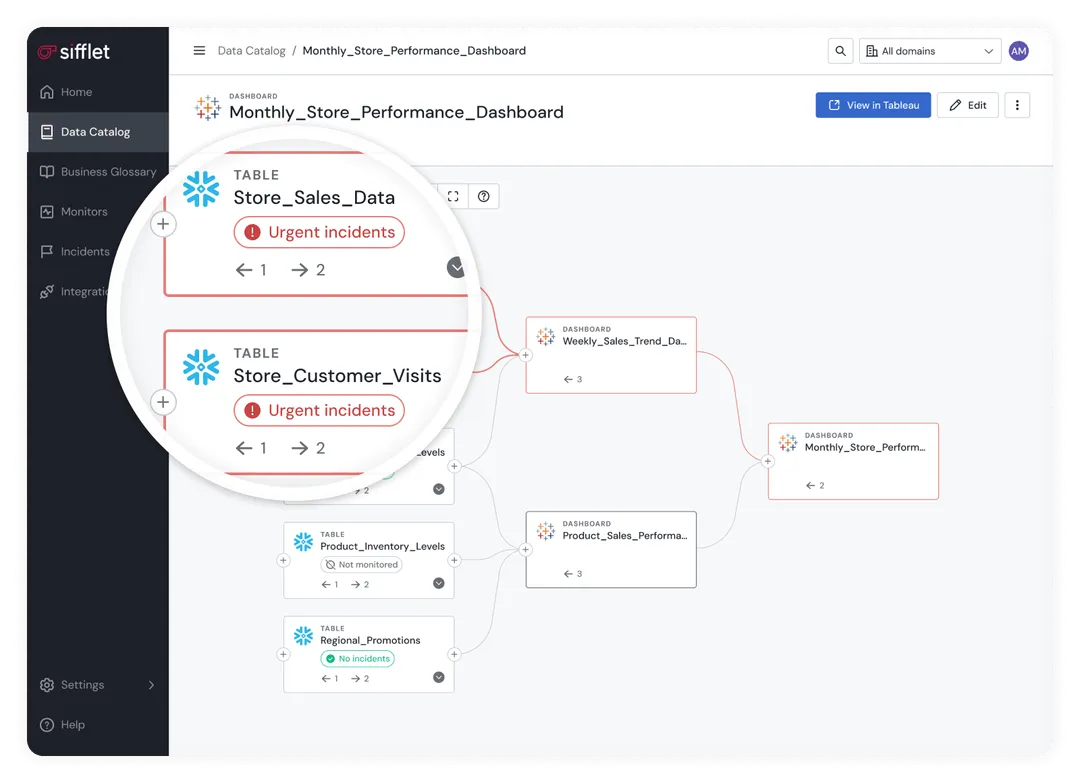

Sifflet is an AI-native observability platform designed to handle the complexity of the modern data stack. Sifflet is metadata-first, an AI data observability tool that transforms raw technical signals into high-fidelity business context, providing the ground truth that both human teams and autonomous agents require to operate with certainty.

The platform unifies three main technologies to give you a complete view of your data's health:

- Machine learning-powered monitoring: Instead of manual threshold tuning, Sifflet automatically learns the specific rhythms of your data. The platform identifies subtle anomalies across the five pillars of health while filtering out noise that can cause alert fatigue.

- Field-level data lineage: This is your map. Sifflet traces data from ingestion through to BI reporting. When a dashboard metric looks off, lineage lets your team trace back to the root cause of the failure in minutes rather than days.

- A business-context catalog: Data is only useful if people understand it. The catalog enriches technical metadata with business definitions, clarifying ownership of the data and its sensitivity, bridging the gap between engineers and end users.

Sifflet's Agentic Observability

Sifflet introduces a suite of purpose-built AI agents, Sentinel, Sage, and Forge, acting as the connective tissue between sensing problems and resolving them:

Sentinel doesn't wait for a crash. It analyzes your metadata and lineage to recommend strategic quality checks before failures occur.

Sage handles investigations when incidents occur. It generates a narrative that explains exactly what went wrong, why it happened, and which business-critical processes are at risk of broken data.

Forge closes the loop by drafting tailored fixes. Based on historical patterns and your specific operational history, it provides the code or configuration changes needed to get things back on track.

Sifflet provides the foundation for Data Trust. Whether a human or an agent takes the next step, Sifflet provides the history, visibility, and context needed to keep enterprise data reliable.

When to Choose Observability vs. Agentic Management

Agentic systems can’t act if they can’t see.

Before you think about autonomous remediation, you need clarity, trust, and context in your data environment.

Observability should be your first move when confidence in the data is shaky.

If teams regularly question dashboards, double-check numbers in spreadsheets, or argue about “whose data is right,” that’s a trust problem, not an automation problem. The same applies when incident resolution is slow and manual, with engineers spending hours tracing failures across layers of SQL and pipelines.

In regulated environments, observability is also non-optional: you need a clear, human-auditable record of what happened, when it happened, and why. And if changes to a single source feel risky because no one fully understands downstream impact, visibility has to come before autonomy.

Agentic management becomes the right choice once that foundation is firmly in place.

When your observability layer is mature and producing reliable signals, agents can act without guessing. This is especially valuable when scale overwhelms headcount, when there are simply too many pipelines for humans to investigate every alert.

Repetitive failures are another strong signal: if the same errors keep appearing and always require the same fix, those workflows are ideal for autonomous remediation.

Finally, if downtime carries a direct and growing financial cost, waiting for human intervention is no longer acceptable; systems need the ability to detect, decide, and repair in real time.

You can simplify the decision with this checklist:

Choose observability if:

- Users don’t trust dashboards or data accuracy

- Incident resolution is slow and highly manual

- Compliance and auditability are critical

- Downstream data impact is unclear or risky

Advance to agentic management if:

- Observability signals are reliable and high-fidelity

- Pipeline scale exceeds team capacity

- Failures are repetitive with known fixes

- Downtime has immediate financial impact

Sifflet Observability for Safe Agentic Data Management

Observability delivers the infrastructure for trust, while agentic management provides the path to scale.

Establishing a complete view of data health lays the foundation for offloading mechanical reasoning to agents and moving engineering teams away from routine maintenance and back to high-value innovation.

Book a demo with Sifflet today to see how our AI observability platform can help you build a reliable, trust-first agentic data foundation.

.avif)

-p-500.png)